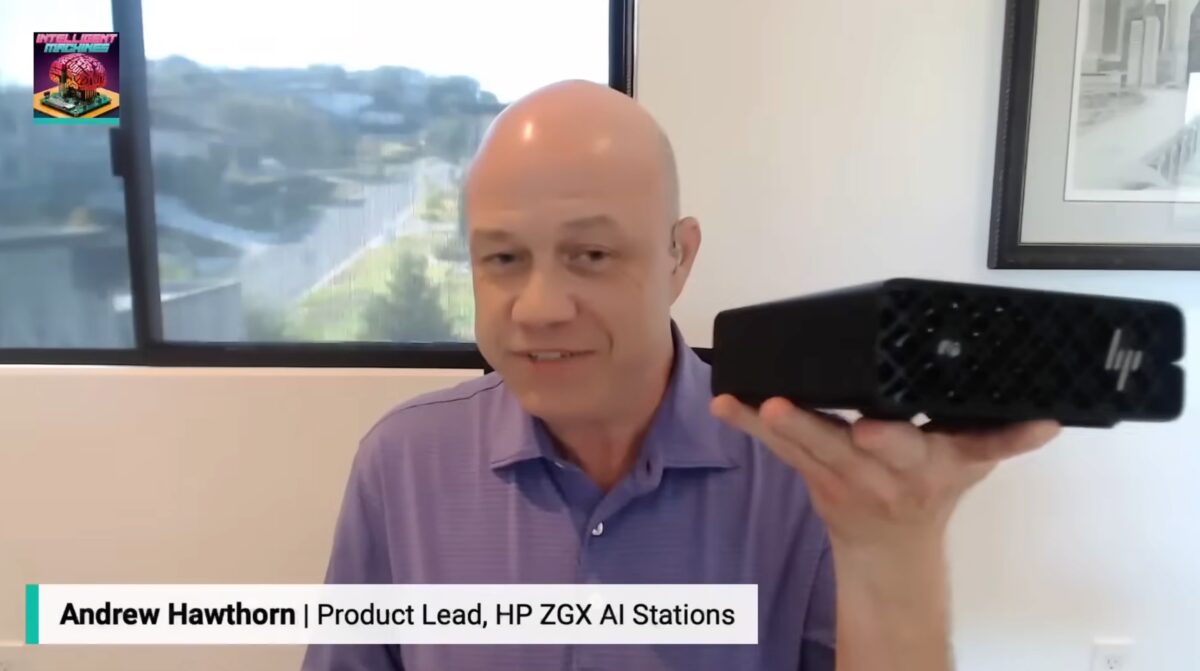

On Wednesday, HP’s Andrew Hawthorn (Product Manager and Planner for HP’s Z AI hardware) and I appeared on the Intelligent Machines podcast to talk about the computer that I’m doing developer relations consulting for: HP’s ZGX Nano.

You can watch the episode here. We appear at the start, and we’re on for the first 35 minutes:

A few details about the ZGX Nano:

-

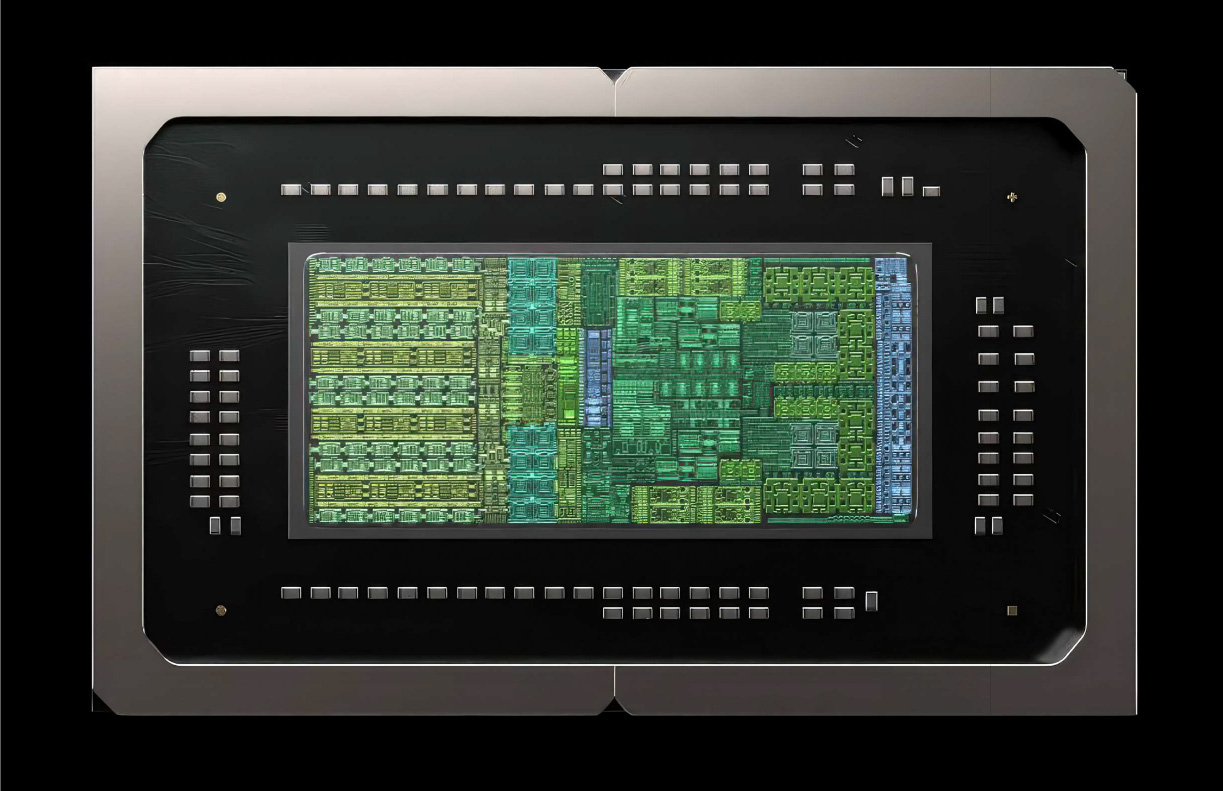

It’s built around the NVIDIA GB10 Grace Blackwell “superchip,” which combines a 20-core Grace CPU and a GPU based on NVIDIA’s Blackwell architecture.

- Also built into the GB10 chip is a lot of RAM: 128 GB of LPDDR5X coherent memory shared between CPU and GPU, which helps avoid the kind of memory bottlenecks that arise when the CPU and GPU each have their own memory (and usually, the GPU has considerably less memory than the CPU).

- It can perform up to about 1000 TOPS (trillions of operations per second), or 10^15 operations per second, and can handle model sizes of up to 200 billion parameters.

- Want to work on bigger models? By connecting two ZGX Nanos together using the 200 gigabit per second ConnectX-7 interface, you can scale up to work on models with 400 billion parameters.

- ZGX Nano’s operating system is NVIDIA’s DGX OS, which is a version of Ubuntu Linux with additional tweaking to take advantage of the underlying GB10 hardware.
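To get a feel for how 128 GB of memory relates to those 200 and 400 billion parameter figures, here’s a rough back-of-the-envelope sketch. It makes some loud assumptions that aren’t from the spec list above: it counts only the model weights (no activations, KV cache, or operating system overhead), it uses decimal gigabytes, and it treats 4-bit quantized weights as the case that matters. The function names `weight_memory_gb` and `fits_in_memory` are just illustrative.

```python
# Rough estimate of the memory needed to hold a model's weights.
# Assumptions (mine, not from the spec list): weights only, decimal
# gigabytes, and no allowance for activations, KV cache, or the OS.

GB = 10**9                 # decimal gigabyte
UNIFIED_MEMORY_GB = 128    # per ZGX Nano, from the spec list above

# Sanity check on the TOPS figure: 1000 trillion operations is 10^15.
assert 1000 * 10**12 == 10**15


def weight_memory_gb(parameters: int, bits_per_parameter: int) -> float:
    """Approximate size of the model weights in gigabytes."""
    return parameters * bits_per_parameter / 8 / GB


def fits_in_memory(parameters: int, bits_per_parameter: int, units: int = 1) -> bool:
    """True if the weights alone fit in the combined memory of `units` boxes."""
    return weight_memory_gb(parameters, bits_per_parameter) <= units * UNIFIED_MEMORY_GB


if __name__ == "__main__":
    for label, params in [("200B", 200 * 10**9), ("400B", 400 * 10**9)]:
        for bits in (16, 8, 4):
            size = weight_memory_gb(params, bits)
            print(
                f"{label} at {bits}-bit: {size:.0f} GB | "
                f"one box: {fits_in_memory(params, bits, 1)} | "
                f"two boxes: {fits_in_memory(params, bits, 2)}"
            )
```

Under these assumptions, a 200 billion parameter model at 4 bits per weight needs about 100 GB, which fits in one box, and a 400 billion parameter model needs about 200 GB, which fits in the 256 GB that two linked boxes provide. At 8 or 16 bits per weight, neither model fits, so treat the headline figures as depending heavily on quantization.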

Some topics we discussed:

- Model sizes and AI workloads are getting bigger, and developers are getting more and more constrained by factors such as:

  - Increasing or unpredictable cloud costs

  - Latency

  - Data movement

- There’s an opportunity to “bring serious AI compute to the desk” so that teams can prototype their AI applications and iterate locally

- The ZGX Nano isn’t meant to replace large datacenter clusters for full training of massive models. It’s aimed at “the earlier parts of the pipeline,” where developers do prototyping, fine-tuning, smaller deployments, inference, and model evaluation

- The Nano’s 128 gigabytes of unified memory gets around the bottlenecks that come with separate CPU and GPU memory, allowing bigger models to be loaded on a local box without “paging to cloud” or being forced into distributed setups early

- While the cloud remains dominant, there are real benefits to local compute:

  - Shorter iteration loops

  - Immediate control and data privacy

  - Less dependence on remote queueing

- We expect that many AI development workflows will hybridize: a mix of local box and cloud/back-end

- The target users include:

  - AI/ML researchers

  - Developers building generative AI tools

  - Internal data-science teams fine-tuning models for enterprise use cases (e.g., inside a retail, insurance, or e-commerce firm)

  - Maker and developer communities

- The ZGX Nano is part of the “local-to-cloud” continuum

- The Nano won’t cover all AI development…

  - For training truly massive models, beyond the low hundreds of billions of parameters, the datacenter/cloud will still dominate

  - ZGX Nano’s use case is “serious but not massive” local workloads

- Is it for you? Look at model size, number of iterations per week, data sensitivity, latency needs, and cloud cost profile

One thing I brought up that seemed to capture the imagination of hosts Leo Laporte, Paris Martineau, and Mike Elgan was the MCP server that I demonstrated a couple of months ago at the Tampa Bay Artificial Intelligence Meetup: Too Many Cats.

Too Many Cats is an MCP server that an LLM can call upon to determine if a household has too many cats, given the number of humans and cats.

Here’s the code for a Too Many Cats MCP server that runs on your computer and works with a local Claude client:

```python
from typing import TypedDict

from mcp.server.fastmcp import FastMCP

mcp = FastMCP(name="Too Many Cats?")


class CatAnalysis(TypedDict):
    """Structured result returned to the LLM."""
    too_many_cats: bool
    human_cat_ratio: float


@mcp.tool(
    annotations={
        "title": "Find Out If You Have Too Many Cats",
        "readOnlyHint": True,
        "openWorldHint": False
    }
)
def determine_if_too_many_cats(cat_count: int, human_count: int) -> CatAnalysis:
    """Determines if you have too many cats based on the number of cats and a human-cat ratio."""
    # Cats per human; a household with no humans gets a ratio of 0
    # to avoid dividing by zero.
    human_cat_ratio = cat_count / human_count if human_count > 0 else 0
    # Three or more cats per human counts as too many.
    too_many_cats = human_cat_ratio >= 3.0
    return CatAnalysis(
        too_many_cats=too_many_cats,
        human_cat_ratio=human_cat_ratio
    )


if __name__ == "__main__":
    # Initialize and run the server
    mcp.run(transport='stdio')
```
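If you’d like to poke at the “too many cats” rule without installing the MCP SDK or wiring up a client, here’s a minimal standalone sketch of the same logic. It’s a restatement of the tool’s body as a plain function, and the name `analyze_household` is just an illustrative stand-in, not part of the server above.

```python
from typing import TypedDict


class CatAnalysis(TypedDict):
    too_many_cats: bool
    human_cat_ratio: float


def analyze_household(cat_count: int, human_count: int) -> CatAnalysis:
    """Same rule as the MCP tool, minus the MCP decorator (illustrative name)."""
    # Cats per human, with 0 used when there are no humans.
    ratio = cat_count / human_count if human_count > 0 else 0
    return CatAnalysis(too_many_cats=ratio >= 3.0, human_cat_ratio=ratio)


if __name__ == "__main__":
    # (cats, humans) pairs chosen to sit below, on, and above the threshold,
    # plus the zero-humans edge case.
    for cats, humans in [(2, 2), (6, 2), (7, 2), (5, 0)]:
        print(f"{cats} cats, {humans} humans -> {analyze_household(cats, humans)}")
```

Two things stand out when you run it: the threshold is inclusive, so exactly three cats per human already counts as too many, and a household with zero humans gets a ratio of 0 and is never flagged, no matter how many cats it has.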

I’ll cover writing MCP servers in more detail on the Global Nerdy YouTube channel — watch this space!