This was just too good and too timely to save for Saturday’s picdump. Enjoy!

And don’t forget the “official unofficial” Bitcoin logo:

Standard time, daylight saving time — can we just pick one and stick with it instead of switching twice a year?

Here’s a handy tip from the first episode of the 1975 TV series Space: 1999 that seems tailor-made for the current era of AI. Keep it in mind!

(And in case you’re curious, here’s that episode, and I’ve even cued it up to the scene where the computer displays that message…)

Be especially nice to your sysadmins today, because a bad update from CrowdStrike’s Falcon anti-threat system has blue-screened a lot of computers worldwide. They’re now working in “surprise guest mode.”

Some techies hold the attitude that “what I do is important, and what you do isn’t,” and the more socially savvy ones don’t say the quiet part out loud.

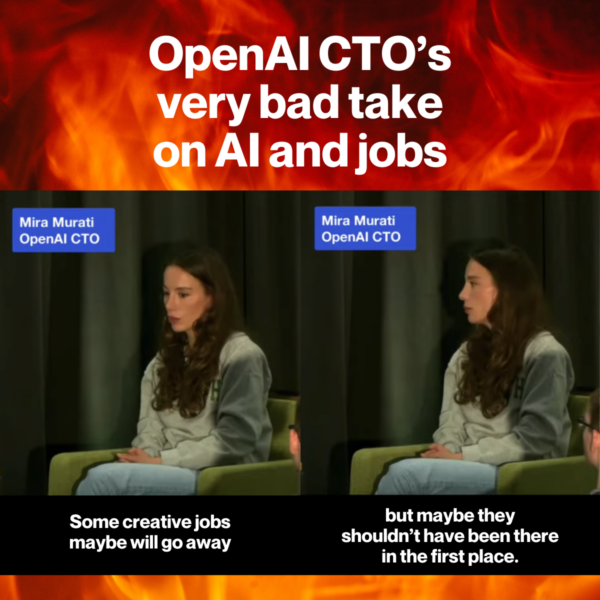

But Mira Murati, OpenAI’s CTO, did just that onstage at her alma mater, Dartmouth College, where she said this about AI displacing jobs in creative lines of work:

Some creative jobs maybe will go away, but maybe they shouldn’t have been there in the first place.

Mira Murati, from AI Everywhere: Transforming Our World, Empowering Humanity

(she says this around the 29:30 mark)

Here’s my take on her bad take, courtesy of the Global Nerdy YouTube channel, which you should subscribe to…

…and here’s the video with her full talk at Dartmouth:

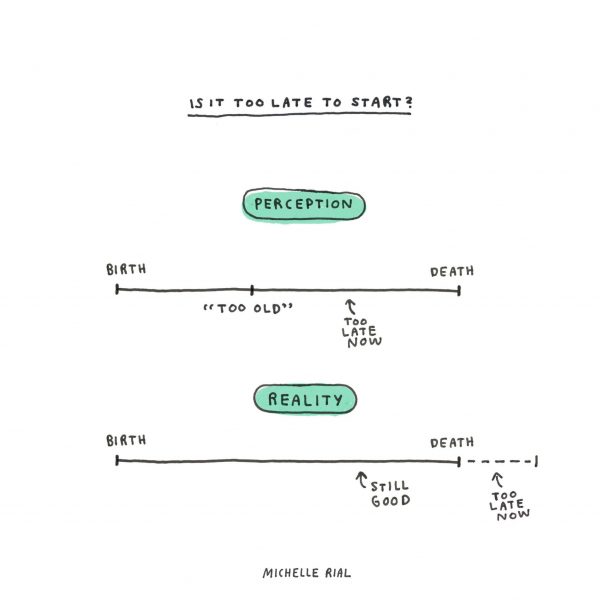

Happy New Year, fellow techies! As is tradition on this blog, the first post of the first day back to work is the very important reminder above, which was created by graphic designer Michelle Rial.

If you like this infographic, you might also like her book of similar infographics, Maybe This Will Help.

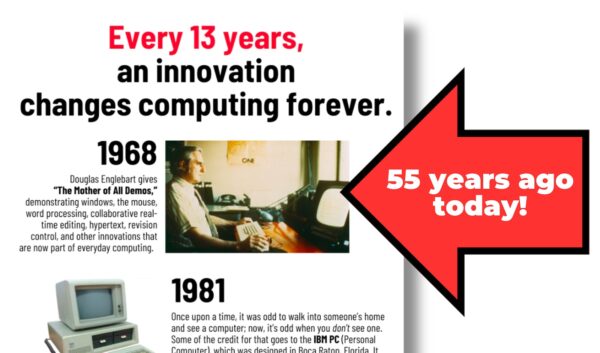

My poster from May, titled Every 13 years, an innovation changes computing forever, theorizes that roughly every thirteen years, a new technology appears, and it changes the way we use computers in unexpectedly large ways.

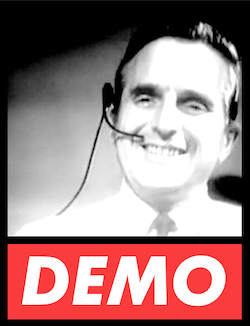

The first entry in my list was an exception because it didn’t feature just one technology, but a number of them. It was “The Mother of All Demos,” a demonstration of technologies that are part of our everyday life now, but must have seemed like pure science fiction at the time, December 9, 1968 — 55 years ago today.

In the demo, computer scientist Douglas Engelbart demonstrated:

Rather than continue to tell you about it, it’s so much easier to simply show it to you:

Happy 55th anniversary, Mother of All Demos, and thank you, Dr. Engelbart!