It’s been about a week since Gemini 3 came out, and I’ve been hearing some really good things about it. So I’m trying out Google’s “try Gemini 3 Pro for free for a month” offer, putting it to as much use as the account will allow, and posting my findings here.

Gemini 3 and context entropy

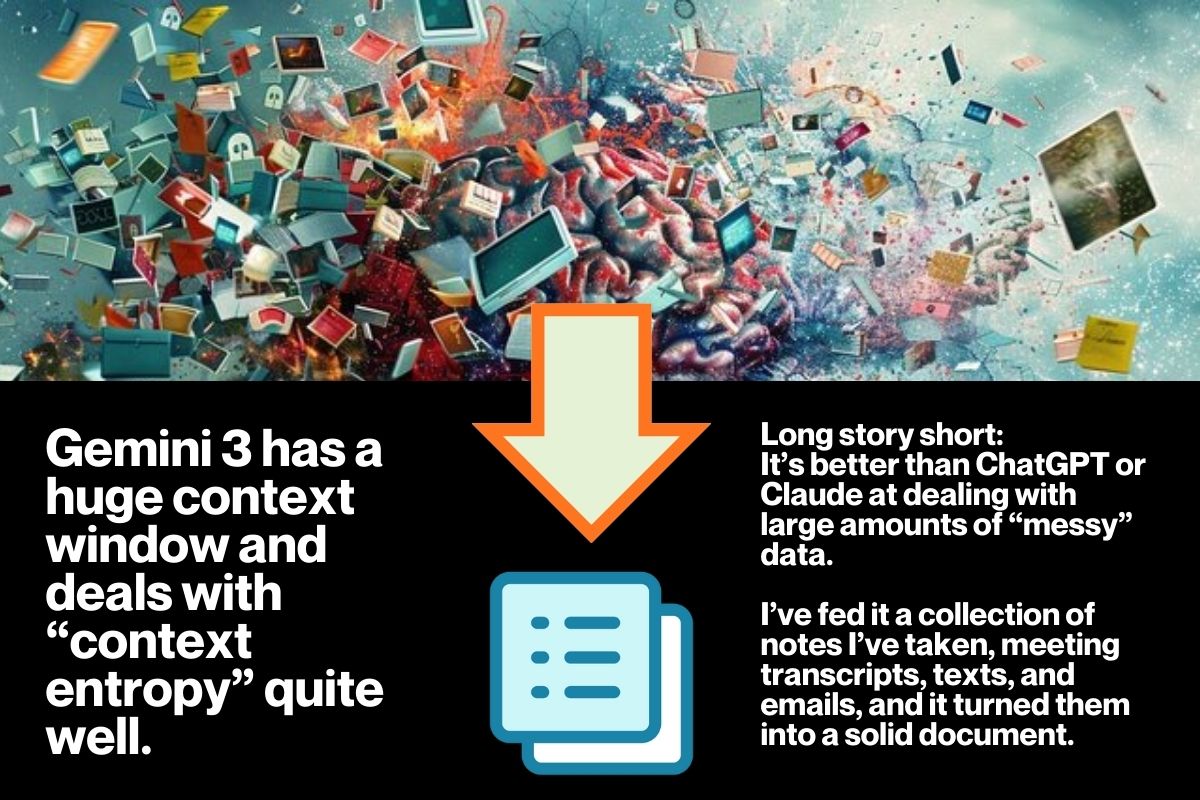

Gemini 3, probably owing to its roots at Google, seems to handle “context entropy” better than ChatGPT or Claude. By “context entropy,” I mean “messy data,” with the disorder and chaos that you expect to find in notes and documents that you accumulate over time. Unless you’ve put in a lot of time, you probably haven’t put this information into much of a structured form or created some kind of “map” that explains how (or even if) the various parts of the information are related to each other.

Gemini 3 does a good job of taking chaotic information and finding the signal. I recently fed it a collection of…

- Job descriptions for positions that I’ve applied for

- Various versions of my resume, each one tuned for a specific job application

- Cover letters for each job application

- Screenshots of job application forms, where I had to answer pre-screening questions posed by prospective employers

- Notes from interviews with recruiters and prospective employers

- Video recordings of the aforementioned interviews, which took place on Zoom, Teams, or Google Meet (with the approval of the other parties, of course)

- Slide presentations that I gave as part of the interview process

- Follow-up emails, texts, and other messages

- “Conversations” with ChatGPT and Claude through the job search process, from generating customized cover letters and resumes all the way to post-mortems

…and it’s produced some useful stuff, including a strategy document that I plan to use in my job search going forward. (More on that in a later post here and in a video on the Global Nerdy YouTube channel.)

Gemini 3’s huge context window

The other notable thing about Gemini 3 is its context window, which is an AI model’s working memory, or the maximum amount of information that it can work with in any given chat session. Gemini 3 Pro’s context window is a huge 1 million tokens, or roughly 750,000 words (a token is a small chunk of language that lies somewhere between a character and a full word).

This big context window means that it’s possible to feed Gemini 3 a LOT of data, which could be a big application codebase, a couple of textbooks, lots of notes or transcripts, or any other big pile of messy data that you’re trying to extract meaning from.
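
If you want a quick sanity check on whether your own pile of documents is likely to fit, here’s a minimal back-of-the-envelope sketch in Python. It uses only the rule of thumb above (1 million tokens ≈ 750,000 words), not a real tokenizer, so treat the numbers as rough estimates. The function names and the `reserve_tokens` parameter are my own inventions for illustration; they aren’t part of any Gemini API.

```python
import math

# Figures from the rule of thumb above; real token counts depend on the
# model's tokenizer and will differ, especially for code or non-English text.
CONTEXT_WINDOW_TOKENS = 1_000_000
WORDS_PER_TOKEN = 0.75  # 750,000 words / 1,000,000 tokens


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of text from its whitespace word count."""
    word_count = len(text.split())
    return math.ceil(word_count / WORDS_PER_TOKEN)


def fits_in_window(documents: list[str], reserve_tokens: int = 0) -> bool:
    """Check whether all documents together fit in the context window.

    reserve_tokens (hypothetical parameter) sets aside room for your
    prompt and the model's reply.
    """
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserve_tokens <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    notes = ["Notes from my interview with the recruiter", "Follow-up email text"]
    print(sum(estimate_tokens(doc) for doc in notes), "tokens (estimated)")
    print("Fits:", fits_in_window(notes, reserve_tokens=10_000))
```

For anything where the exact count matters, you’d want the token count reported by the model provider’s own tooling rather than this estimate.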

I’m going to be working heavily with Gemini 3 over the next few weeks, and I’ll continue to post my observations here, along with tips and tricks that I either find online or figure out. Watch this space!