It’s been a week since Gemini 3 has come out, and I’ve been hearing some really good things about it. So I’m trying out Google’s “try Gemini 3 Pro for free for a month” offer and putting it to as much use as the account will allow, and I’m posting my findings here.

What does it mean for an AI model to be multimodal?

Multimodal, in the AI sense of the word, means “capable of understanding and working with multiple types of information, such as text, images, audio, and video.” We humans are multimodal, and we’re increasingly expecting AIs to be multimodal too.

The payoff that comes from an AI model being multimodal is flexibility and naturalness. There are times when it’s better to provide a picture instead of trying to type a description of that picture, or give the model a recording of a discussion rather than transcribing it first.

Gemini 3 is natively multimodal

The marketing and developer relations teams behind AI models will claim that their particular product is multimodal, but a number of them are only indirectly that way.

Many models are strictly language models (the second “L” in “LLM”). These models use other models to translate non-text information to text, including models that “see” an image and generate text to describe it, and then use that text as the input. This approach works, but as you might have guessed, a lot gets lost in the translation. The loss is even greater with video.

Gemini 3 is different, since it’s natively multimodal. That means that it processes text, images, audio, video, and code simultaneously as a single stream of information, and without translating non-text data into text first.

How to use Gemini 3’s multimodality

1. Coding multimodally

Because Gemini 3 processes all inputs together, it can reason across them in ways other models can’t. For example, when you upload a video, it can analyze the audio tone against the facial expressions in the video while cross-referencing a PDF transcript of that video.

This opens a lot of interesting possibilities, but I thought I’d go with a couple of ideas I want to try out when coding:

- On-brand user interfaces: I could upload a hand-drawn sketch or a screenshot of a website I liked and say: “Build a functional React front end that captures this ‘look,’ but uses my company’s brand colors, which are defined in this style guide PDF.”

-

Multimedia debugging: When debugging with a text-first AI model, I’d simply give it the error log. But with a multimodal model like Gemini 3, I could provide additional info for more context, including:

- The code

- The raw server logs

-

A screen recording of the application that includes the moment the bug rears its ugly head, I could even ask: “Locate the specific line of code in File A that’s causing the visual glitch at 0:15 in the video.”

2. Get multimodal answers

Here’s one straight out of science fiction. Instead of asking for text answers, try asking for an answer that includes text, graphics, and interactivity.

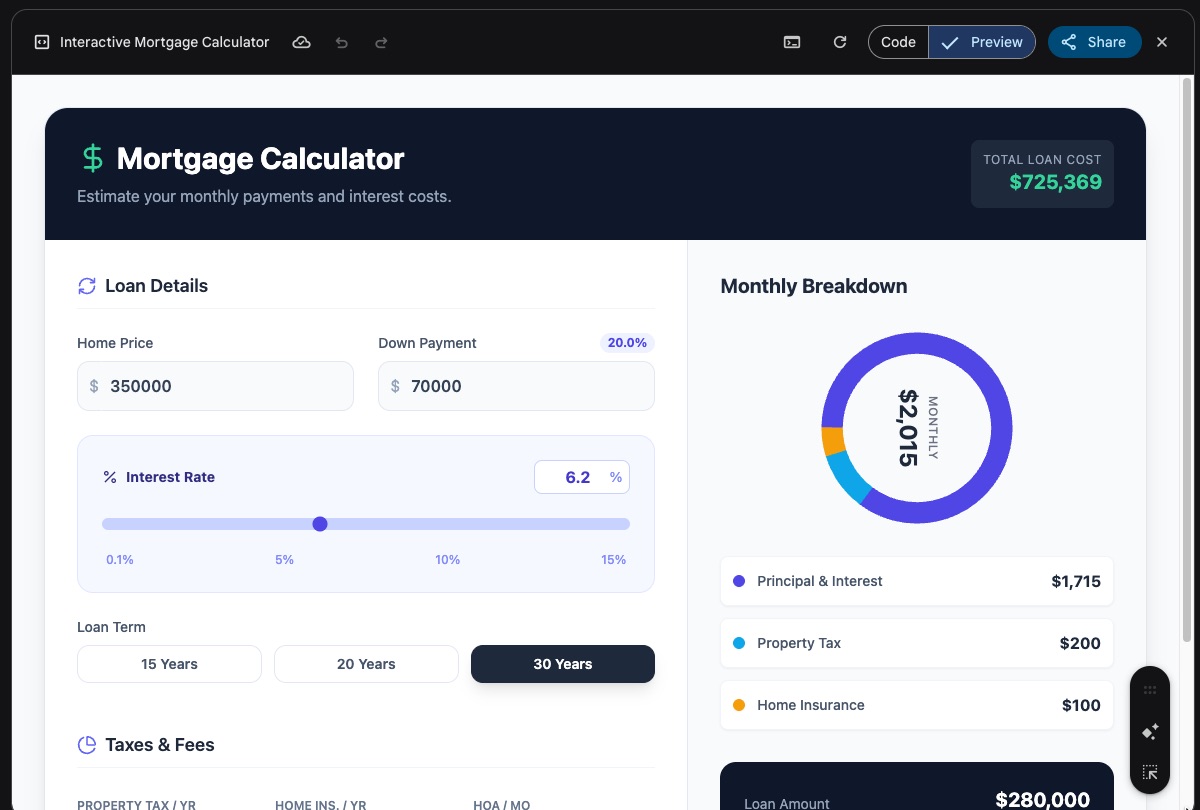

For example, I gave it this prompt:

Don’t just explain mortgage rates. Code me an interactive loan calculator that lets me slide the interest rate and see the monthly cost change in real time.

It got to work, and in about half a minute, it generated this app…

…and yes, it worked!

3. Using multimodality and Gemini 3’s massive context window

In my previous article on Gemini 3, I said that it had a context window of 1 million tokens. When you combine that with multimodality, you get a chaos crushing machine. You don’t have to “clean” your data before hading it over to the model anymore!

-

The haystack search: Upload an entire hour-long lecture video, three related textbooks, and your messy lecture notes, and then prompt it with: “Create a study guide that highlights the 5 concepts from the video that are NOT covered in the textbooks.”

-

Archiving in multiple languages: Upload handwritten recipes or letters in different languages. Gemini can decipher the handwriting, translate it, and format it into a digital cookbook or archive. For a cross-cultural family like mine, this could come in really handy.

Multimodal tips

To get the best results…

-

Name your inputs. Don’t say “look at the image.” Provide names for images when you upload them (“This is image A…”) and then refer to them when providing instructions (“Using image A…”).

-

Give it the info first, instructions last. When taking advantage of that huge context window, provide Gemini with all the data you want it to use first, and put your instructions at the end, after the data. Use a bridging phrase like “Based on the info above…”

-

With video, use timestamps. If there’s a part of the video that’s relevant to your instructions, refer to it by timestamp (e.g., “The trend visible at 1:45 in video B…”).