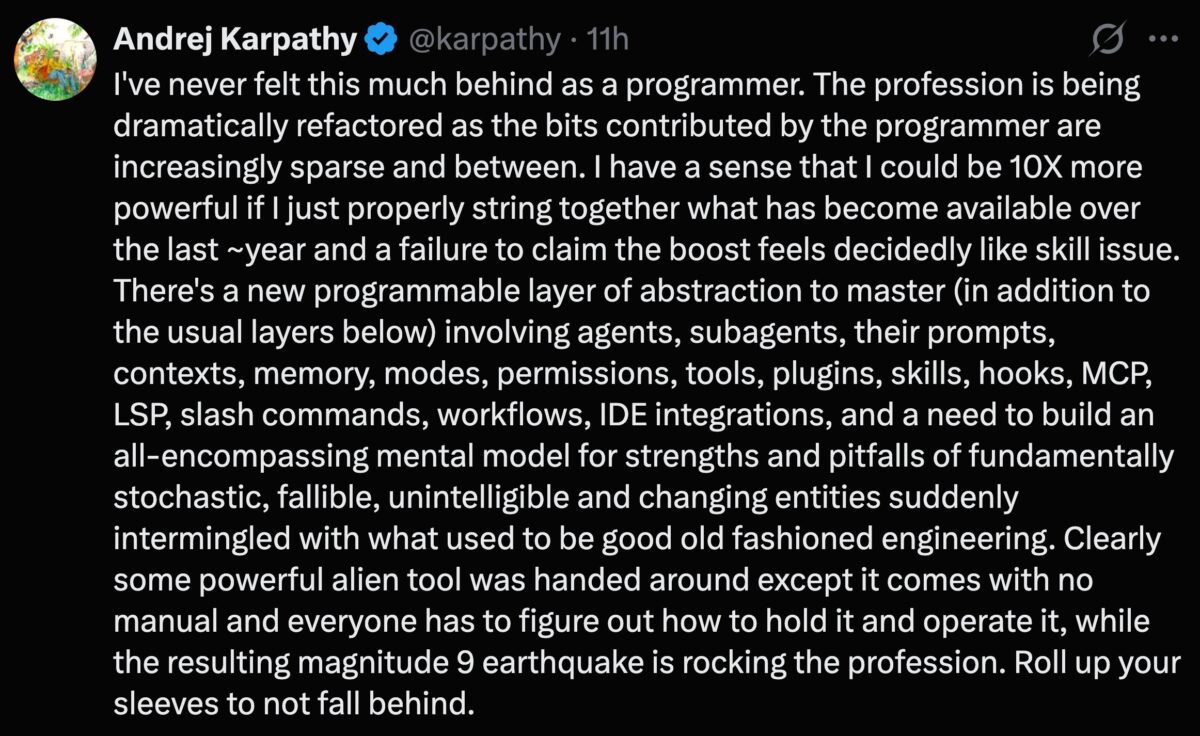

You’ve probably seen this tweet, written by none other than Andrej Karpathy, founding member of OpenAI, former director of AI at Tesla, and creator of the Zero to Hero video tutorial series on AI development from first principles:

I’ve never felt this much behind as a programmer. The profession is being dramatically refactored as the bits contributed by the programmer are increasingly sparse and between. I have a sense that I could be 10X more powerful if I just properly string together what has become available over the last ~year and a failure to claim the boost feels decidedly like skill issue. There’s a new programmable layer of abstraction to master (in addition to the usual layers below) involving agents, subagents, their prompts, contexts, memory, modes, permissions, tools, plugins, skills, hooks, MCP, LSP, slash commands, workflows, IDE integrations, and a need to build an all-encompassing mental model for strengths and pitfalls of fundamentally stochastic, fallible, unintelligible and changing entities suddenly intermingled with what used to be good old fashioned engineering. Clearly some powerful alien tool was handed around except it comes with no manual and everyone has to figure out how to hold it and operate it, while the resulting magnitude 9 earthquake is rocking the profession. Roll up your sleeves to not fall behind.

It would be perfectly natural to react to this tweet like so:

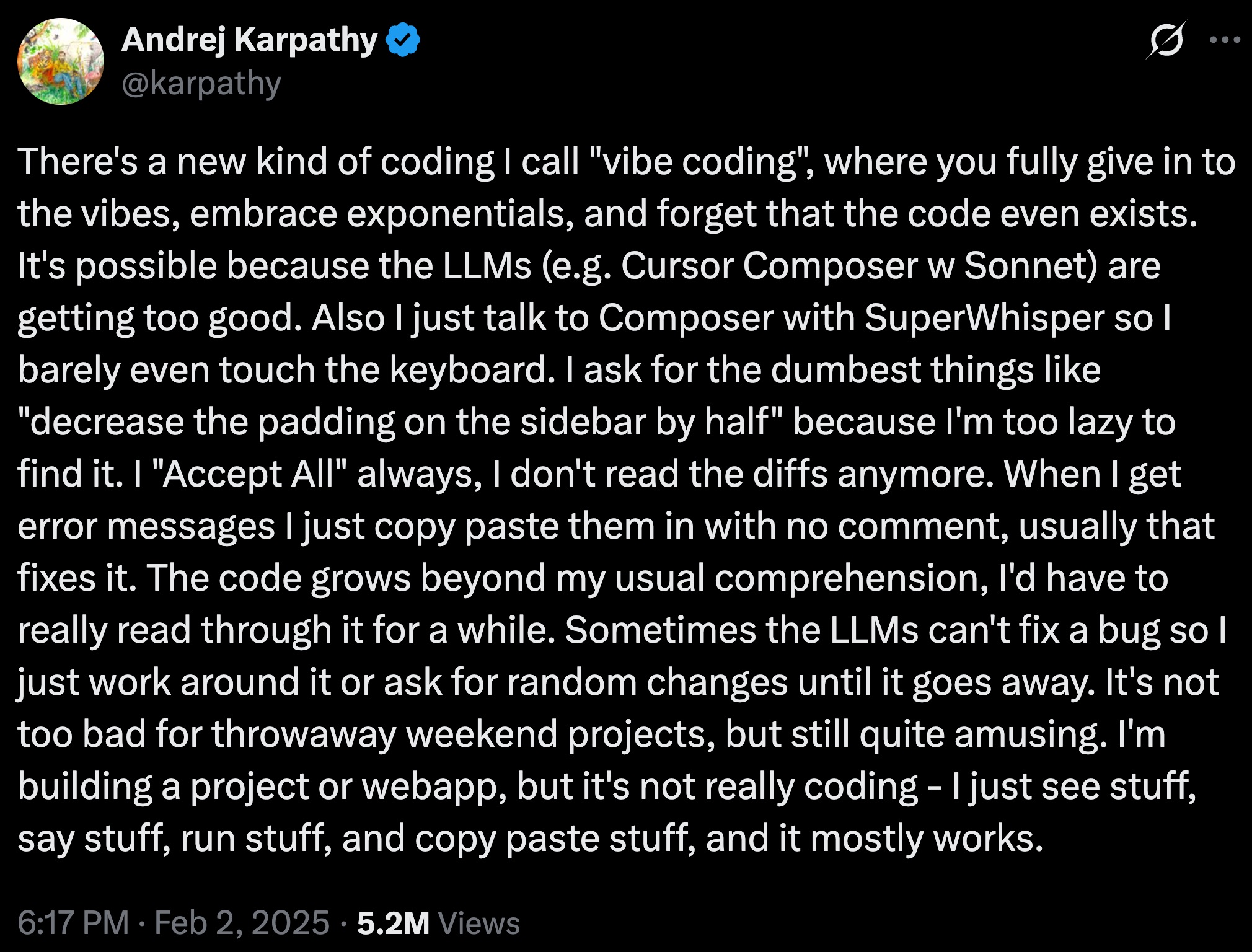

After all, this “falling behind” statement isn’t coming from just any programmer, but a programmer who’s been so far ahead of most of us for so long that he’s the one who coined the term vibe coding in the first place, and the term’s first anniversary isn’t until next month:

Karpathy’s post came at the end of 2025, so I thought I’d share my thoughts on it — in the form of a “battle plan” for how I’m going to approach AI in 2026.

It has eight parts, listed below:

- Accept “falling behind” as the new normal. Learn to live with it and work around it.

- Understand that our sense of time has been altered by recent events.

- Forget “mastery.” Go for continuous, lightweight experimentation instead.

- Less coding, more developing.

- Yes, AI is an “alien tool,” but what if that alien tool is a droid instead of a probe?

- Other ideas I’m still working out

- This may be the “new normal” for Karpathy, but it’s just the “same old normal” for my dumb ass.

- The difference between an adventure and an ordeal is attitude.

1. Accept “falling behind” as the new normal. Learn to live with it and work around it.

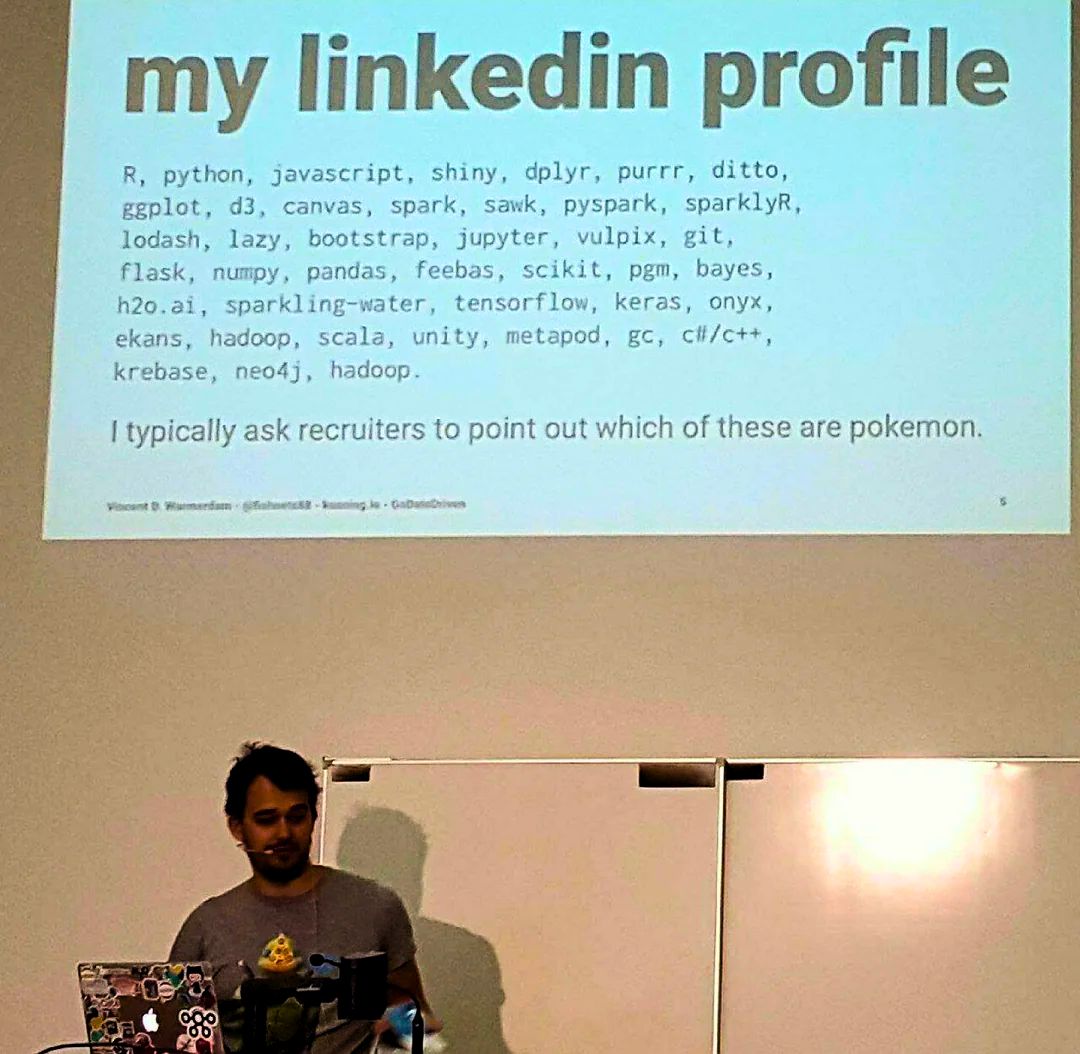

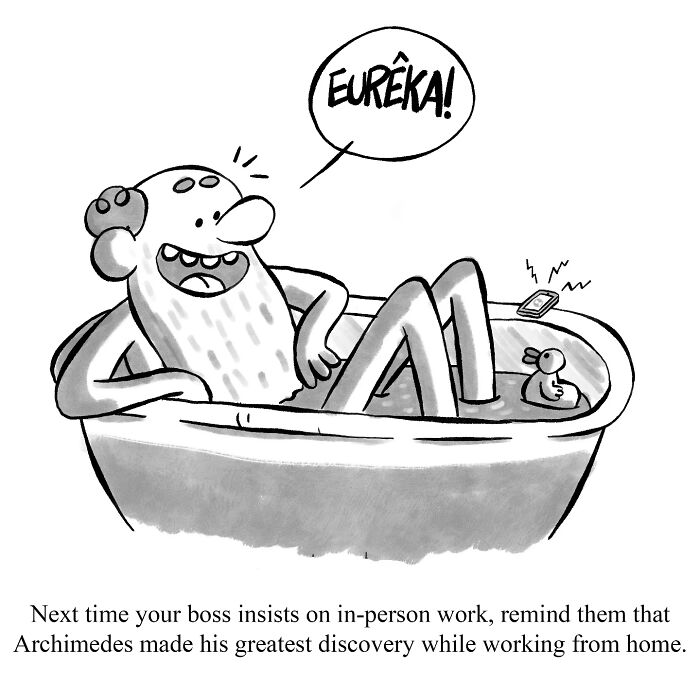

Even before the current age of AI, tech was already moving at a pretty frantic pace, and it was pretty hard to keep up. That’s why we make jokes like the slide pictured above, or the Pokemon or Big Data? quiz.

As a result, many people make the choice between “going deep” and specializing in a few things or “going wide” and being a generalist with a little knowledge over many areas. While the approaches are quite different, they have one thing in common: they’re built on the acceptance that you can’t be skilled at everything.

With that in mind, let me present you with an idea that might seem uncomfortable to some of you: Accept “falling behind” as the new normal. Learn to live with it and work around it.

A lot of developers, myself included, accepted being “behind” as our normal state of affairs for years. We’re still in the stone ages of our field — the definition of “computable” won’t even be 100 years old until the next decade — so we should expect changes to continue to come at a fast and furious pace during our lifetimes.

Don’t think of “being behind” as being a personal failing, but as a sensible, sanity-preserving way of looking at the tech world.

It’s a sensible approach to the world that Karpathy describes, which is a firehose of agents, prompts, and stochastic systems, all of which lack established best practices, mature frameworks, or even documentation. If you’re feeling “current” and “on top of things” in the current AI era, it means you don’t understand the situation.

That feeling of playing perpetual “catch-up”? That’s proof you are actively playing on the new frontier, where the map is getting redrawn every day. It means you’ve got a mindset suited for the current Age of AI.

2. Understand that our sense of time has been altered by recent events.

I think some of Karpathy’s feeling comes from how the pandemic and lockdowns messed up our sense of time. Events from years ago feel like they just happened, and events from months in the past feel like a lifetime ago.

AI — and once again, I’m talking about AI after ChatGPT — sometimes feels like it’s been around for a long time, but it’s still a recent development.

“How recent?” you might ask.

Think of it this way: the non-D&D playing world was introduced to Vecna through season 4 of Stranger Things months before it was introduced to ChatGPT.

Need more perspective? Here are more things that “just happened” that also predate the present AI age:

- The Russia/Ukraine War started in February 2022, over 9 months before ChatGPT.

- Elon Musk made his $44 billion offer to buy Twitter in April 2022, tried to back out in July, and, after being forced by court intervention, bought it in October, which means all that drama happened before ChatGPT.

- The FBI executed its search warrant at Donald Trump’s Mar-a-Lago residence in August 2022, seizing at least 300 classified government documents and 48 empty folders labeled “classified,” 3 months before ChatGPT.

- These superhero movies were released in 2022, and all of them predate ChatGPT:

  - Doctor Strange in the Multiverse of Madness (May)

  - Thor: Love and Thunder (June)

  - Black Panther: Wakanda Forever (October)

  - Black Adam (October)

- The Will Smith / Chris Rock slap happened in March, 8 months before ChatGPT.

(Just recalling “the slap” made me think “Wow, that was a while back.” In fact, I get the feeling that the only person who remembers it as if it happened yesterday is Chris Rock.)

All these examples are pretty recent news, and they all happened before that fateful day — November 30, 2022 — when ChatGPT was unleashed on an unsuspecting world.

3. Forget “mastery.” Go for continuous, lightweight experimentation instead.

Accepting “behind” as the new normal turns any anxiety you may be feeling into a strategic advantage. It changes your mindset to one where you embrace continuous, lightweight experimentation rather than mastery. You know, that “growth mindset” thing that Carol Dweck keeps going on about.

Put your energy into the skill of learning and critically evaluating new tools and techniques that are subject to change, rather than into gathering static domain knowledge that you think will be timeless.

We’re emerging from an older era where code was scarce and expensive. It used to take time and effort (and as a result, money) to produce code, which is why a lot of software engineering is based on the concept of code reuse and why older-school OOP developers are hung up on the concept of inheritance. Now that AI can generate screens of code in a snap, we’re going to need to change the way we do development.

My 2026 developer strategy will roughly follow these steps:

- Embracing ephemeral code: I’m adopting the mindset of “post code-scarcity” or “code abundance.” I’ll happily fire up `$TOOL_OF_THE_MOMENT` and have it generate lots of lines of code that I won’t mind deleting after I’ve gotten what I need out of it. The idea is to drive the “cost” of experimentation down to zero, which means I’ll do more experimenting.

- Try new things, constantly, but not all at once: My plan is to dedicate a week or two to one thing and experiment with it. Examples:

  - First week or so: Prompts, especially going beyond basic instructions. Play with few-shot prompting, chain-of-thought, and providing context. Look at r/ChatGPTPromptGenius/ and similar places for ideas.

  - Following week or so: Agents. Build a simple agent using a guide or framework. Understand its core components: reasoning, tools, and memory.

  - Week or so after that: Tools and integrations. Give an agent the ability to search the web, call an API, or write to a file.

- Learn by teaching and building: Active learning is the most efficient learning! Building something and then showing others how to build it is my go-to trick for getting good at some aspect of tech, and it’s why this blog exists, why the Tampa Bay Tech Events List exists, why the Global Nerdy YouTube channel exists, and why I co-organize the Tampa Bay AI Meetup and Tampa Bay Python.
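To make the “agents” week a little more concrete, here’s a minimal sketch of the three components mentioned above (reasoning, tools, and memory) in plain Python. Everything in it is a stand-in I made up for illustration: `fake_model` is a hard-coded rule where a real agent would call an LLM, and the two tools are toy functions rather than web search or API calls.

```python
from dataclasses import dataclass, field
from typing import Callable


def add_numbers(text: str) -> str:
    """Toy tool: add whitespace-separated numbers, e.g. '2 3' -> '5.0'."""
    return str(sum(float(part) for part in text.split()))


def word_count(text: str) -> str:
    """Toy tool: count the words in a piece of text."""
    return str(len(text.split()))


def fake_model(task: str, memory: list[str]) -> tuple[str, str]:
    """Hypothetical stand-in for the reasoning step (a real agent calls an LLM).

    Returns (action, argument), where action is a tool name or 'finish'.
    """
    if memory:  # a tool has already run, so report its result and stop
        return "finish", memory[-1]
    if task.startswith("add "):
        return "add_numbers", task.removeprefix("add ")
    return "word_count", task


@dataclass
class Agent:
    tools: dict[str, Callable[[str], str]]
    memory: list[str] = field(default_factory=list)

    def run(self, task: str, max_steps: int = 5) -> str:
        for _ in range(max_steps):
            action, argument = fake_model(task, self.memory)  # reasoning
            if action == "finish":
                return argument
            observation = self.tools[action](argument)  # tool use
            self.memory.append(observation)  # memory
        return "gave up"  # step limit reached without a final answer


agent = Agent(tools={"add_numbers": add_numbers, "word_count": word_count})
print(agent.run("add 2 3"))  # prints 5.0
```

Swapping `fake_model` for a real model call, and the toy tools for something that touches the outside world, is more or less the week-two and week-three homework.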

4. Less coding, more developing.

I used to laugh at this scene from Star Trek: Voyager, but damn, it’s pretty close to what we can do now…

Your enduring value as a developer or techie is going to move “up the stack,” away from remembering minutiae like API call parameter order and syntax and towards “big picture” things like system design, architectural judgment, and the critical oversight and quality control required to pull together AI components that are stochastic and fundamentally unpredictable.

That “behind” feeling? That’s the necessary friction for keeping your footing while climbing the much taller ladder that development has become. Your expertise is less about for loops and design patterns and more about system design, problem decomposition, and even taste. You’re more focused on solving the users’ problems (and therefore, operating closer to the user) and providing what the AI can’t.

Worry less about specific tools, and more about principles. Specific tools — LangChain and LlamaIndex, I’m lookin’ right at you — will change rapidly. They may be drastically different or even replaced by something else this time next year! (Maybe next month!) Focus on understanding the underlying principles of agentic reasoning, prompt engineering, and (ugh, this term drives me crazy, and I can’t put my finger on why) — “workflow orchestration.”

Programming isn’t being replaced. It’s being refactored. The developer’s role is becoming one of a conductor or integrator, writing sparse “glue code” to orchestrate powerful, alien AI components. (Come to think of it, that’s not all too different from what we were doing before; there’s just an additional layer of abstraction now.)

5. Yes, AI is an “alien tool,” but what if that alien tool is a droid instead of a probe?

Let’s take a closer look at the last two lines of Karpathy’s tweet:

Clearly some powerful alien tool was handed around except it comes with no manual and everyone has to figure out how to hold it and operate it, while the resulting magnitude 9 earthquake is rocking the profession. Roll up your sleeves to not fall behind.

First, let me say that I think Karpathy’s choice of “alien tool” as a metaphor is at least a little bit colored by the Silicon Valley mentality of disruption: that tech is for doing things to fields, industries, or people instead of for them. There’s also the popular culture portrayal of alien tools, and I think he feels he’s been getting the “alien tools doing things to me” feeling lately:

But what if that “alien tool” is something that we can converse with? What if we reframe “alien tool” to…“droid”?

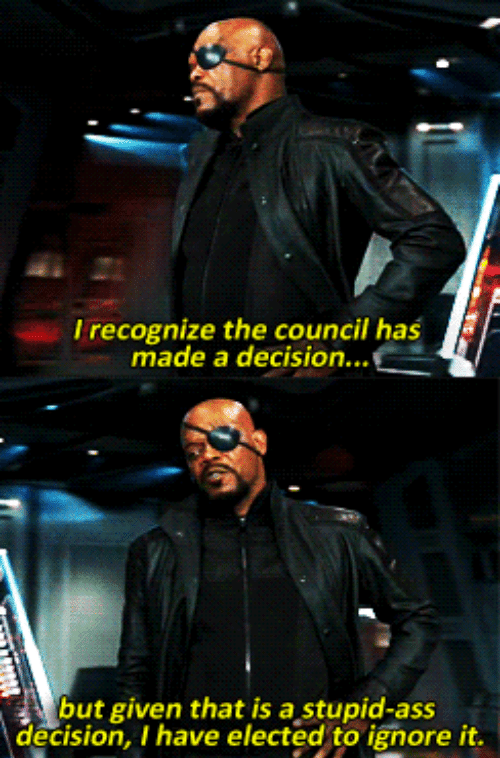

Unlike an interpreter or compiler that gives one of those classic cryptic error messages or a framework or library with fixed documentation, you can actively interrogate AI, just like you can interrogate a Star Wars droid (or even the Millennium Falcon’s computer, which is a collection of droids). When an AI generates code that you can’t make sense of, you’re not left to reverse-engineer its logic unassisted. You can demand an explanation in plain language. You can ask it to walk through its solution step by step or challenge its choices (and better yet, do it Samuel L. Jackson style):

This transforms debugging and learning from a solitary puzzle into a dialogue. This is the real-life version of droids in Star Wars and computers in Star Trek! Ask whatever AI tool you’re using to refactor its code for readability, make it give you the “Explain it as if I’m a junior dev” walkthrough of a complex algorithm, or debate the trade-offs between two architectural approaches. Turn AI into a tireless, on-demand pair programmer and tutor!
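If you find yourself asking the same kinds of follow-up questions over and over, you might keep them around as reusable templates. Here’s a rough sketch of that idea; the template wording, the `FOLLOW_UPS` dictionary, and the `build_follow_up` helper are all my own inventions, not part of any particular tool.

```python
# Hypothetical follow-up prompts for interrogating AI-generated code.
FOLLOW_UPS = {
    "explain": (
        "Explain the following code as if I'm a junior dev, "
        "step by step:\n\n{code}"
    ),
    "refactor": (
        "Refactor the following code for readability and list "
        "what you changed and why:\n\n{code}"
    ),
    "tradeoffs": (
        "Compare the approach in the following code with {alternative}. "
        "What are the trade-offs?\n\n{code}"
    ),
}


def build_follow_up(
    kind: str, code: str, alternative: str = "a simpler alternative"
) -> str:
    """Fill in one of the templates; the result gets pasted or sent to the AI."""
    return FOLLOW_UPS[kind].format(code=code, alternative=alternative)


snippet = "def f(xs): return sorted(set(xs))"
print(build_follow_up("explain", snippet))
print(build_follow_up("tradeoffs", snippet, alternative="keeping duplicates"))
```

The helper only builds the text. Where you send it (a chat window, an IDE assistant, an API call) depends on whichever tool you’re using this month.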

By reframing AI not as an alien tool, but as a Star Wars droid (or if you prefer, Star Trek computer), you can change the pace at which you can understand and manage systems. Unfamiliar libraries and cryptic errors are no longer major show-stoppers, but speed bumps that you can overcome with a Socratic dialogue to build your understanding. The AI-as-droid approach allows you to rapidly decompose and reconstruct the AI’s own output, turning its stochastic suggestions into knowledge and understanding that you can carry forward.

In the end, you’re moving from merely accepting or rejecting its code to using conversation to get clarity, both in the AI’s output and in your own mental model. By treating AI not as an alien probe but as a droid, the “alien tool” becomes less alien through dialogue. The terra incognita of this new age won’t be navigated by a map, but by directed exploration with the assistance of a local guide you can question at every turn.

6. Other ideas I’m still working out

Like “Todd” from BoJack Horseman, I’m still working out some ideas. I’ve listed them here so that you can get an advance look at them; I expect to cover them in upcoming videos on the Global Nerdy YouTube channel.

These ideas, taken together, are a call not to blindly climb the AI hype curve, but to develop a sophisticated, expert-level understanding of its limits. I hope to make them the basis of a structured way to conduct that research without falling into the “Mount Dumbass” of overconfidence.

- Your tech / developer expertise is the guardrail. I believe that knowledge is the antidote to AI’s Dunning-Kruger effect. What you bring to the table in the Age of AI is critical evaluation, debugging, and architectural oversight. AI generates candidates; you approve or reject them, or, to quote Nick Fury…

- Adopt a skeptical, experimental stance. Let’s follow Karpathy’s own method: try the new tools on non-critical projects. When they fail (as he said they did for nanochat), analyze why they failed. This hands-on experience with failure builds the accurate mental model he described as lacking.

- Focus on understanding AI “psychology.” Understand the new stochastic layer provided by AI not to worship it, but to debug it (please stop treating AI like a god). Learn about prompts, context windows, and agent frameworks so you can diagnose why an AI produces bad code or an agent gets stuck. This turns a weakness into a diagnosable system.

- Prioritize team and talent dynamics: You’ll hear and read lots of stories and articles warning of talent leaving as a result of AI. If you’re in a leadership or decision-making role, focus on creating an environment where critical thinking about tools is valued over blind adoption. Trust your team, and protect their “state of flow” and their deep work.
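The “AI generates candidates; you approve or reject them” idea from the first item above can be sketched in a few lines. This is only an illustration under my own assumptions: the two `candidate_*` functions stand in for AI-generated attempts at a “slugify a title” task, and the `checks` list stands in for the expectations you, the developer, bring to the table.

```python
from typing import Callable


def approve(candidate: Callable[[str], str], checks: list[tuple[str, str]]) -> bool:
    """Approve a candidate only if it passes every developer-written check."""
    for given, expected in checks:
        try:
            if candidate(given) != expected:
                return False
        except Exception:
            return False  # a crash counts as a rejection
    return True


# Two hypothetical AI-generated candidates for "turn a title into a slug".
def candidate_a(title: str) -> str:
    return title.lower().replace(" ", "-")


def candidate_b(title: str) -> str:
    return "-".join(title.lower().split())


# The guardrail: cases the developer knows matter, including an awkward one.
checks = [
    ("Hello World", "hello-world"),
    ("  Extra   spaces ", "extra-spaces"),
]

approved = [c.__name__ for c in (candidate_a, candidate_b) if approve(c, checks)]
print(approved)  # prints ['candidate_b']
```

Both candidates look fine at a glance. It’s the awkward second check, the kind of case experience teaches you to ask about, that separates them.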

7. This may be the “new normal” for Karpathy, but it’s just the “same old normal” for my dumb ass.

Maybe it’s because he’s made some really amazing stuff that he’s surprised that the wave of change that it brought about has come back to bite him. For most of the rest of us — once again, that includes me — we’ve always been trying our level best to keep up, and doing what we can to manage our tiny corners of the tech world.

The take-away here is that if the guy who helped make vibe coding a reality and coined the term “vibe coding” is feeling a bit overwhelmed, we can take comfort that we’re not alone. Welcome to our club, Andrej!

8. The difference between an adventure and an ordeal is attitude.

Yes, it’s a lot. Yes, I’m overwhelmed. Yes, I’m trying to catch up.

But it’s also exciting. It’s a whole new world. It’s full of possibilities.

I’m outside my comfort zone, but that’s where the magic happens.