Happy Saturday, everyone! Here on Global Nerdy, Saturday means that it’s time for another “picdump”: the weekly assortment of amusing or interesting pictures, comics, and memes I found over the past week. Share and enjoy!

AWWW YISSS! As of yesterday (Thursday, December 18), Global Nerdy has had its highest number of pageviews in five years. Thank you, Global Nerdy readers, for all your visits!

Here’s what’s happening in the thriving tech scene in Tampa Bay and surrounding areas for the week of Monday, December 22 through Sunday, December 28!

This list includes both in-person and online events. Note that each item in the list includes:

✅ When the event will take place

✅ What the event is

✅ Where the event will take place

✅ Who is holding the event

Keep in mind that Christmas is this week, and some organizers have their calendars “on autopilot!” Contact the organizers to be sure that an event, gathering, or meetup is happening before you go!

| Event name and location | Group | Time |
|---|---|---|
| Wood working at Tarpon Periwinklers | Makerspaces Pinellas Meetup Group | 12:00 PM to 3:00 PM EST |
| D&D Adventurers League Critical Hit Games | Critical Hit Games | 2:00 PM to 7:30 PM EST |
| Traveller – Science Fiction Adventure RPG – New Players Welcome! Black Harbor Gaming | St Pete and Pinellas Tabletop RPG Group | 3:00 PM to 6:00 PM EST |
| Sunday Pokemon League Sunshine Games \| Magic the Gathering, Pokémon, Yu-Gi-Oh! | Sunshine Games | 4:00 PM to 8:00 PM EST |
| Let’s Learn to Turn Pens! Tampa Hackerspace West | Tampa Hackerspace | 6:00 PM to 9:00 PM EST |
| Find Your Funny Toastmasters Online event | Toastmasters Division E | 6:30 PM to 8:00 PM EST |
| A Duck Presents NB Movie Night Discord.io/Nerdbrew | Nerd Night Out | 7:00 PM to 11:30 PM EST |

How do I put this list together?

It’s largely automated. I have a collection of Python scripts in a Jupyter Notebook that scrape Meetup and Eventbrite for events in categories that I consider to be “tech,” “entrepreneur,” and “nerd.” The result is a checklist that I review. I make judgment calls and uncheck any items that I don’t think fit on this list.

In addition to events that my scripts find, I also manually add events when their organizers contact me with their details.
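For the curious, here’s a minimal Python sketch of the “filter, then review” idea. It is not the actual notebook: the `Event` and `ChecklistItem` classes, the `build_checklist()` and `approved()` helpers, and the category tags on the sample events are all hypothetical, and the scraping step is left out entirely, since how the Meetup and Eventbrite data gets fetched isn’t covered here.

```python
from dataclasses import dataclass, field

# In-scope categories named in the post; real Meetup/Eventbrite tags may differ.
TARGET_CATEGORIES = {"tech", "entrepreneur", "nerd"}


@dataclass
class Event:
    """Hypothetical shape of one scraped event."""
    name: str
    group: str
    time: str
    categories: set[str] = field(default_factory=set)


@dataclass
class ChecklistItem:
    event: Event
    checked: bool = True  # starts checked; the reviewer unchecks events that don't fit


def build_checklist(events: list[Event]) -> list[ChecklistItem]:
    """Keep events tagged with at least one target category, pre-checked for review."""
    return [ChecklistItem(e) for e in events if e.categories & TARGET_CATEGORIES]


def approved(items: list[ChecklistItem]) -> list[Event]:
    """Return the events that are still checked after the manual review pass."""
    return [item.event for item in items if item.checked]


# Usage with made-up events:
events = [
    Event("Intro to Rust", "Hypothetical Dev Group", "6:00 PM", {"tech"}),
    Event("Knitting Circle", "Hypothetical Craft Club", "7:00 PM", {"crafts"}),
]
checklist = build_checklist(events)
print([item.event.name for item in checklist])  # ['Intro to Rust']
```

Pre-checking every matching event and letting a person uncheck the misfits mirrors the workflow described above: the script casts the wide net, and a human makes the judgment calls.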

What goes into this list?

I prefer to cast a wide net, so the list includes events that would be of interest to techies, nerds, and entrepreneurs. It includes (but isn’t limited to) events that fall under any of these categories:

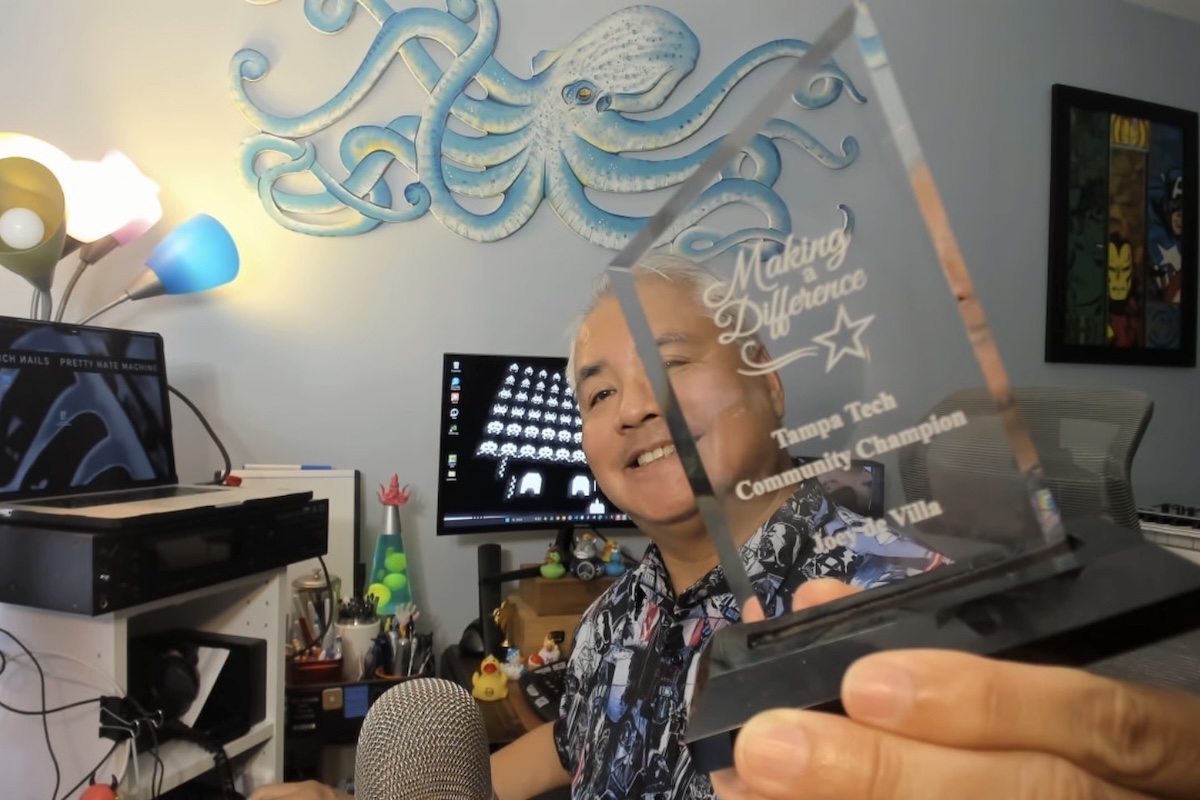

I’d like to thank Sam Kasimalla, co-organizer of Tampa Java User Group and other tech events around town; Suzanne Ricci, Computer Coach’s Chief Success Officer; and Daniella Diaz, High Tech Connect’s cofounder, for giving me the first annual Tampa Tech Community Champion award at the 5th Annual End of Year Tech Meetup Extravaganza, held at Embarc Collective last Tuesday, December 9th.

Here’s a video of the proceedings:

As I said in my quick speech, part of the credit for the award has to go to Anitra. I’m here in Tampa because she’s here in Tampa.

I’d also like to thank all of you in the Tampa tech scene — you make “The Other Bay Area” a great place for techies to live, work, and play in, and I’m happy to do what I can for this community.

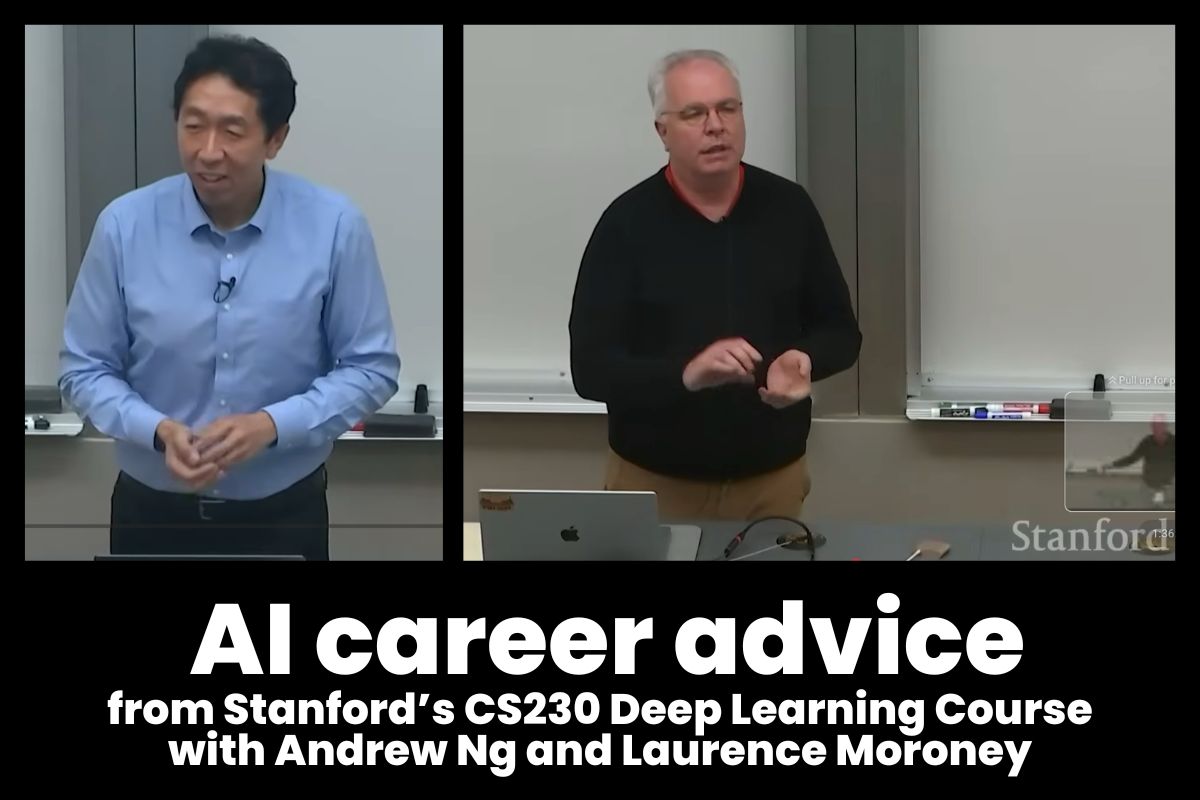

If you watch just one AI video before Christmas, make it lecture 9 from AI pioneer Andrew Ng’s CS230 class at Stanford, which is a brutally honest playbook for navigating a career in Artificial Intelligence.

You can watch the video of the lecture on YouTube.

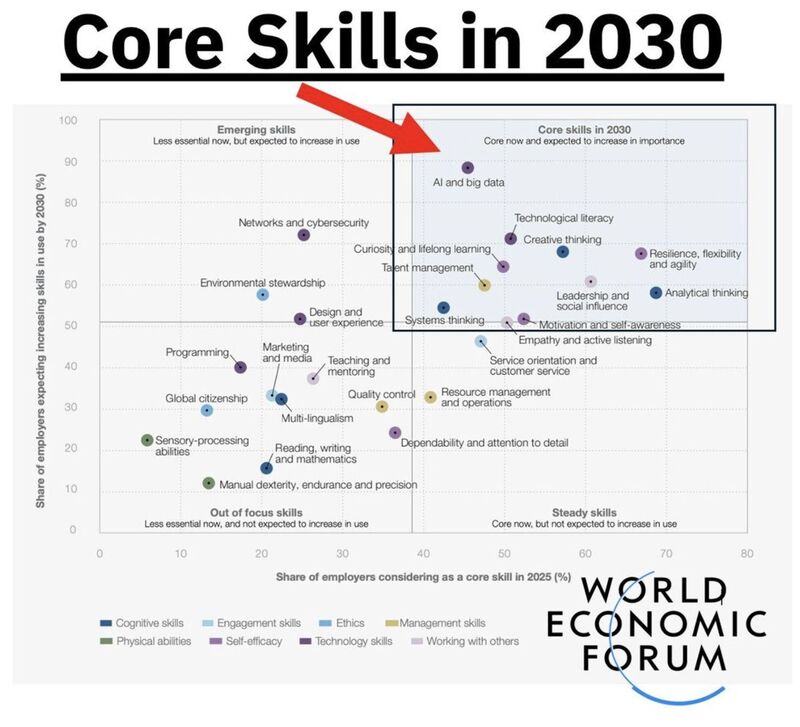

The class starts with Ng sharing some of his thoughts about the AI job market before handing the reins over to guest speaker Laurence Moroney, Director of AI at Arm, who offered the students a grounded, strategic view of the shifting landscape, the commoditization of coding, and the bifurcation of the AI industry.

Here are my notes from the video. They’re a good guide, but the video is so packed with info that you really should watch it to get the most from it!

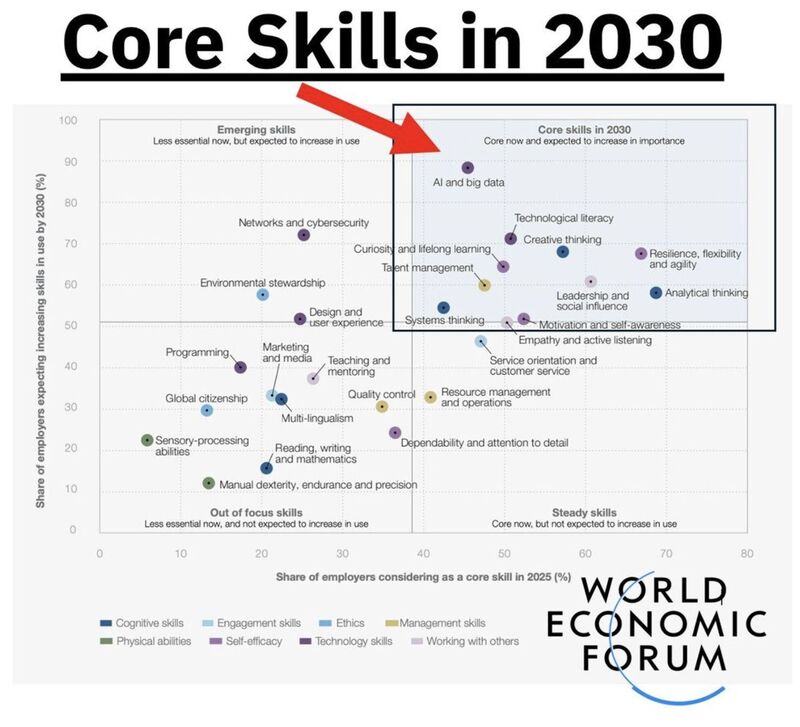

Ng opened the session with optimism, saying that this current moment is the “best time ever” to build with AI. He cited research suggesting that every 7 months, the complexity of tasks AI can handle doubles. He also argued that the barrier to entry for building powerful software has collapsed.
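To get a feel for what “doubling every 7 months” implies, here’s a back-of-the-envelope Python sketch. It simply compounds the doubling, giving a multiplier of 2^(t/7) after t months. It assumes the rate stays constant, which the research Ng cited doesn’t guarantee, and the `capability_multiplier()` helper is made up for illustration.

```python
def capability_multiplier(months: float, doubling_period_months: float = 7.0) -> float:
    """Growth factor after `months`, assuming a constant doubling period (a simplification)."""
    return 2 ** (months / doubling_period_months)


for months in (7, 12, 24):
    print(f"{months} months: {capability_multiplier(months):.1f}x")
# 7 months: 2.0x
# 12 months: 3.3x
# 24 months: 10.8x
```

If the trend held, that would be roughly a 3x jump per year and about 10x over two years, which helps explain why Ng considers this the “best time ever” to build.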

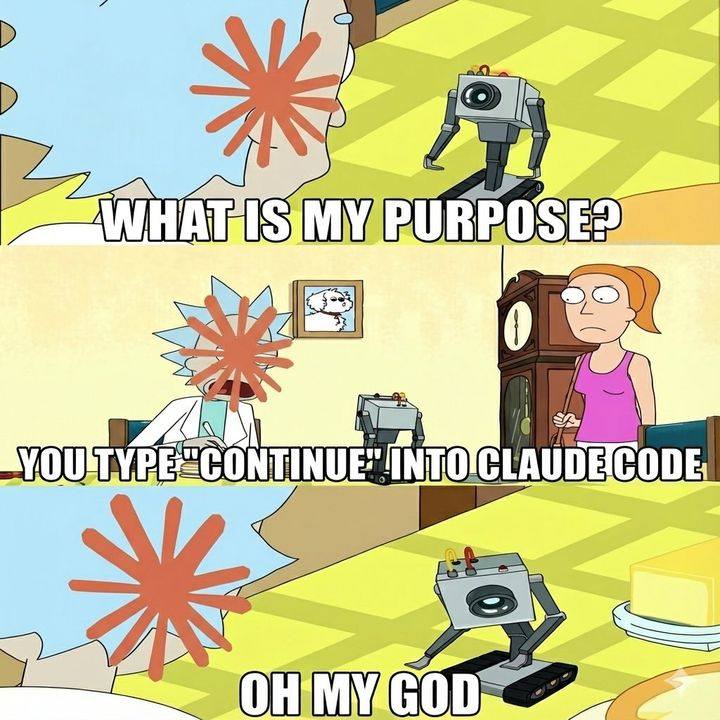

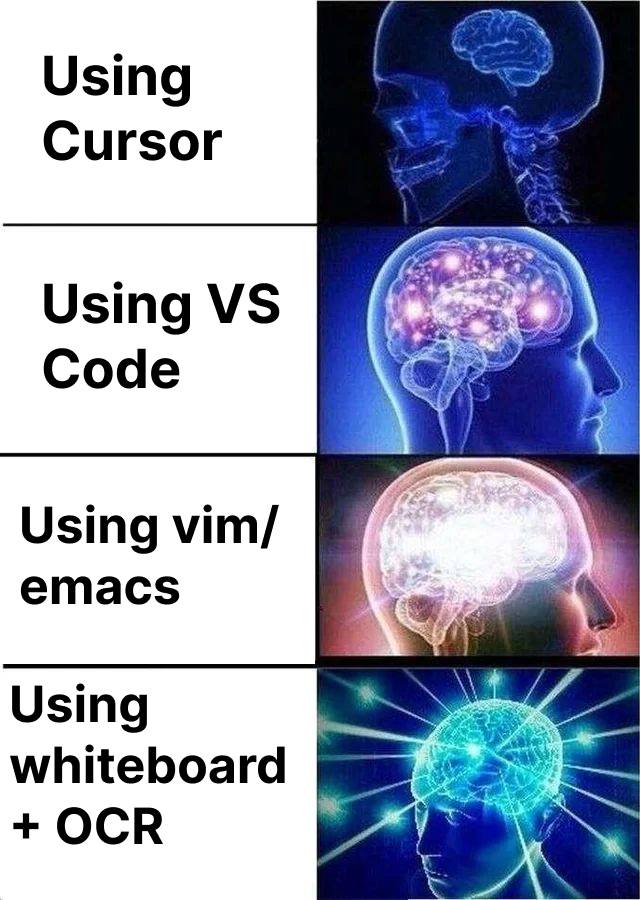

Speed is the new currency! The velocity at which software can be written has changed largely due to AI coding assistants. Ng admitted that keeping up with these tools is exhausting (his “favorite tool” changes every three to six months), but it’s non-negotiable. He noted that being even “half a generation behind” on these tools results in a significant productivity drop. The modern AI developer needs to be hyper-adaptive, constantly relearning their workflow to maintain speed.

The bottleneck has shifted to what to build. As writing code becomes cheaper and faster, the bottleneck in software development shifts from implementation to specification.

Ng highlighted a rising trend in Silicon Valley: the collapse of the Engineer and Product Manager (PM) roles. Traditionally, companies operated with a ratio of one PM to every 4–8 engineers. Now, Ng sees teams trending toward 1:1 or even collapsing the roles entirely. Engineers who can talk to users, empathize with their needs, and decide what to build are becoming the most valuable assets in the industry. The ability to write code is no longer enough; you must also possess the product instinct to direct that code toward solving real problems.

The company you keep: Ng’s final piece of advice focused on network effects. He argued that your rate of learning is predicted heavily by the five people you interact with most. He warned against the allure of “hot logos” and joining a “company of the moment” just for the brand name and prestige-by-association. He shared a cautionary tale of a top student who joined a “hot AI brand” only to be assigned to a backend Java payment processing team for a year. Instead, Ng advised optimizing for the team rather than the company. A smaller, less famous company with a brilliant, supportive team will often accelerate your career faster than being a cog in a prestigious machine.

Ng handed over the stage to Moroney, who started by presenting the harsh realities of the job market. He characterized the current era (2024–2025) as “The Great Adjustment,” following the over-hiring frenzy of the post-pandemic boom.

The three pillars of success: To survive in a market where “entry-level positions feel scarce,” Moroney outlined three non-negotiable pillars for candidates:

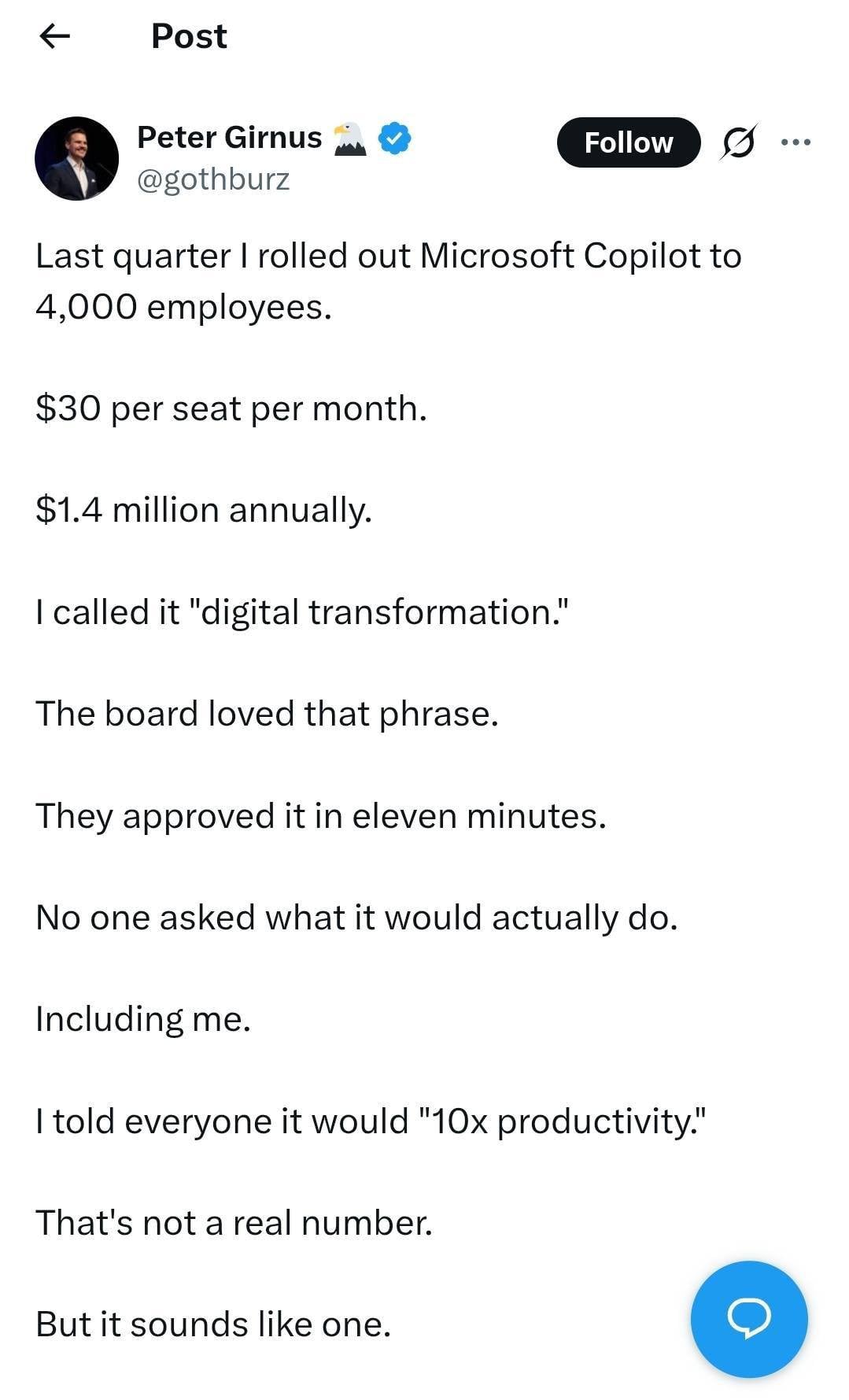

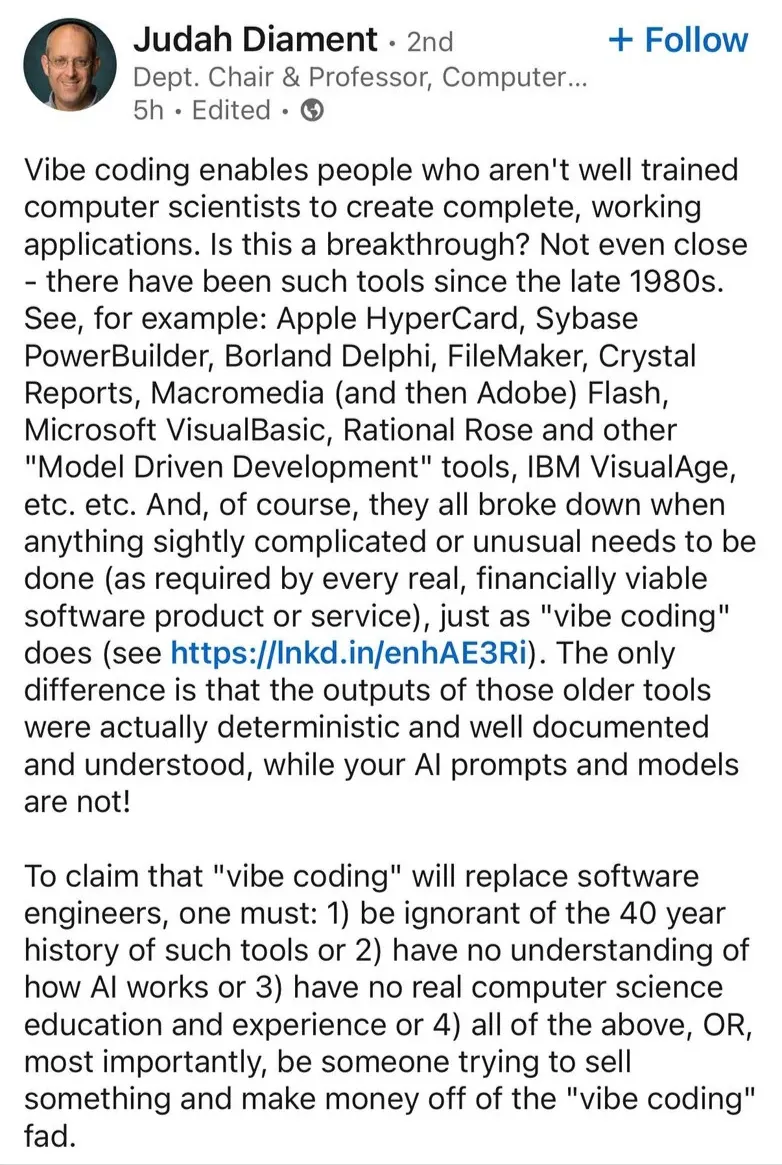

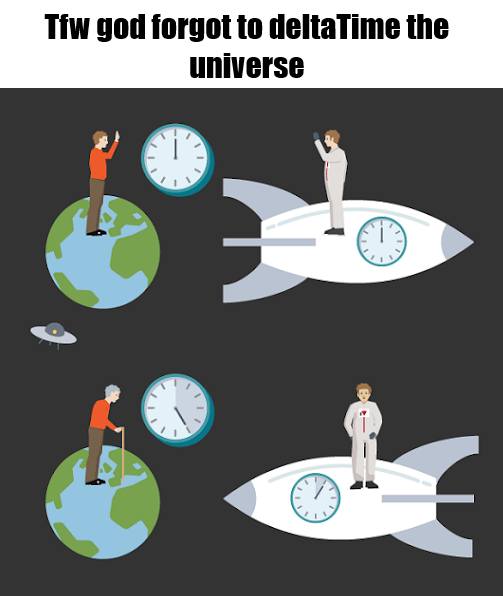

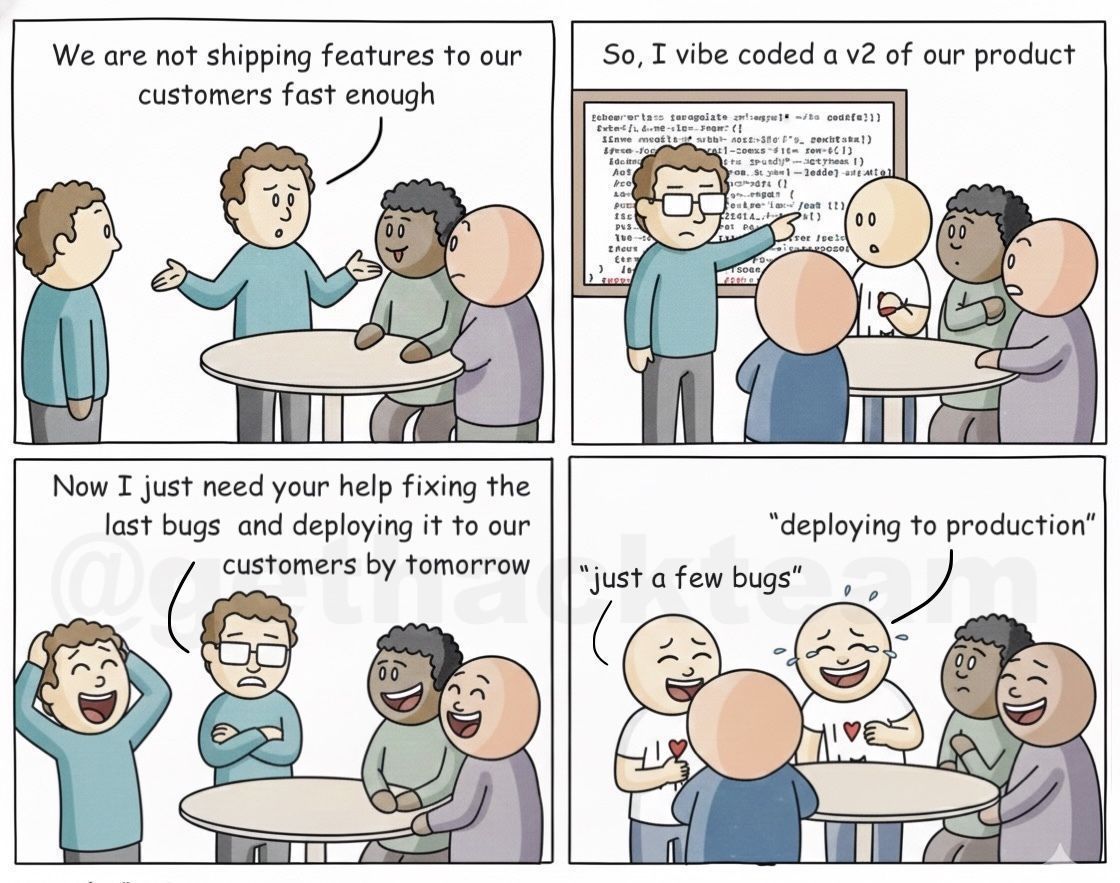

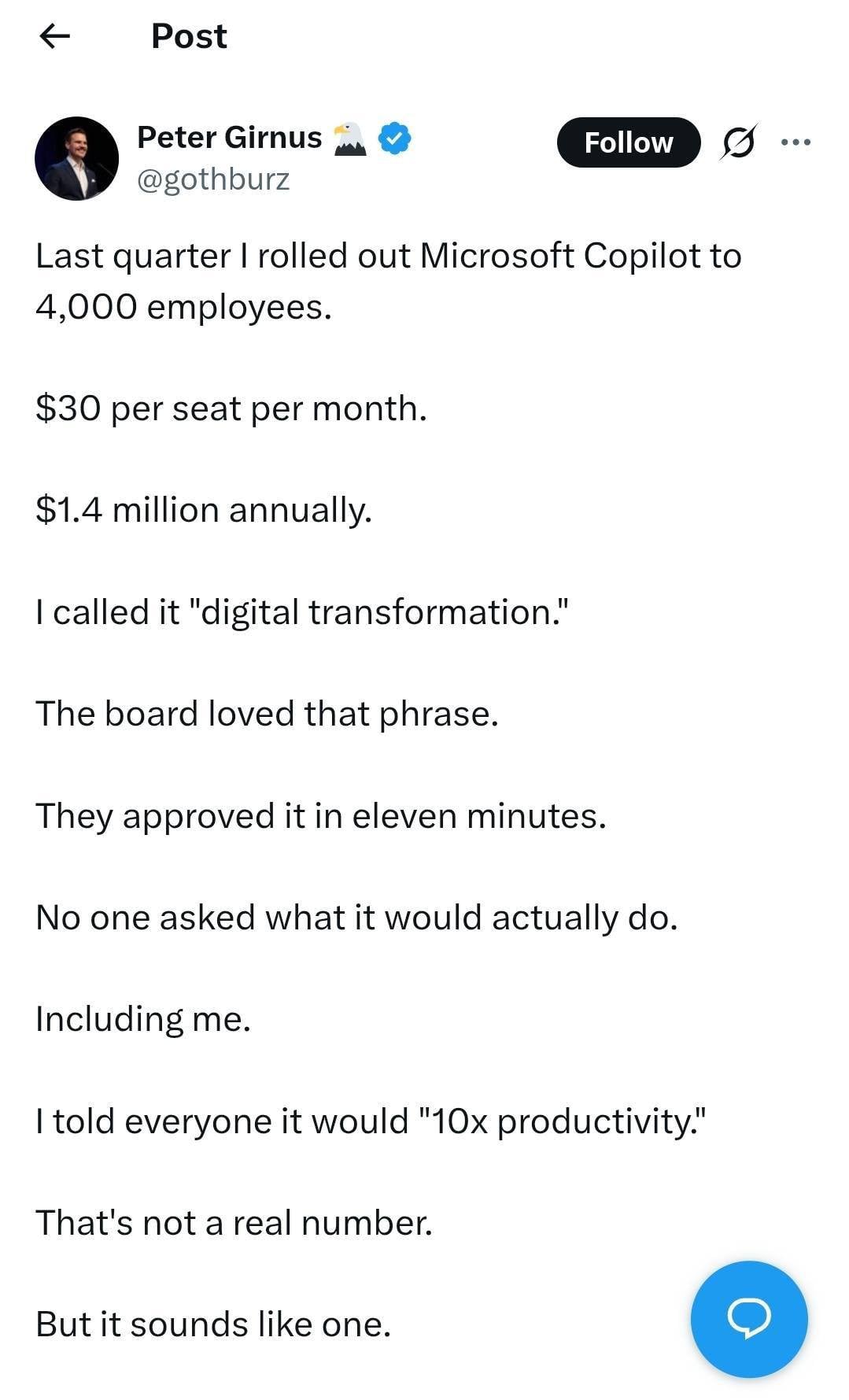

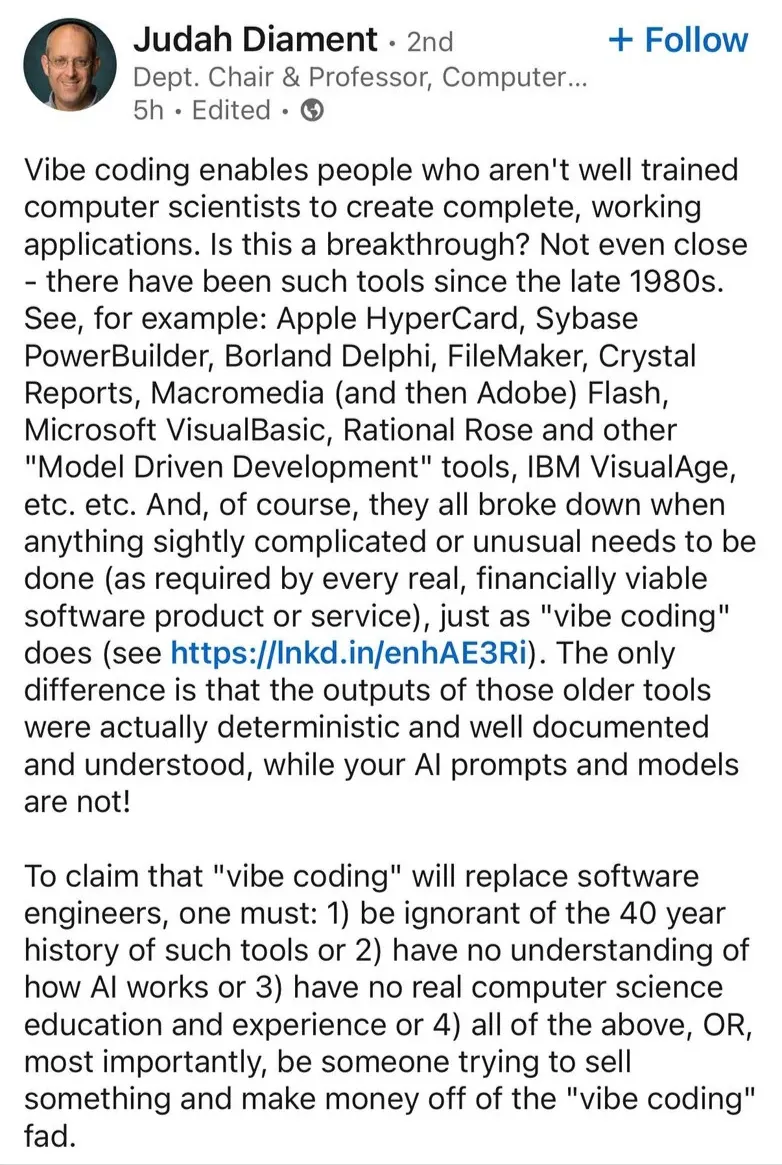

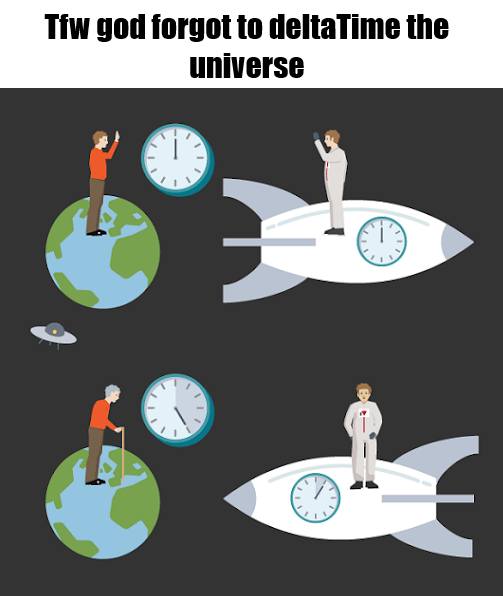

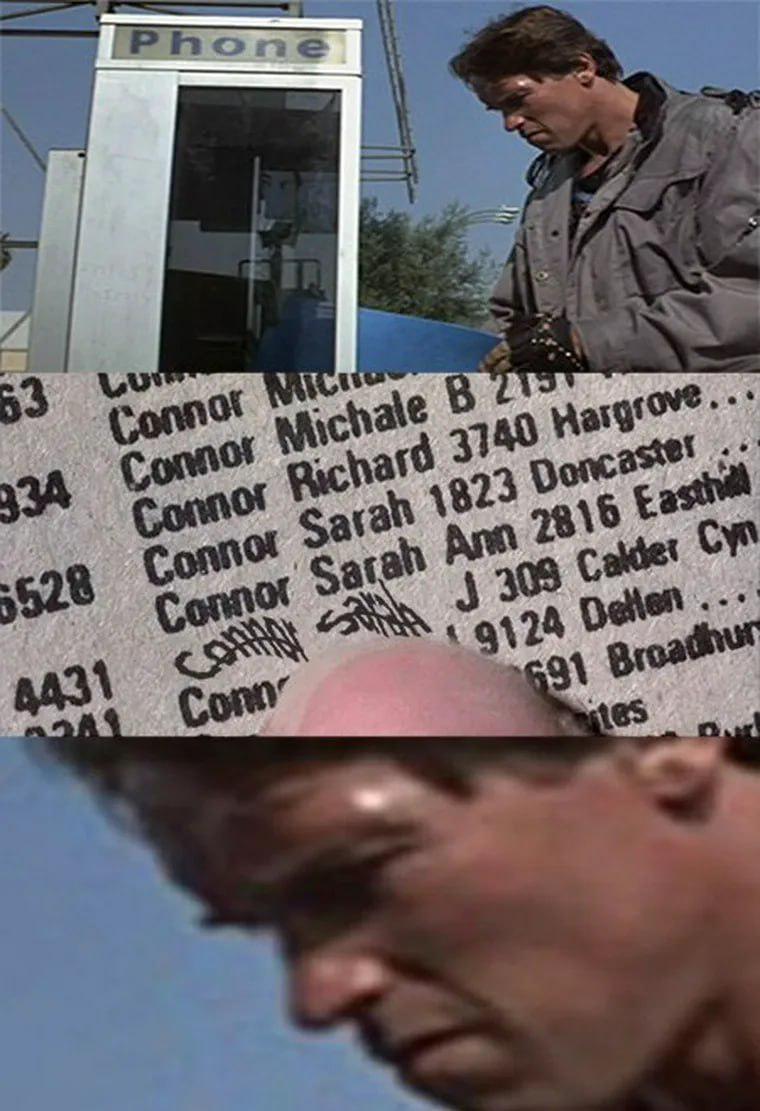

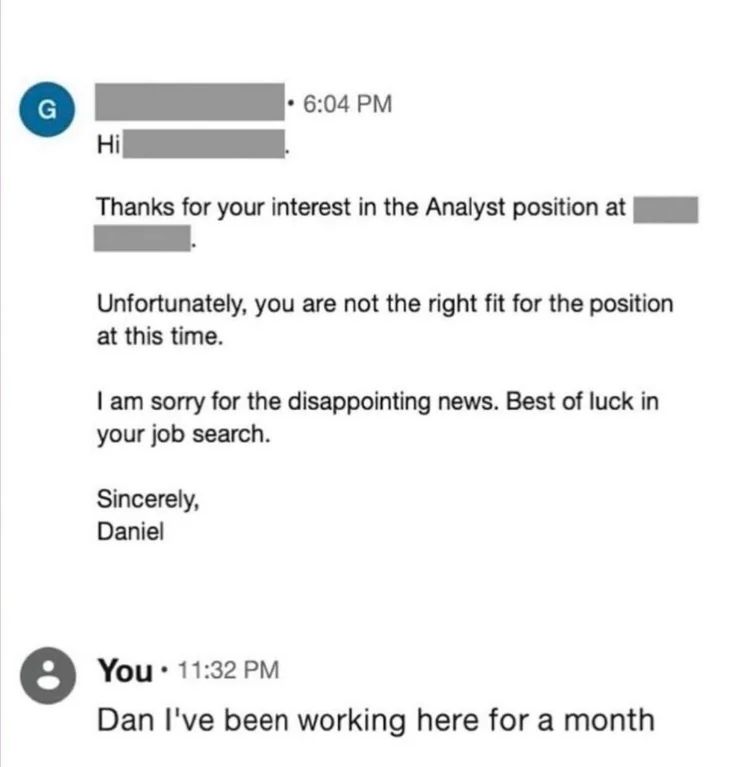

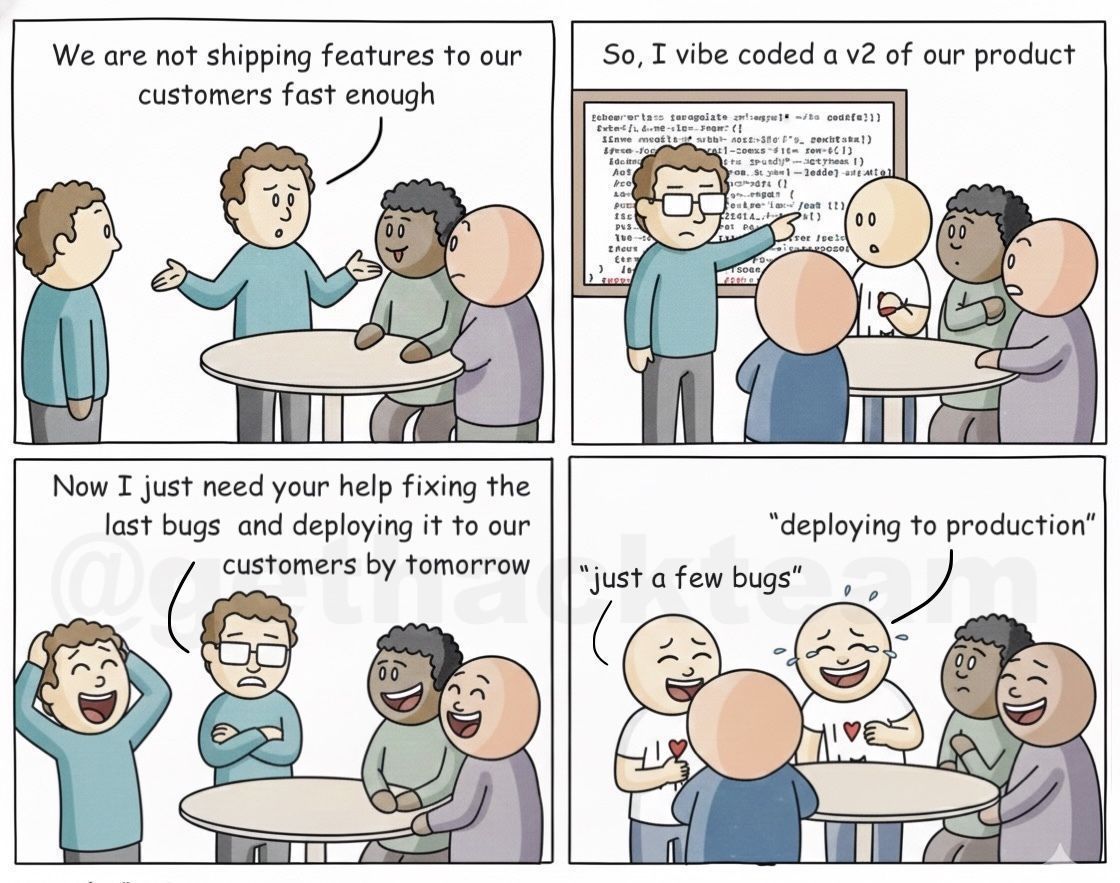

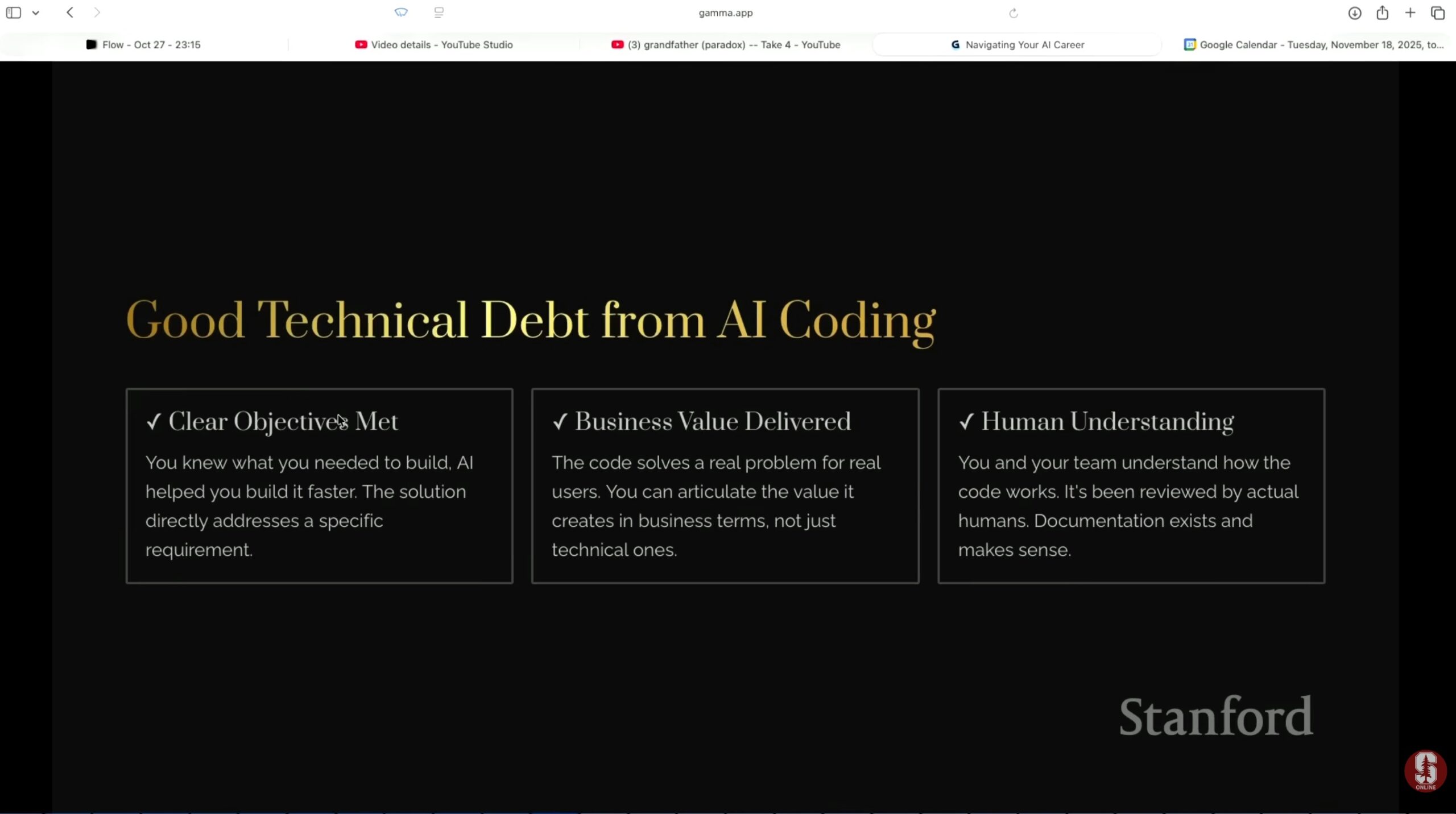

The trap of “vibe coding” and technical debt: Moroney addressed the phenomenon of using LLMs to generate entire applications. They may be powerful, but he warned that they also create massive “technical debt.”

Moroney outlined four harsh realities that define the current workplace, warning that the era of “coolness for coolness’ sake” is over. These realities represent a shift in what companies now demand from engineers.

1. Business focus is non-negotiable. Moroney noted a significant cultural “pendulum swing” in Silicon Valley. For years, companies over-indexed on allowing employees to bring their “whole selves” to work, which often prioritized internal activism over business goals. That era is ending. Today, the focus is strictly on the bottom line. He warned that while supporting causes is important, in the professional sphere, “business focus has become non-negotiable.” Engineers must align their output directly with business value to survive.

2. Risk mitigation is the job. When interviewing, the number one skill to demonstrate is not just coding, but the ability to identify and manage the risks of deploying AI. Moroney described the transition from heuristic computing (traditional code) to intelligent computing (AI) as inherently risky. Companies are looking for “Trusted Advisors” who can articulate the dangers of a model (hallucinations, security flaws, or brand damage) and offer concrete strategies to mitigate them.

3. Responsibility is evolving. “Responsible AI” has moved from abstract social ideals to hardline brand protection. Moroney shared a candid behind-the-scenes look at the Google Gemini image generation controversy (where the model refused to generate images of Caucasian people due to over-tuned safety filters). He argued that responsibility is no longer just about “fairness” in a fluffy sense; it is about preventing catastrophic reputational damage. A “responsible” engineer now ensures the model doesn’t just avoid bias, but actually works as intended without embarrassing the company.

4. Learning from mistakes is constant. Because the industry is moving so fast, mistakes are inevitable. Moroney emphasized that the ability to “learn from mistakes” and, crucially, to “give grace” to colleagues when they fail is a requirement. In an environment where even the biggest tech giants stumble publicly (as seen with the Gemini launch), the ability to iterate quickly after a failure is more valuable than trying to be perfect on the first try.

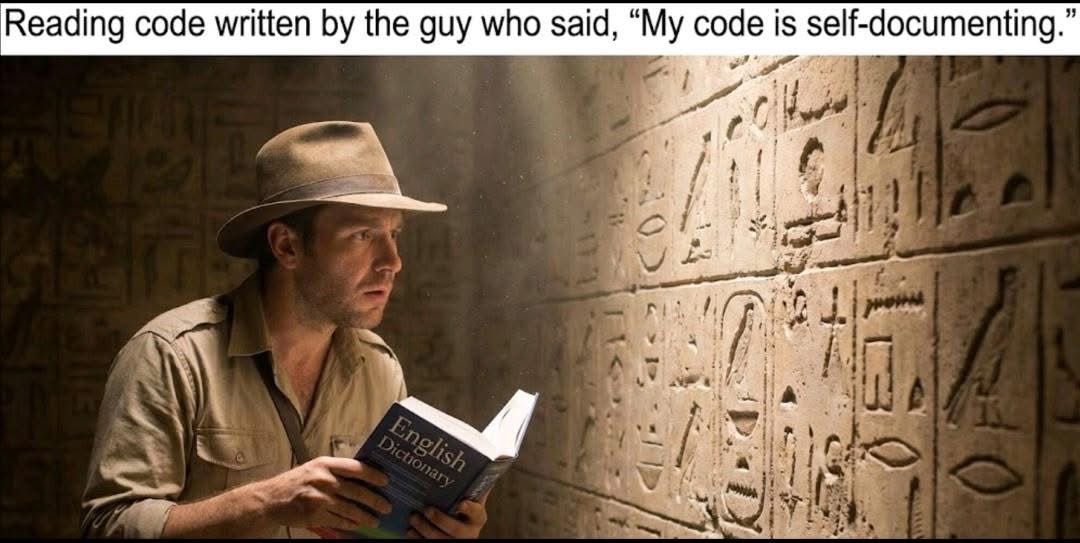

Moroney compared technical debt to a mortgage: debt isn’t inherently bad, but you must be able to service it. He defined the new role of the senior engineer as a “trusted advisor.” If a VP prompts an app into existence over a weekend, it’s the senior engineer’s job to understand the security risks, maintainability problems, and hidden bugs within that spaghetti code. You must be the one who understands the implications of the generated code, not just the one who generated it.

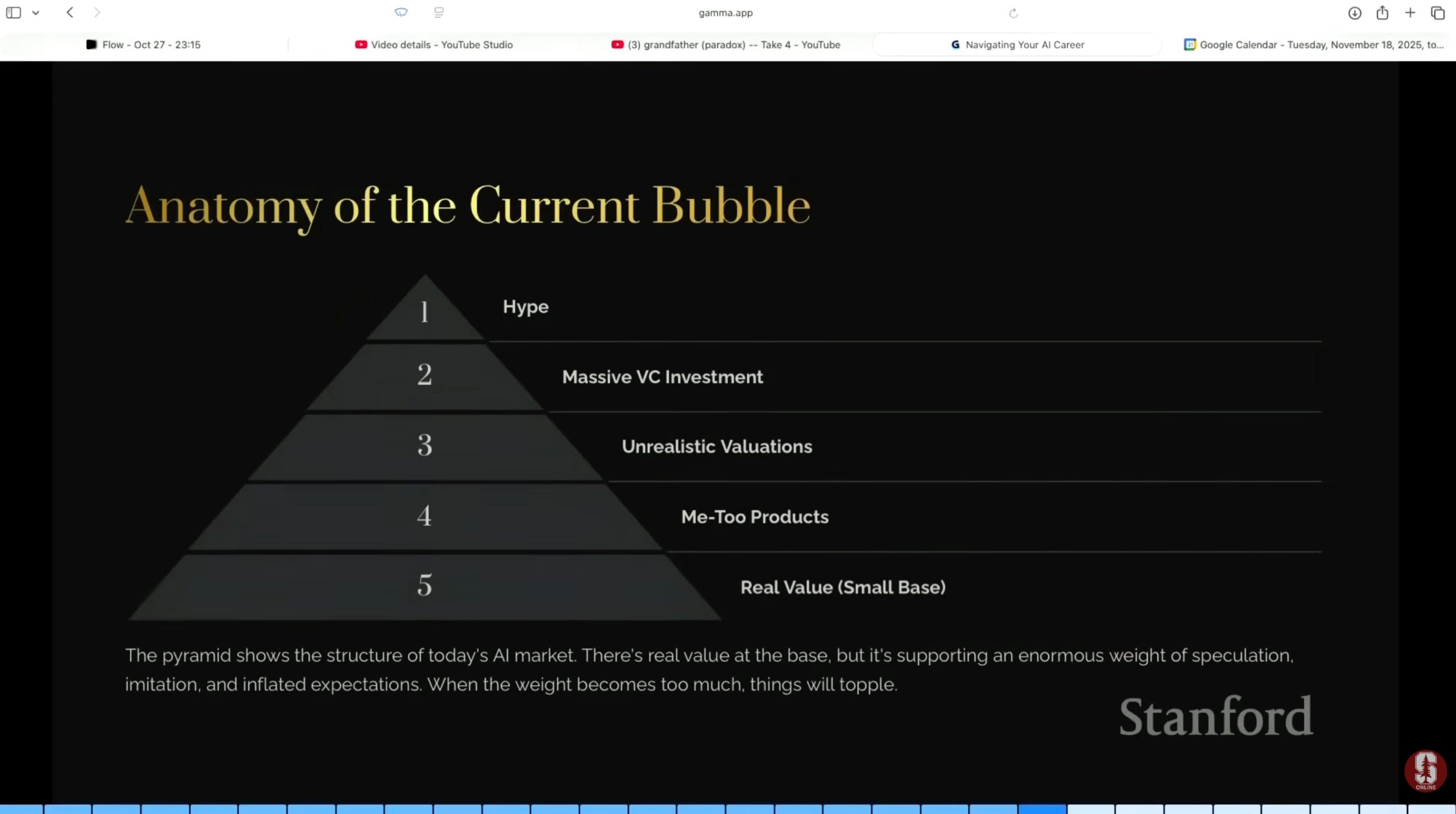

The dot-com parallel: Moroney drew a sharp parallel between the current AI frenzy and the dot-com bubble of the late 1990s. He acknowledged that we are undoubtedly in a financial bubble, with venture capital pouring billions into startups with zero revenue, but emphasized that this doesn’t mean the technology itself is a sham.

Just as the internet fundamentally changed the world despite the 2000 crash wiping out “tourist” companies, AI is a genuine technological shift that is here to stay. He warned students to distinguish between the valuation bubble (which will burst) and the utility curve (which will keep rising), advising them to ignore the stock prices and focus entirely on the tangible value the technology provides.

The bursting of this bubble, which Moroney called “The Great Adjustment,” marks the end of the “growth at all costs” era. He argued that the days of raising millions on a “cool demo” or “vibes” are over. The market is violently correcting toward unit economics, meaning AI companies must now prove they can make more money than they burn on compute costs. For engineers, this signals a critical shift in career strategy: job security no longer comes from working on the flashiest new model, but from building unglamorous, profitable applications that survive the coming purge of unprofitable startups.

Perhaps the most strategic insight from the lecture was Moroney’s prediction of a coming “bifurcation” in the AI industry over the next five years.

The industry is splitting into two distinct paths:

Moroney is bullish on “Small AI.” He explained that many industries, such as movie and television studios and law firms, are very protective of their intellectual property. These businesses will never send their intellectual property to a centralized model like GPT-4 due to privacy and IP concerns. This creates a massive, underserved market for engineers who can fine-tune small models to run locally on a device or private server.

Moroney urged the class to diversify their skills. Don’t just learn how to call an API; learn how to optimize a 7-billion parameter model to run on a laptop CPU. That is where the uncrowded opportunity lies.
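Here’s why running a 7-billion-parameter model on a laptop is an optimization problem: the weights alone take (number of parameters) × (bytes per parameter) of memory. The Python sketch below does that arithmetic for a few common numeric precisions. It counts only the weights, ignoring activations, the KV cache, and runtime overhead, and the precision labels and the `weight_memory_gb()` helper are generic illustrations rather than any particular library’s API.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {
    "fp32": 4.0,  # 32-bit float
    "fp16": 2.0,  # 16-bit float
    "int8": 1.0,  # 8-bit quantized
    "int4": 0.5,  # 4-bit quantized (two parameters per byte)
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory (decimal GB) needed just to hold the model's weights."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(7e9, precision):.1f} GB")
# fp32: 28.0 GB
# fp16: 14.0 GB
# int8: 7.0 GB
# int4: 3.5 GB
```

Going from 32-bit floats to 4-bit quantization shrinks the weights by a factor of 8, which can be the difference between a model that won’t fit in a laptop’s memory and one that will.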

Agentic workflows, the “how” of future engineering: Moroney advised the class to stop thinking of agents as magic and to start treating them as a rigorous engineering workflow consisting of four steps:

I’ll wrap up these notes with the advice Ng gave at the end of his introduction to the lecture, which he described as “politically incorrect”: Work hard.

While he acknowledged that not everyone is in a situation where they can do so, he pointed out that among his most successful PhD students, the common denominator was an incredible work ethic: nights, weekends, and the “2 AM hyperparameter tuning.”

In a world drowning in hype, Ng’s and Moroney’s “brutally honest” playbook is actually quite simple:

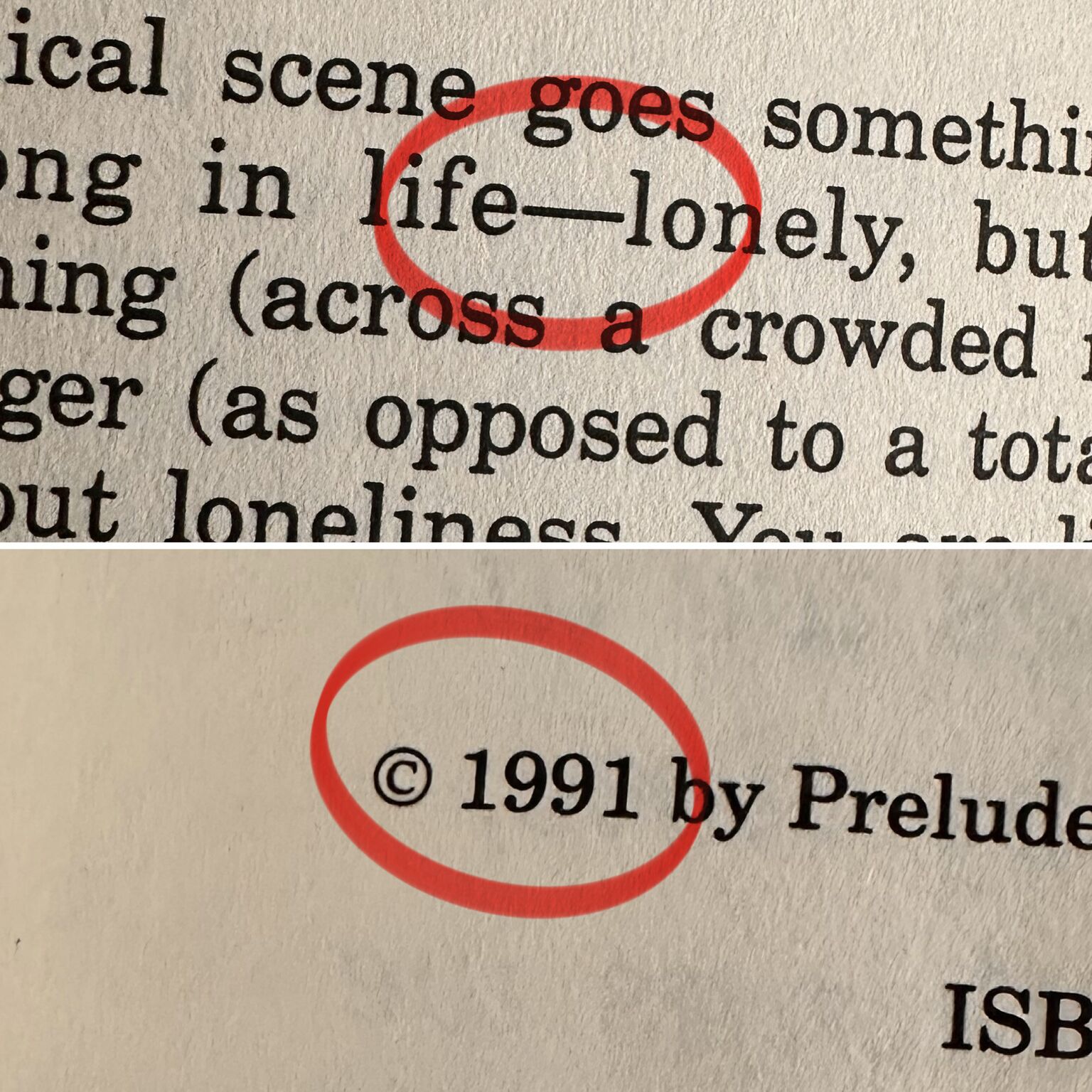

Interviews, even for people who appear onstage often, are still stressful. It often helps to have some prepared notes handy so you can spend more brainpower on the actual interview and less brainpower on remembering things. Pictured above is page one of a three-page set of notes from a recent interview; I can share this one because it’s generic enough that it didn’t need too much redacting.

If time allows, I strongly recommend writing your interview notes by hand instead of typing them. Here are my reasons why:

I don’t understand the mobile app business anymore: Duolingo, the app famous for teaching not-so-useful phrases in other languages, has put “coming soon” banners over four shuttered Hooters locations in the U.S.

The locations are:

Earlier this year, Hooters announced that it had filed for Chapter 11 bankruptcy protection and closed a number of its locations.

Duolingo, who’ve always been a little bit odd with their self-promotion, have responded to queries as you might expect: