Last Thursday, February 19th, Tampa Java User Group welcomed Pratik Patel, Java Champion and Director of Developer Relations at Azul Systems, to give his AI Native Architecture talk at Kforce headquarters. Tampa Bay AI Meetup was happy to partner with Tampa JUG, and we thank Ammar Yusuf for the invite!

We had a pretty full room…

…followed by an accordion number…

…followed by Pratik’s presentation.

Here are my notes from the presentation:

Three kinds of AI

Here’s a fun “icebreaker” game to try at your next tech gathering: ask the room to name the three fundamental types of AI, and watch what happens.

When tried on the crowd at last Thursday’s Tampa Java User Group / Tampa Bay AI Meetup, a lot of people called out “generative AI,” which was hardly a surprise.

We came close to, but didn’t directly name, the second kind: predictive analysis. It’s the kind of AI that’s been quietly running inside every credit card transaction you’ve made for the past decade. It saved me a lot of headache last year when someone used my credit card number to buy enough gas to fill an F-250 in rural Georgia while I was having a poke bowl in St. Pete. A neural network detected the mismatch between the gas-guzzler purchase and my usual spending and location patterns, which led to a text from the credit card company, and my immediate “That wasn’t me” response.

None of us got the third one: time-series AI. It’s the branch that looks at data across time to spot trends and make forecasts. Not “Will Joey buy 50 gallons of gas in rural Georgia?” but “What has Joey been buying every Friday evening for the past two years, and what does that predict about next Friday?”
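To make the “every Friday evening” idea concrete, here’s a minimal sketch of about the simplest time-series forecast there is: average the last few Fridays and use that as the guess for next Friday. The purchase history, the function name, and the four-week window are all made up for illustration; real forecasting systems use far richer models.

```python
from datetime import date, timedelta
from statistics import mean

def forecast_next_friday(purchases, window=4):
    """Seasonal-naive forecast: average the most recent `window` Friday amounts."""
    # Keep only Friday purchases (Monday is 0, so Friday is 4), oldest first.
    fridays = sorted((d, amt) for d, amt in purchases if d.weekday() == 4)
    if not fridays:
        raise ValueError("no Friday purchases in history")
    recent = [amt for _, amt in fridays[-window:]]
    return mean(recent)

# Hypothetical history: eight Fridays of spending, plus one unrelated Tuesday.
start = date(2026, 1, 2)  # a Friday
amounts = [42.0, 38.0, 45.0, 40.0, 44.0, 41.0, 39.0, 46.0]
history = [(start + timedelta(weeks=i), amt) for i, amt in enumerate(amounts)]
history.append((date(2026, 1, 6), 300.0))  # a Tuesday; the forecast ignores it

forecast = forecast_next_friday(history, window=4)
print(f"Expected spend next Friday: ${forecast:.2f}")
```

The point isn’t the math. It’s that the question is about a pattern across time rather than a single suspicious event.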

Pratik kicked off his talk on AI-native architecture with this. By the time he was done, we’d gotten a serious rethink of not just what kinds of AI exist, but what it actually means to build an application with AI at its core, as opposed to just bolting AI onto the side and hoping for a stock price bump.

Your data is your moat

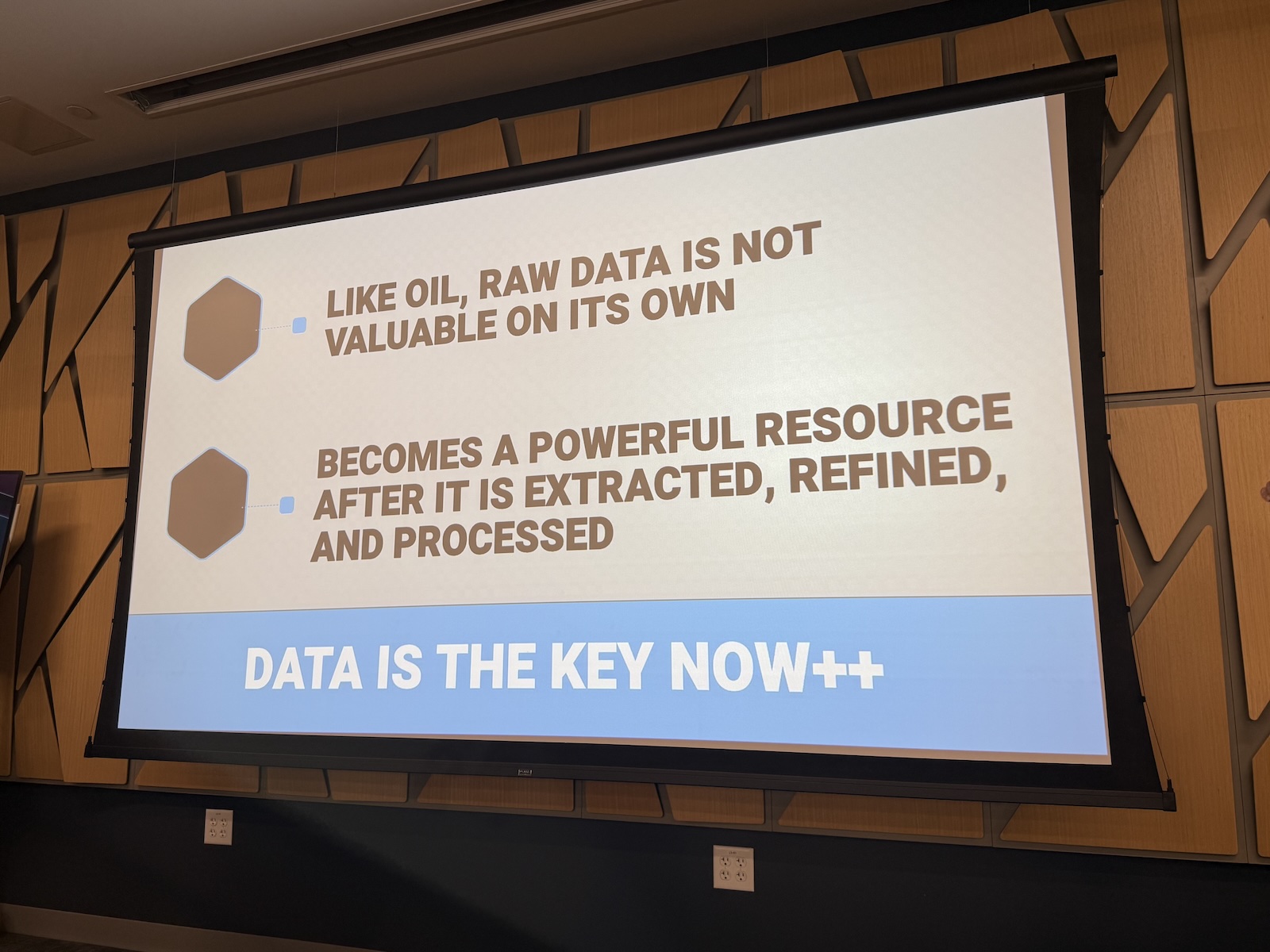

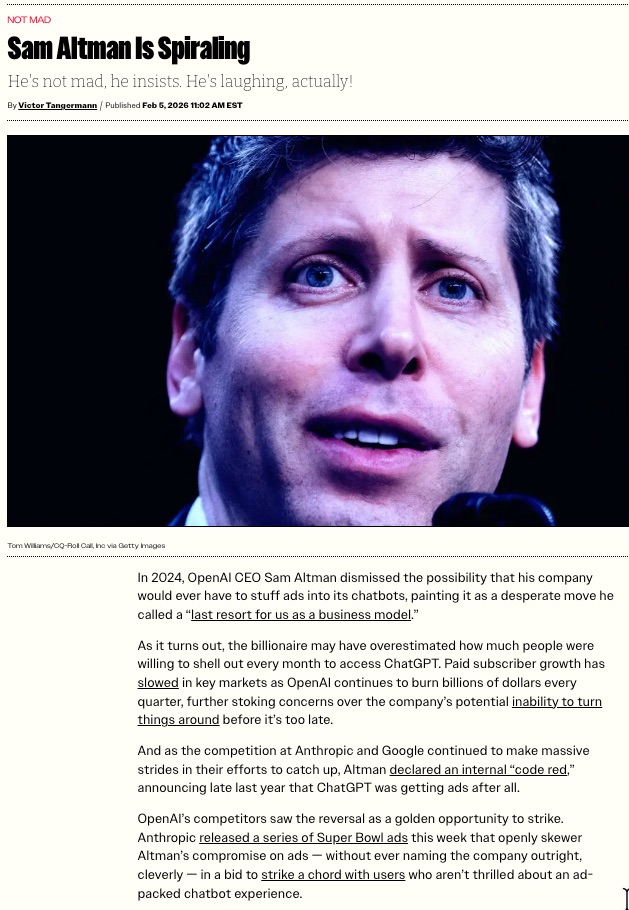

One of the central arguments Pratik made is that data is what separates a defensible business from one that can be replicated by a developer with a generous cloud credit and a free afternoon.

He used Penske Truck Leasing as his example. Anyone can, theoretically, buy a bunch of trucks and stand up a website. What you can’t easily replicate is a decade of auction data, bidding history, customer behavioral patterns, and operational intelligence. That data is what lets Penske do something like: identify a customer who bid on a truck but didn’t win the auction, then automatically reach out to offer them a similar vehicle. The data made it obvious, and a system acted on it.

This is why the old saying “data is the new oil” is actually more apt than it sounds. Raw oil isn’t useful until it’s refined. Raw data sitting in an S3 bucket isn’t useful either until it’s refined too, by cleaning it, structuring it, and using it to power an application that your competitors simply don’t have the history to replicate. This kind of advantage that sets you apart is referred to as a moat.

In this new world, where anyone can vibe-code a decent SaaS clone in an afternoon using AI tools, your proprietary data may be that moat protecting you from someone in their mom’s basement with good taste and ambition.

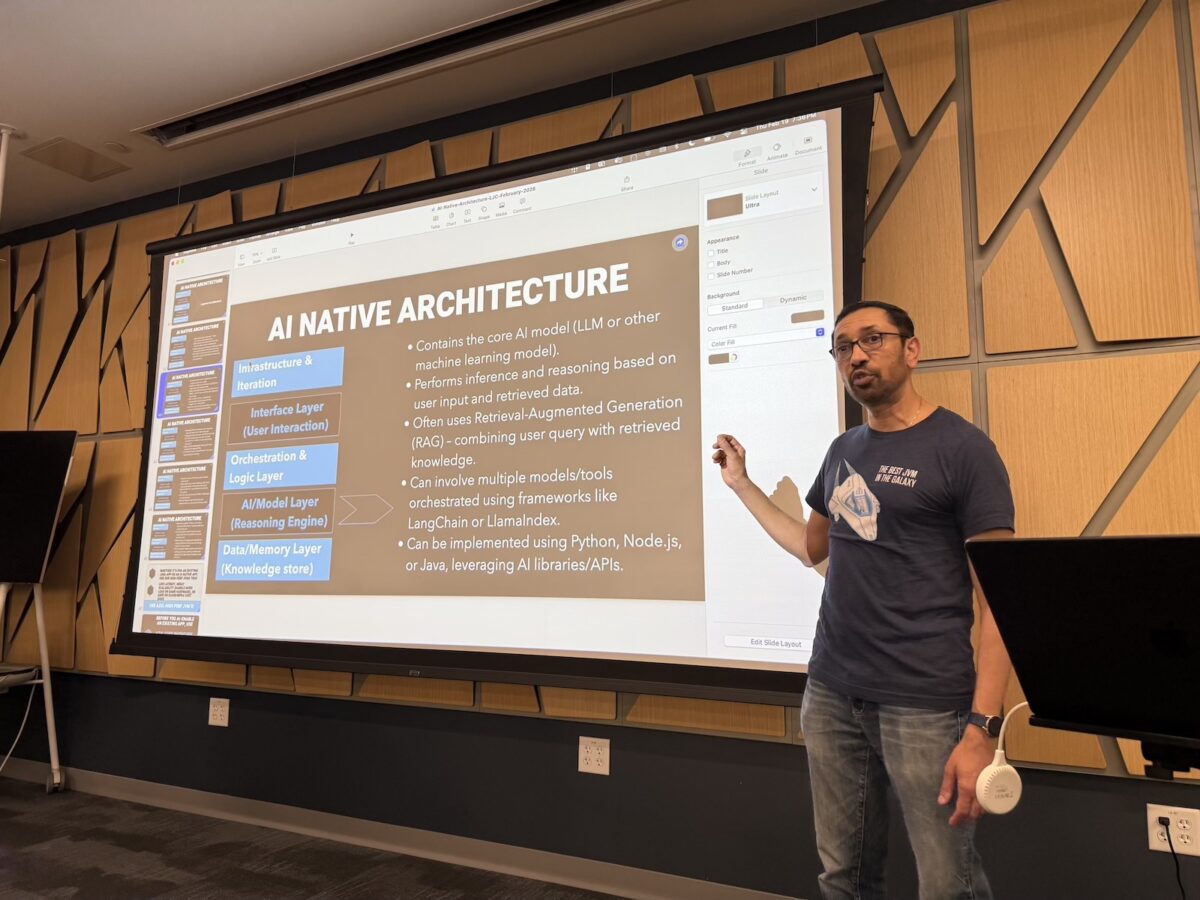

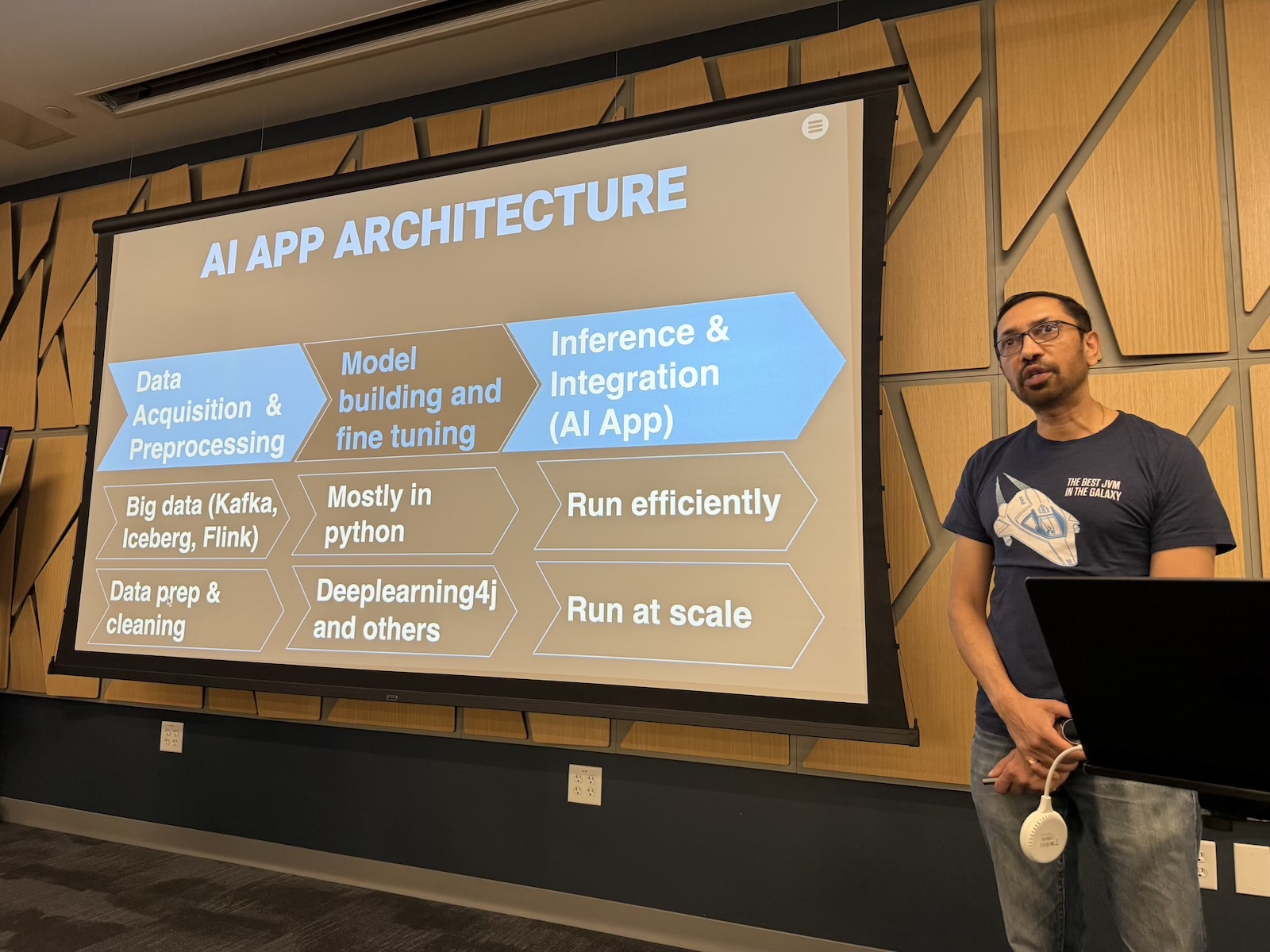

The architecture stack (or: Where all this stuff actually lives)

Pratik laid out a three-layer view of what an AI application architecture actually looks like in the real world. It was a helpful map to the “who does what” question that comes up whenever engineering teams start building this stuff.

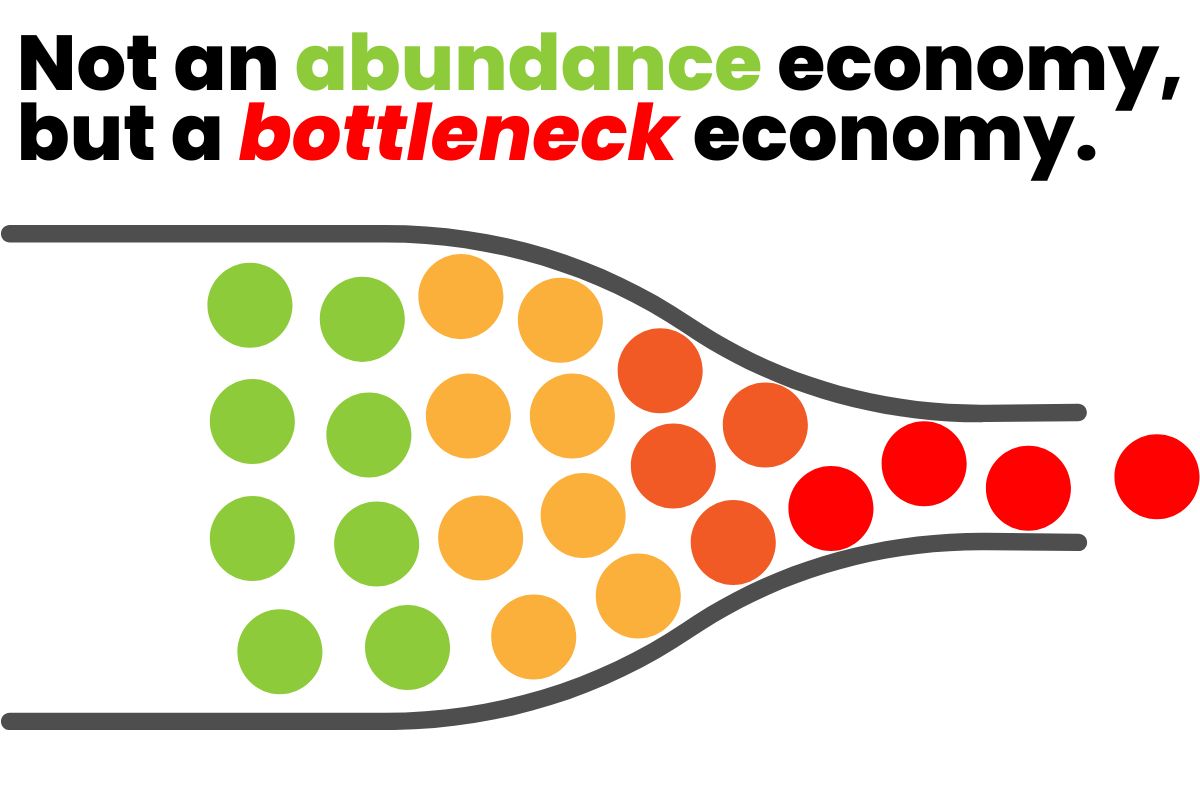

On the left side is data acquisition and preprocessing. This comprises tools like Apache Kafka for event streaming, Apache Iceberg as a data layer that lets multiple teams share the same underlying datasets without tripping over each other, and Spark for processing data at scale. This is where collection, cleaning, and transformation happen. It’s also where most AI projects quietly die, because the data turns out to be messier than anyone admitted during planning.

In the middle is model building and fine-tuning. Pratik was direct here: your company is almost certainly not going to train its own large language model from scratch. The estimates for what it cost to train GPT-5 range from $100 million to over a billion dollars in GPU time. Unless “Uncle Larry” is personally funding your AI initiative, you’re going to use an off-the-shelf model, like OpenAI’s GPT models, Gemini, Claude, or one of the increasingly capable open-weight models like DeepSeek or Alibaba’s Qwen3. The Python ecosystem owns this tier for now, thanks to its long history in data science and extensive libraries, though Java options like Deeplearning4j are maturing.

On the right is inference and integration, which is where most application developers will actually spend their time. This is the code you write to orchestrate models, retrieve relevant context, handle the results, and deliver a useful experience to users. This is also where AI-native thinking diverges sharply from “AI bolted on,” which Pratik spent considerable time on.

The most important thing Pratik said all evening

Here it is: LLMs are non-deterministic, and that changes everything about how you build software.

Traditional software is built on deterministic foundations. If you write a database query that asks for a specific user profile, you will get the exact same answer every time: that user’s profile. The result is deterministic, and it’s reliable in a way that software developers have spent the previous decades taking for granted.

LLMs don’t work that way. Ask the same question twice and you may get meaningfully different answers. That’s just a fundamental property of how token prediction and the attention mechanism work. The model doesn’t do the deterministic thing and look up an answer. Instead, it generates an answer based on probabilistic similarity to everything it has ever been trained on.
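One common source of that variation is the sampling step at the end of token prediction: the model produces a score for every candidate token, and the next token is drawn at random in proportion to those scores. Here’s a toy sketch of that step. The three-word vocabulary and the scores are invented, and a real model has tens of thousands of tokens and many other moving parts.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def next_token(vocab, logits, temperature, rng):
    if temperature == 0:
        # Greedy decoding: always take the top-scoring token.
        return vocab[logits.index(max(logits))]
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical candidates for the next token and their scores.
vocab = ["Tampa", "Ybor", "Seminole Heights"]
logits = [2.0, 1.5, 0.5]

rng = random.Random(7)  # seeded only so this demo is repeatable
greedy = {next_token(vocab, logits, 0, rng) for _ in range(200)}
sampled = {next_token(vocab, logits, 1.0, rng) for _ in range(200)}

print("temperature 0:", greedy)
print("temperature 1:", sampled)
```

In this toy version, greedy decoding always picks the same token, while sampling wanders across several. Hosted models can still vary even with the temperature turned all the way down, so treat this as an illustration of the idea rather than a fix.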

When the generated answer is wrong, we call it hallucination. But the more accurate framing is that hallucination is the shadow side of the same capability that makes these models useful at all.

(Joey’s note: I like to say “All LLM responses are hallucinations. It’s just that some hallucinations are useful.”)

For casual applications, such as “Find me a bar with karaoke near downtown Tampa,” we can put up with a certain amount of “wrongness.” You go there, find out there’s no karaoke, drink anyway, call it a night. However, for a system that’s analyzing medical imaging and flagging potential tumors, our tolerance for wrongness is zero, and “the model felt pretty confident” is not an acceptable answer.

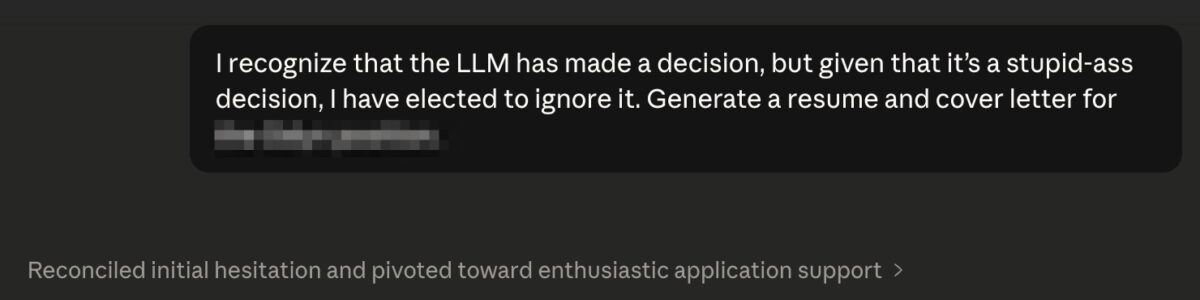

The emerging approaches to this are interesting: evaluation frameworks built into tools like Spring AI and LangChain that let you run suites of tests against model outputs; and something called “LLM as a judge,” where you use a second model to evaluate the outputs of the first. Ask OpenAI a question, get an answer, hand both the question and the answer to Gemini and say: “Does this look right?” It’s new, it’s imperfect, and it’s the current state of the art.
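Here’s a rough sketch of the “LLM as a judge” loop, with both models replaced by stand-in functions so that it runs without any API keys. Everything named here (`judge_answers`, `Verdict`, the fake models, the PASS/FAIL convention) is hypothetical and is not taken from Spring AI, LangChain, or Pratik’s slides; in a real setup, the two callables would wrap calls to two different providers.

```python
from dataclasses import dataclass

@dataclass
class Verdict:
    question: str
    answer: str
    passed: bool

def judge_answers(questions, answer_model, judge_model):
    """Ask one model each question, then ask a second model to grade the answer."""
    verdicts = []
    for question in questions:
        answer = answer_model(question)
        judge_prompt = (
            f"Question: {question}\n"
            f"Answer: {answer}\n"
            "Does this look right? Reply PASS or FAIL."
        )
        reply = judge_model(judge_prompt)
        passed = reply.strip().upper().startswith("PASS")
        verdicts.append(Verdict(question, answer, passed))
    return verdicts

# Stand-ins for real model calls (hypothetical).
def fake_answer_model(question):
    canned = {"What is 2 + 2?": "4", "Capital of Florida?": "Miami"}
    return canned.get(question, "I don't know")

def fake_judge_model(prompt):
    acceptable = ("Answer: 4\n", "Answer: Tallahassee\n")
    return "PASS" if any(a in prompt for a in acceptable) else "FAIL"

questions = ["What is 2 + 2?", "Capital of Florida?"]
verdicts = judge_answers(questions, fake_answer_model, fake_judge_model)
pass_rate = sum(v.passed for v in verdicts) / len(verdicts)
print(f"pass rate: {pass_rate:.0%}")
```

Because the answers vary from run to run, the useful number is usually a pass rate over many runs rather than a single green checkmark.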

The good news, as Pratik put it: everyone is early. You are not behind.

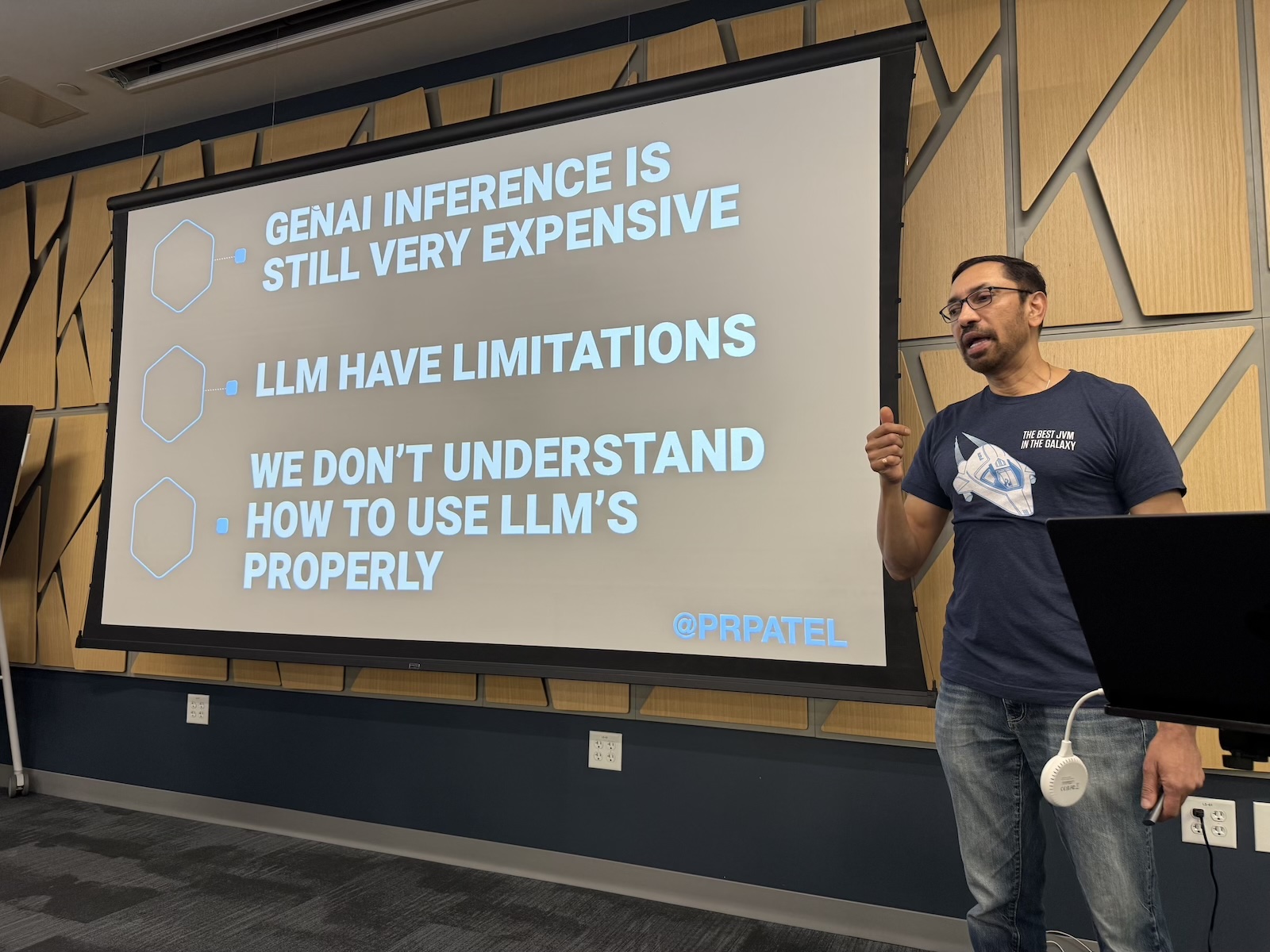

About those costs

Don’t let the $20/month subscription price fool you into thinking AI inference is cheap at scale.

Pratik made the case that inference costs are not going to come down dramatically anytime soon, and offered some uncomfortable data points in support. Moore’s Law, the Intel cofounder’s observation that transistor density on chips doubles roughly every two years (often quoted as every 18 months), is effectively dead. We’re at single-digit-nanometer process nodes in chip fabrication, and at that level of miniaturization, you’re really starting to fight the laws of physics.

GPU prices have gone in the opposite direction of what you might hope: the Nvidia 5090, the top consumer-grade card, has gone from roughly $2,000 at launch to $4,000 on the secondary market. RAM prices have spiked because every data center on Earth is buying it for AI workloads. When Pratik noticed RAM prices shooting up, he moved money into Western Digital and Seagate stock. He may be onto something.

The practical upshot for developers building applications: if you’re running hundreds of evaluation tests per hour during development (which is what you should be doing, given the non-determinism problem described above), burning frontier model tokens for all of that is going to get expensive fast.
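A quick back-of-the-envelope calculation shows how this adds up. Every number below is a placeholder I picked for illustration (tests per hour, tokens per test, and especially the price per million tokens); none of them comes from the talk or from any vendor’s price list, so plug in your own.

```python
def monthly_eval_cost(tests_per_hour, tokens_per_test, price_per_million_tokens,
                      hours_per_day=8, days_per_month=21):
    """Estimate one developer's monthly token bill for evaluation runs."""
    tokens = tests_per_hour * tokens_per_test * hours_per_day * days_per_month
    return tokens / 1_000_000 * price_per_million_tokens

# Hypothetical workload: 300 tests an hour, 2,000 tokens each, $10 per million tokens.
cost = monthly_eval_cost(tests_per_hour=300, tokens_per_test=2_000,
                         price_per_million_tokens=10.0)
team_cost = cost * 10  # a hypothetical ten-person team

print(f"per developer: ${cost:,.2f}/month")
print(f"team of ten:   ${team_cost:,.2f}/month")
```

Under those assumptions, that works out to about 100.8 million tokens per developer per month, which is a very different bill from a $20 subscription.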

Pratik’s solution is to do the bulk of development testing against locally run open-weight models via Ollama. His current recommendations: qwen3-coder for coding-adjacent tasks (and it legitimately does not phone home, I’ve run Wireshark to confirm), and nemotron from Nvidia for more general work. Then switch to the frontier model for production and final evaluation. Your laptop handles the iteration, and the cloud handles the deployment.
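Here’s one way the “laptop for iteration, cloud for production” split might look in code. It assumes Ollama is running locally on its default port and uses its `/api/generate` endpoint; the `APP_ENV` variable and the helper names are my own invention, and the frontier-model side is left out because it depends on which provider you use.

```python
import json
import os
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint

def build_ollama_request(prompt, model="qwen3-coder"):
    """Build (but don't send) a non-streaming generate request for a local model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask_local(prompt, model="qwen3-coder"):
    """Send the request; requires a running Ollama with the model already pulled."""
    with urllib.request.urlopen(build_ollama_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]

def pick_backend(env=None):
    """Hypothetical switch: local model unless APP_ENV says production."""
    env = os.environ if env is None else env
    return "frontier" if env.get("APP_ENV") == "production" else "local"

request = build_ollama_request("Write a unit test for a FizzBuzz function.")
print(pick_backend({}), request.full_url)
```

Keeping the choice of backend behind one small function means the evaluation suite itself doesn’t need to change when you swap models.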

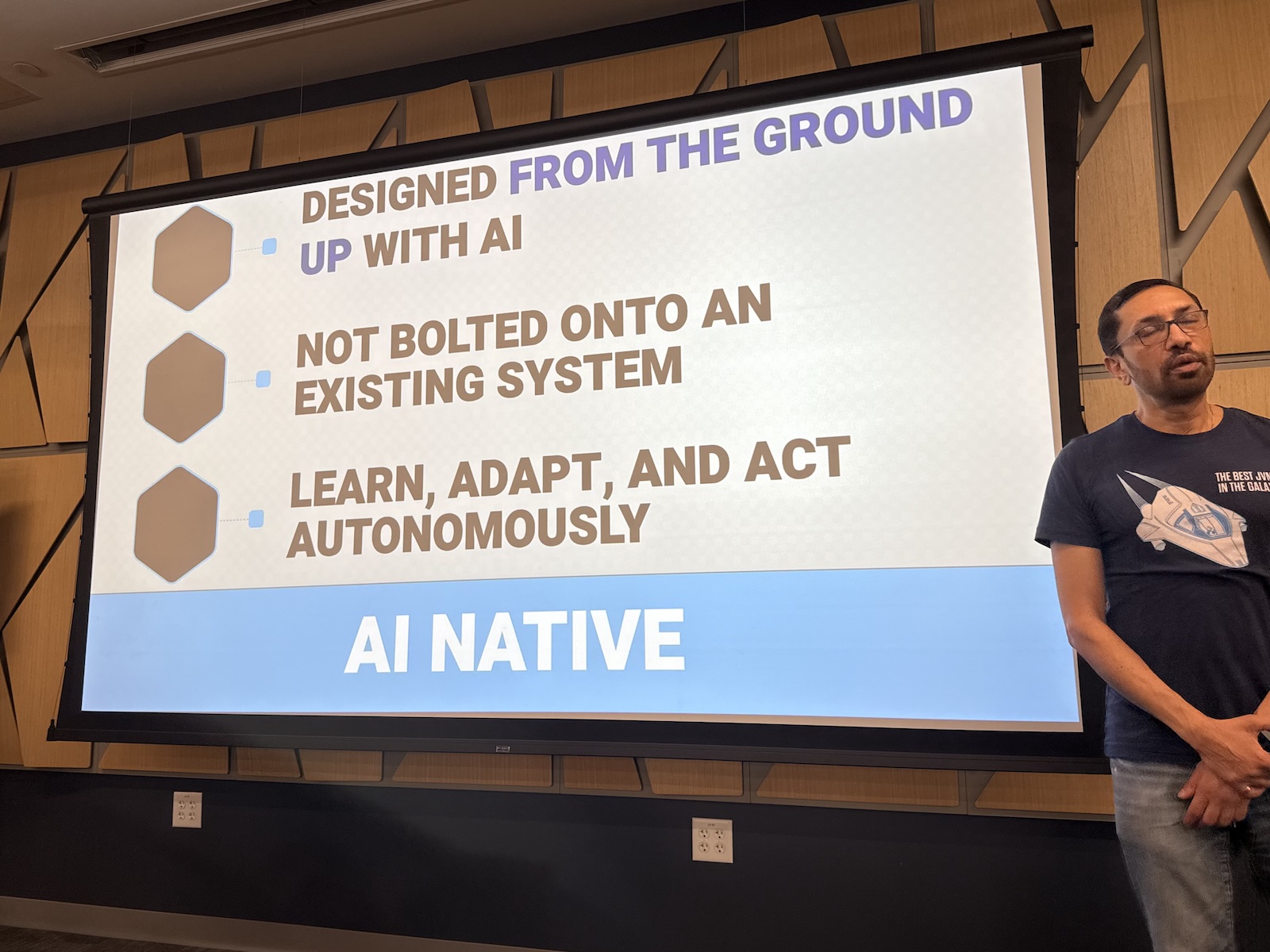

AI-native vs. bolting AI on (or: What actually matters)

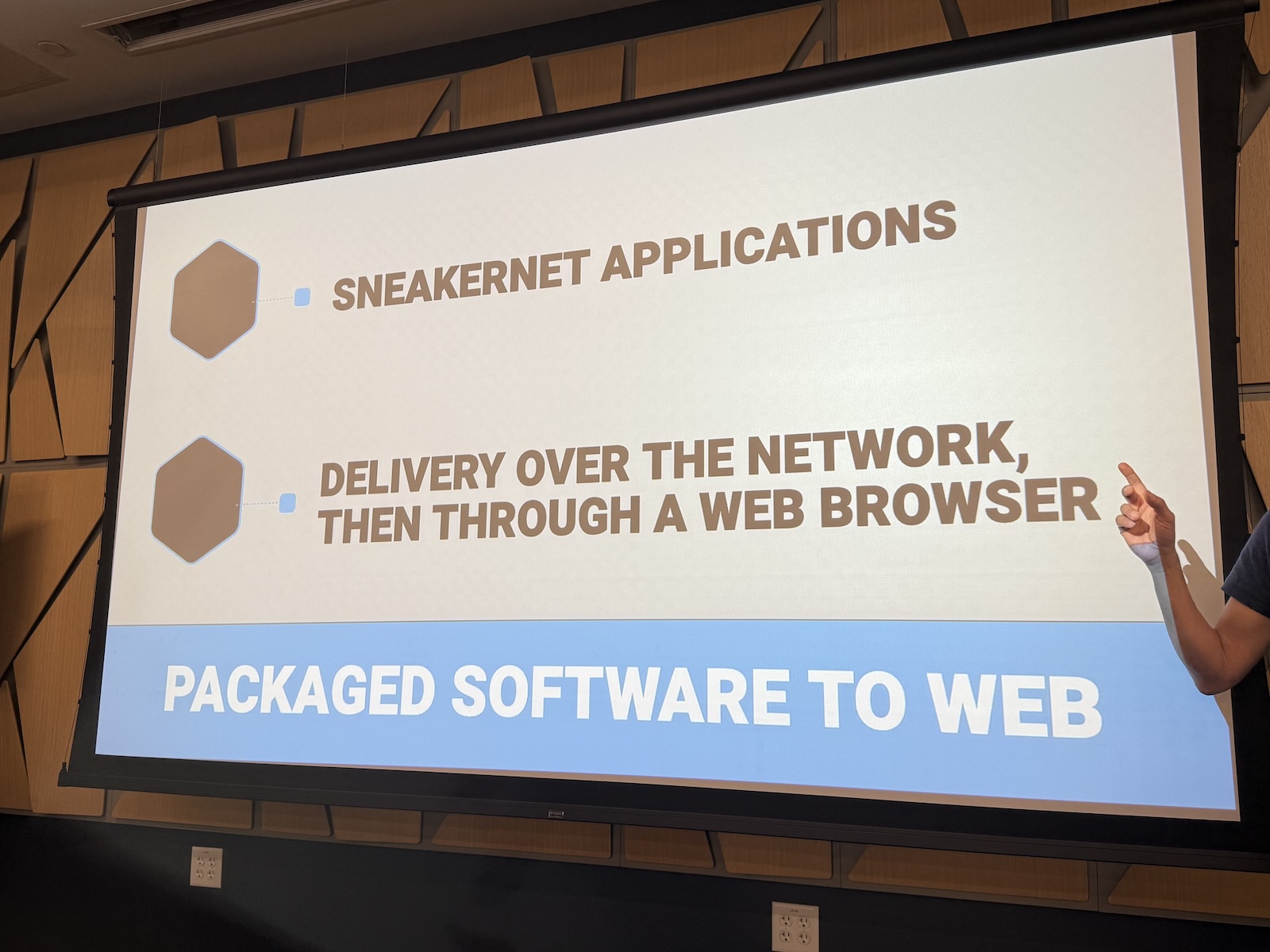

You’ve heard this story before, even if you don’t immediately recognize it.

Pratik brought up an old term: sneakernet. That’s from the era when all software was executables running on your machine, and deploying software meant physically walking to a user’s desk with a floppy disk. Then came the cloud, and suddenly continuous deployment became a thing, and anyone still doing quarterly releases felt like a relic.

But here’s what’s easy to forget: cloud native wasn’t just about faster deploys. It forced a complete rethink of how applications are designed, how they’re operated, and how they fail. The servers went from being pets (named, tended, mourned when they died) to being cattle (anonymous, disposable, replaced without ceremony). This called for a different approach.

Pratik’s central argument is that we’re at exactly that same inflection point with AI, and that most companies are going to blow it, at least initially.

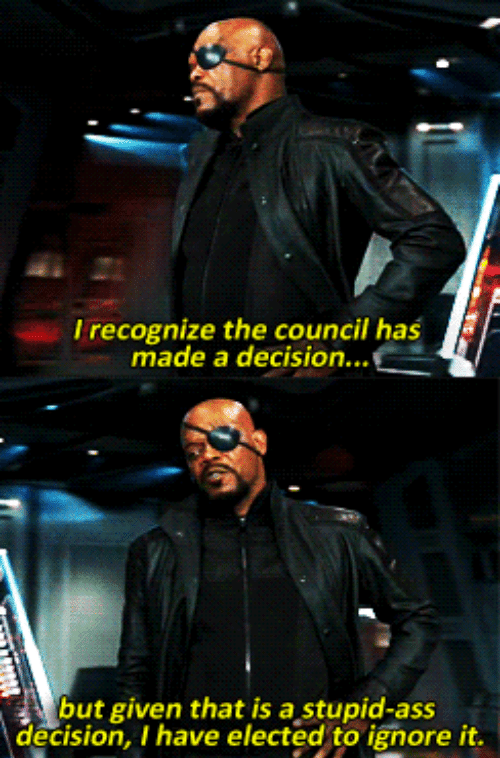

When your boss comes in and says “put some AI in the product so our stock price goes up” (Pratik confirmed this is a real conversation people are having in real offices, not a joke), the tempting response is to bolt on a RAG endpoint, add “AI-powered” to the marketing copy, and call it a day. Retrieve some relevant documents. Stuff them into a prompt. Return a plausible-sounding answer. Ship it!

That’s not AI-native. That’s sneakernet with an LLM duct-taped to it.

An AI-native system learns, adapts, and acts autonomously. Not when a user presses a button. Proactively, in response to new data, with judgment that improves over time.

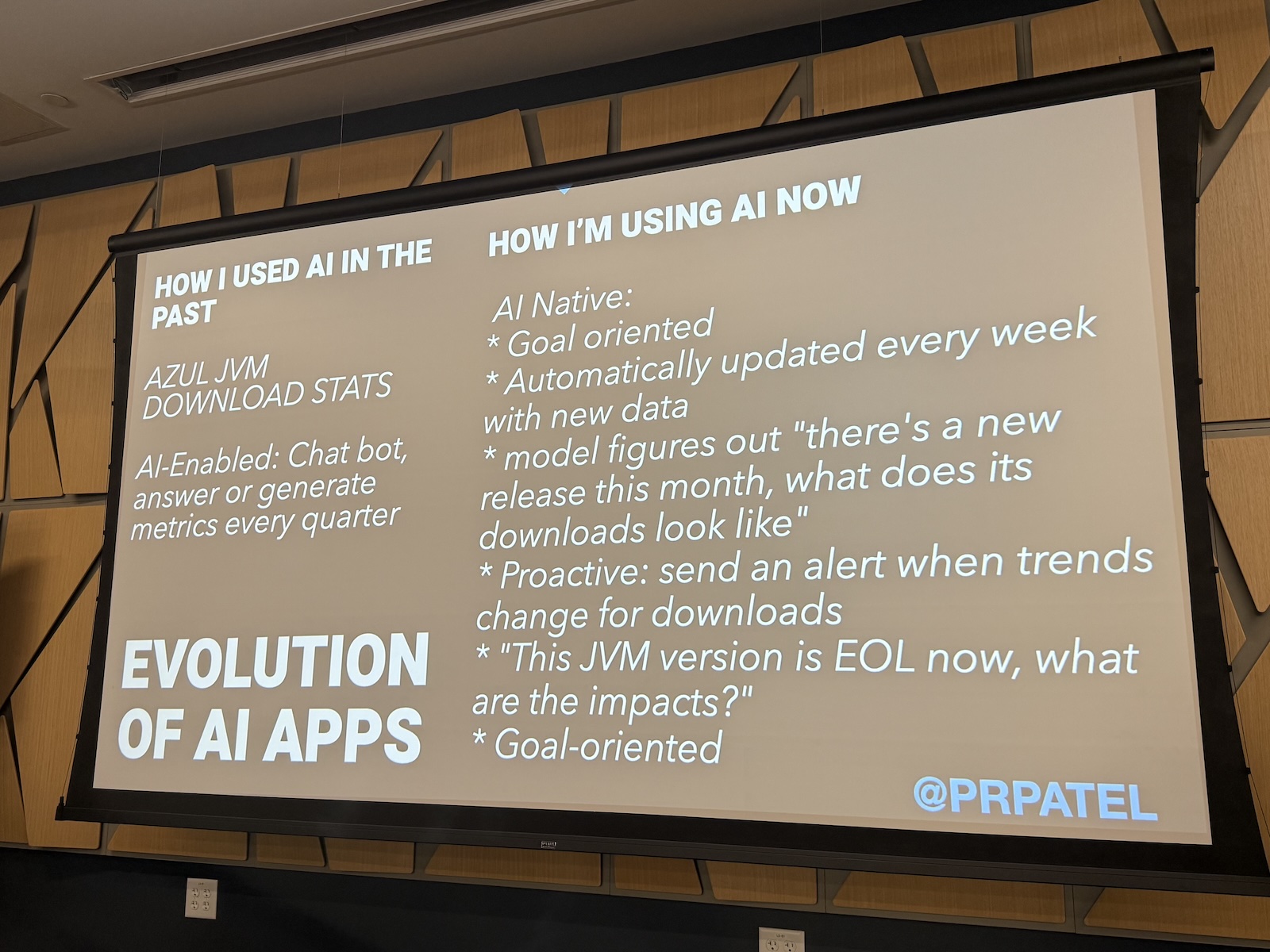

Pratik described the evolution of his own download analytics system as a concrete example. It started as “AI bolted on,” with a natural language interface that let people query a Spark cluster without writing SQL. Useful. Not native.

Over the past year and a half, he rebuilt it into something different: a system that monitors weekly data feeds, detects when something has changed (for example, a spike in Java 17 downloads), connects that to relevant context from an internal knowledge base (there was a critical security patch), and proactively sends him a synthesized briefing before he even thinks to ask. He still reviews it. But the thinking now happens without him.
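To give a feel for the shape of that loop (watch the feed, notice a change, pull in context, write the briefing), here’s a deliberately tiny sketch. It is not Pratik’s system: the download counts, the knowledge base entry, the three-standard-deviation threshold, and the `fake_summarize` stand-in for an LLM call are all assumptions made up for this example.

```python
from statistics import mean, stdev

def detect_spike(history, latest, threshold=3.0):
    """Flag `latest` if it is more than `threshold` standard deviations above the historical mean."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest > mu
    return (latest - mu) / sigma > threshold

def build_briefings(feed, knowledge_base, summarize):
    """For each product whose newest weekly count spiked, attach context and summarize."""
    briefings = []
    for product, counts in feed.items():
        *history, latest = counts
        if len(history) >= 2 and detect_spike(history, latest):
            context = knowledge_base.get(product, [])
            briefings.append(summarize(product, latest, context))
    return briefings

def fake_summarize(product, latest, context):
    # Stand-in for the LLM call that would write the real briefing.
    notes = "; ".join(context) or "no related notes found"
    return f"{product} downloads jumped to {latest}. Possible cause: {notes}"

# Hypothetical weekly download counts; the last number is this week's.
feed = {
    "Java 17": [1000, 1040, 980, 1010, 2400],
    "Java 21": [800, 820, 790, 810, 815],
}
knowledge_base = {"Java 17": ["critical security patch released this week"]}

briefings = build_briefings(feed, knowledge_base, fake_summarize)
for line in briefings:
    print(line)
```

What this sketch leaves out is the part that makes a system genuinely AI-native: it doesn’t learn from which briefings turned out to be useful. It only shows the proactive trigger, where the work starts because the data changed and not because someone asked.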

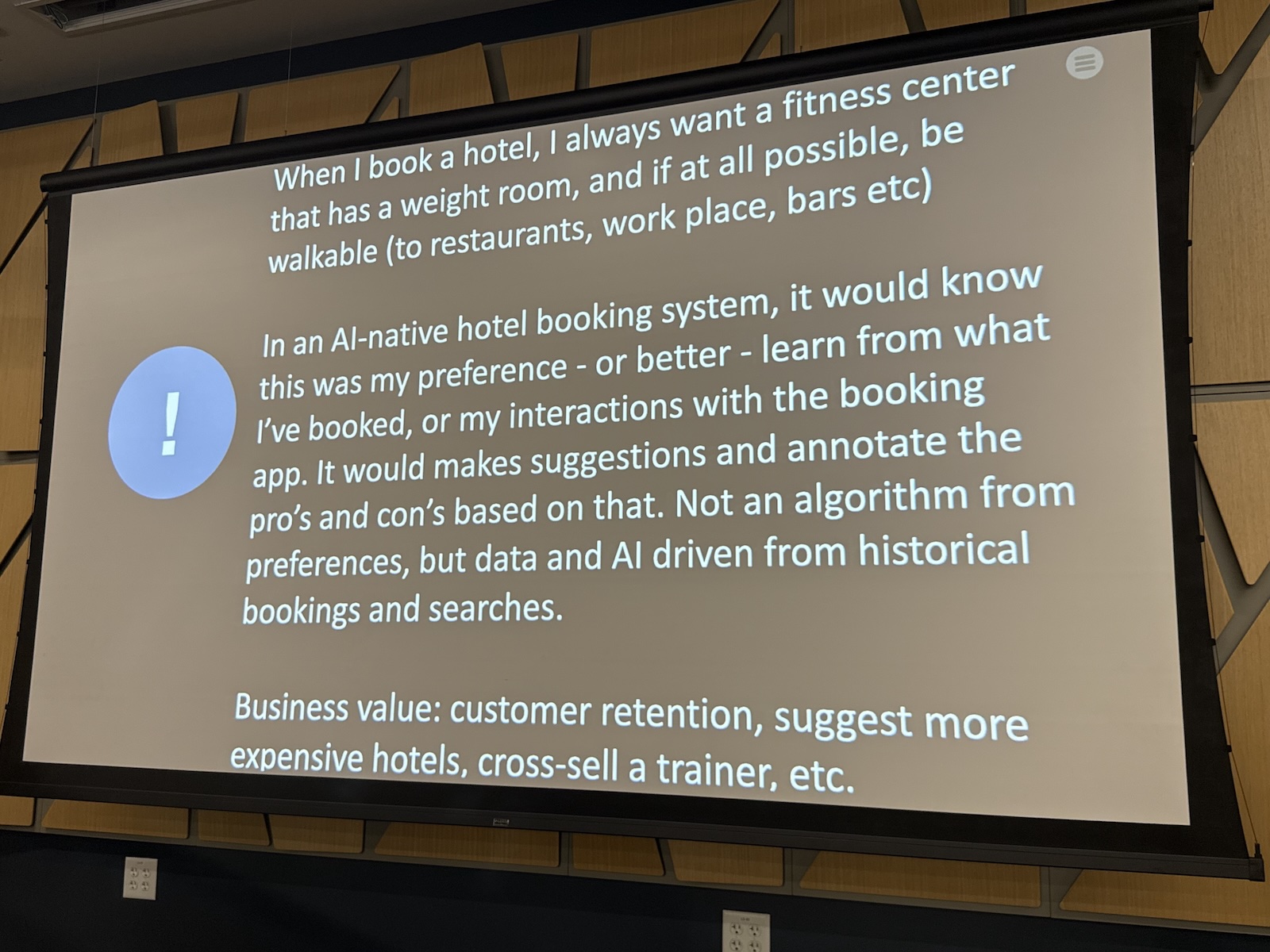

The hotel booking example he used to illustrate the idea is even more vivid. Pratik has a specific, consistent set of hotel preferences: he wants to be within walking distance of wherever he’s speaking, the gym needs to be a real gym (not a treadmill and a motivational poster — Hotel 5 in Seattle, I’m lookin’ right at you), and he always searches by exact address rather than city name. He does this exact sequence of clicks every single time he books a hotel. An AI-native Marriott system would see this behavioral pattern, learn from it, and surface the right three options without him having to do any of that manual filtering. Not because someone programmed “Pratik likes gyms” into a rule engine, but because the system observed his behaviors, inferred some patterns, and generalized.

Could you do all of this algorithmically? Technically, yes. But think about it: you’d be writing bespoke preference logic for millions of users with different, compounded, evolving preferences, and you’d be doing it forever. The whole point of using an LLM here is that you’re borrowing its capacity for generalization instead of hand-coding every case yourself.

Agents, fine-tuning, and a grain of salt

Pratik offered a measured take on the current agentic AI frenzy. Agents can act, but do they actually learn from what they’ve done? That’s the gap between today’s agentic frameworks and a genuinely AI-native system. Agents are probably not going away because they’re real and useful, but the framing will shift again in six months (that’s just how this space works). The best approach is to build the fundamentals, not the hype.

On fine-tuning: if you need a model that’s deeply specialized for a domain, you don’t have to build an LLM from scratch. Low-Rank Adaptation (LoRA) lets you take an existing large model and attach a small domain-specific adapter that shifts its effective weights toward your area of expertise, without retraining the base model. OpenAI’s recently released finance-specific model, built in collaboration with Goldman Sachs and trained on a large corpus of financial data, is exactly this. The base model does the heavy lifting. The adapter makes it fluent in corn futures.
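For the curious, the core LoRA idea fits in one line of math. This is the standard formulation from the LoRA literature, not something shown in the talk: the pretrained weight matrix $W_0$ stays frozen, and only two small matrices $A$ and $B$ are trained.

$$
h = W_0 x + \frac{\alpha}{r} B A x, \qquad W_0 \in \mathbb{R}^{d \times k},\quad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$

The adapter has $r(d + k)$ trainable parameters, compared with $dk$ for the full matrix. As an illustrative example with numbers I chose, $d = k = 4096$ and $r = 8$ gives $8 \times 8192 = 65{,}536$ adapter parameters against $16{,}777{,}216$ in the original matrix, or roughly 0.4%.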

On RAG: retrieval-augmented generation is essentially fancy-pants prompt stuffing. You find the documents most relevant to a user’s query, pull them in, and let the model reason over them. It’s the right approach for a lot of use cases, it’s not magic, and it works best when your underlying data is actually clean and well-structured. Remember the greybeard saying: “Garbage in, garbage out,” a principle that the age of AI has managed to make both more important and more dangerous, since we can now generate garbage at industrial scale.
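Here’s what “fancy-pants prompt stuffing” looks like when you strip it down to the studs. This sketch ranks documents by simple word overlap, which is a stand-in I chose to keep the example dependency-free; production systems typically use embeddings and a vector store. The documents and function names are made up.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=2):
    """Return the k documents sharing the most words with the query (a toy relevance score)."""
    query_words = tokenize(query)
    ranked = sorted(documents,
                    key=lambda doc: len(query_words & tokenize(doc)),
                    reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=2):
    """Stuff the retrieved documents into the prompt ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

# Hypothetical internal knowledge base.
docs = [
    "Java 17 received a critical security patch this week.",
    "The office karaoke night moves to Thursdays.",
    "Java 21 downloads were flat compared with last month.",
]

prompt = build_prompt("Why did Java 17 downloads spike?", docs, k=2)
print(prompt)
```

Notice that the model only ever sees what retrieval hands it. If the karaoke memo had been mislabeled as a Java release note, it would have been stuffed in just as confidently, which is the garbage-in, garbage-out problem in miniature.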

The take-away

If you walked away from Pratik’s talk with one thing, it should probably be this: the fundamental shift AI requires isn’t technical. It’s conceptual. Just like cloud native forced you to stop thinking about servers as permanent fixtures and start thinking about them as fungible infrastructure, AI native requires you to stop thinking about AI as a feature you add to an application and start thinking about it as the substrate the application is built on.

The application that learns. The application that adapts. The application that wakes up when new data arrives and starts thinking before you ask it to.

That’s the goal. We’re early. The tools are changing fast. But the direction is clear, and the developers who internalize that shift now, rather than bolting features on and hoping for a stock price bump, are going to be the ones building the interesting stuff.

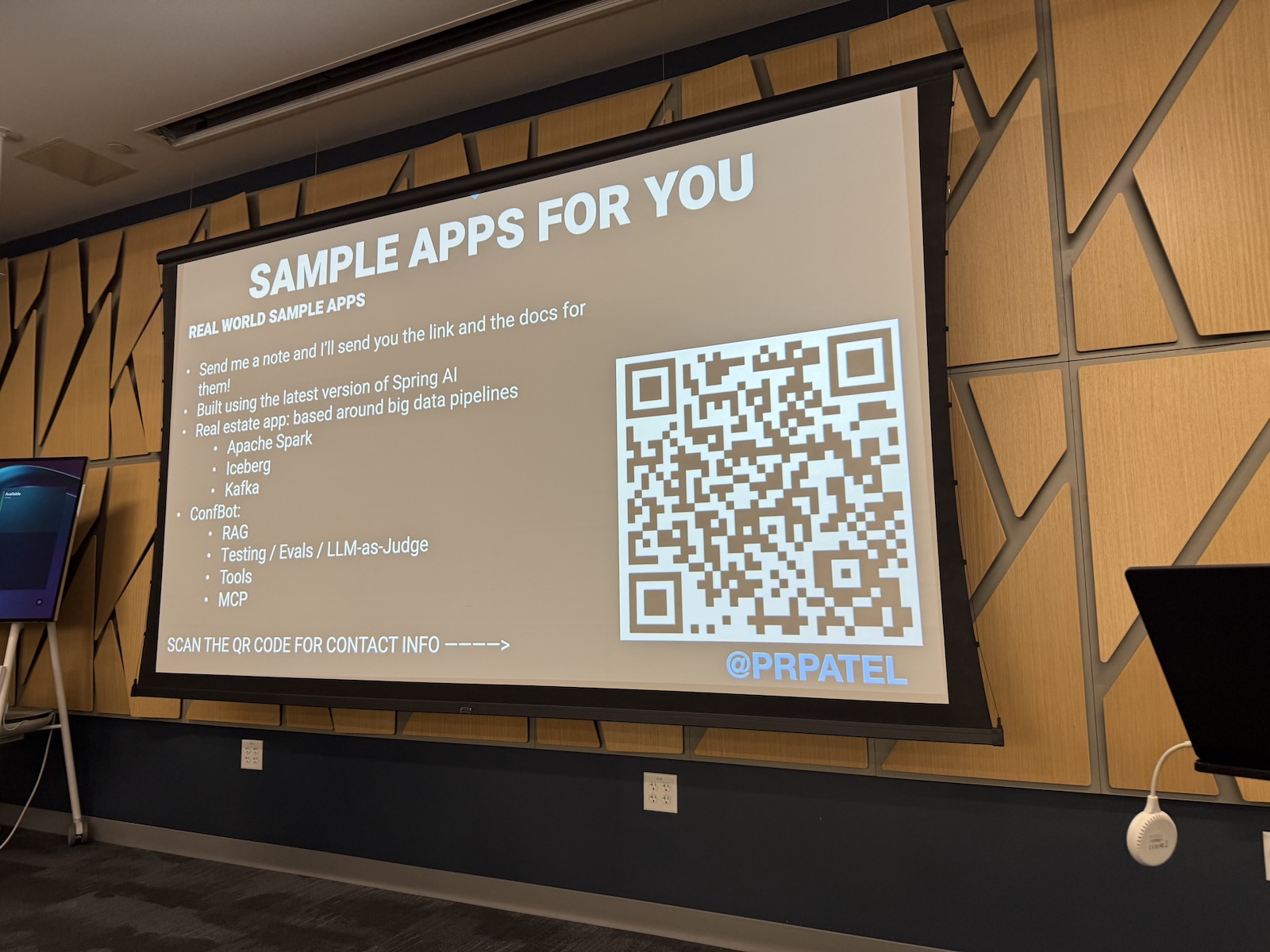

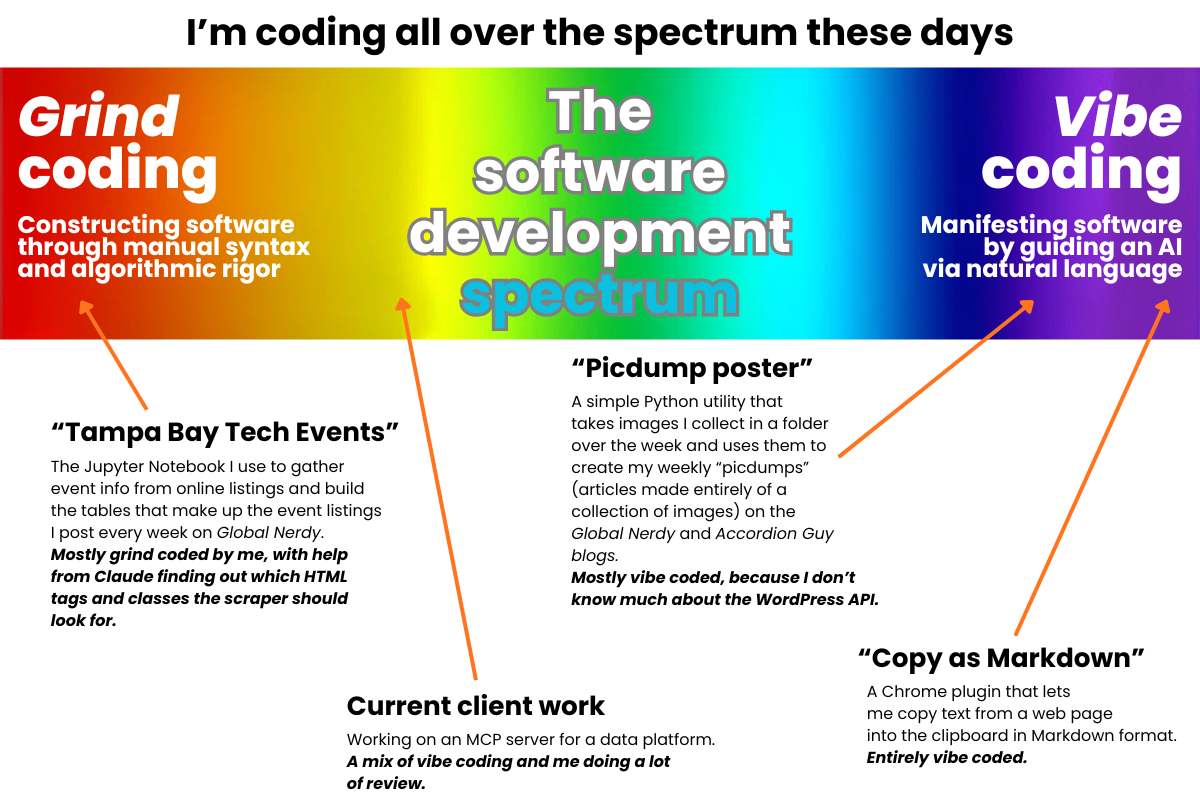

Sample apps!

If you’d like to dive deeper into what Pratik was talking about, he has companion sample apps. The details are in this picture: