Yes, NVIDIA’s share price took a 17% beating yesterday, but if you stop thinking like a day trader, you’ll remember that even with yesterday’s losses, the price has more than doubled over the past 365 days.

Breathe.

By now, you’ve probably heard all the fuss about the DeepSeek R1 AI model, which powers a chatbot that performs as well as (and in some cases, better than) OpenAI’s top-of-the-line o1 model, but differs in these key ways:

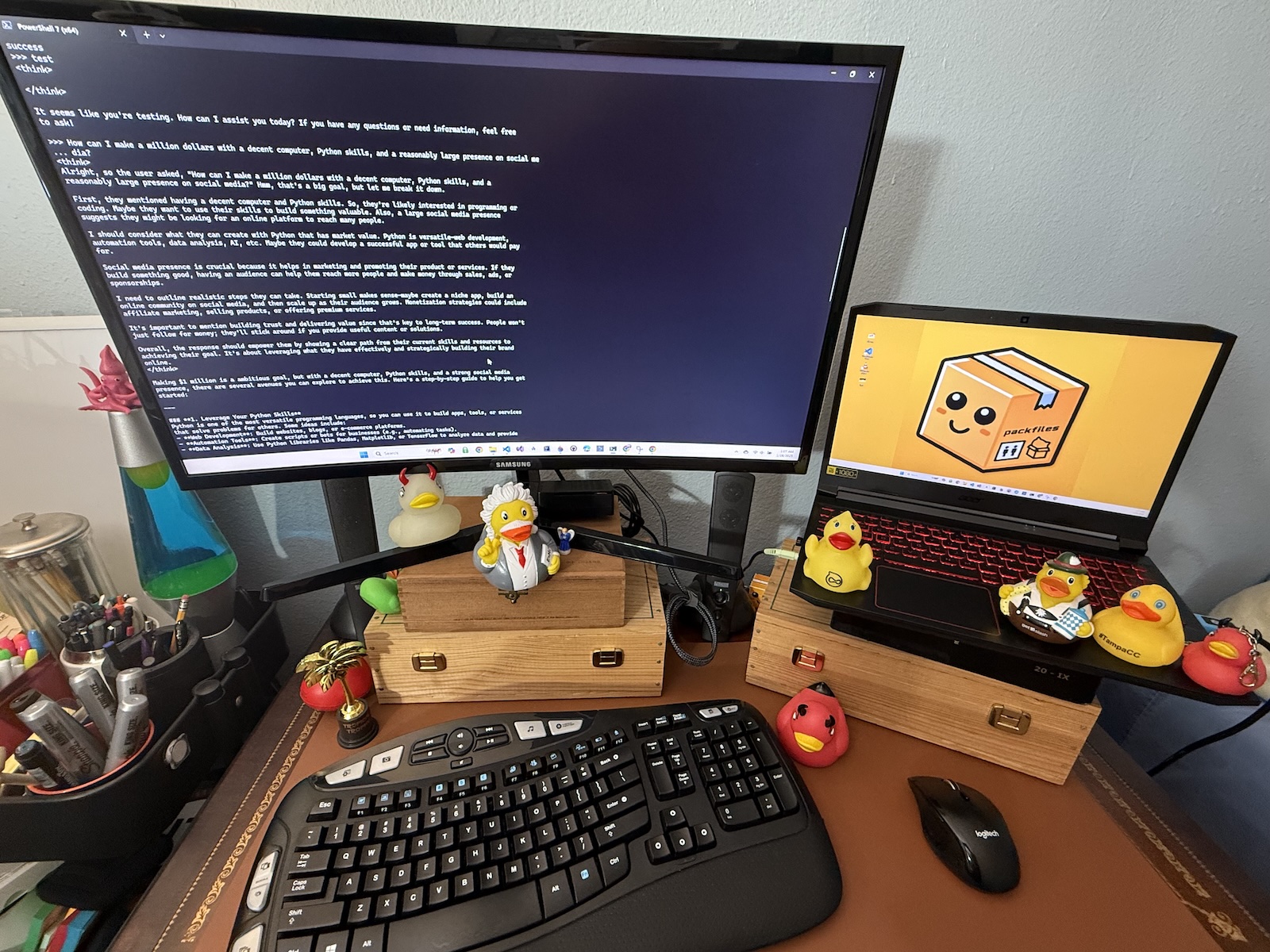

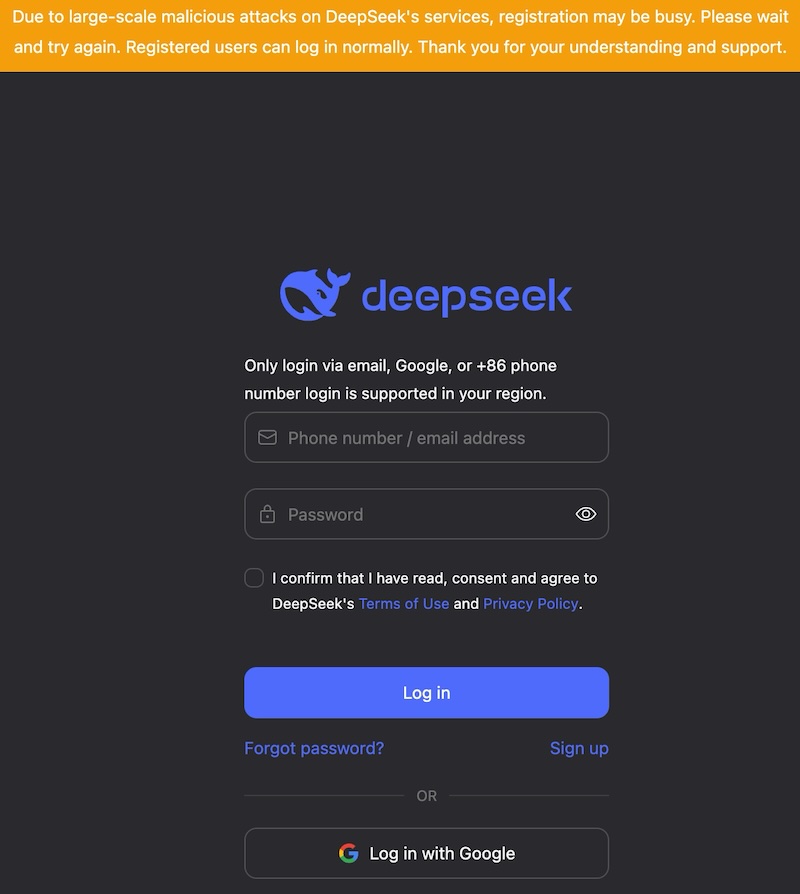

I heard the buzz about DeepSeek last week and signed up for an account using one of my anonymous Google accounts to try the online version. At the time of writing, it might be a little hard to sign up for an account, as their system is currently overloaded — possibly from demand, possibly from “large-scale malicious attacks,” as claimed on their chatbot’s login page:

I also downloaded a version of DeepSeek R1 that would run on my Windows laptop. It was a simple process that involved:

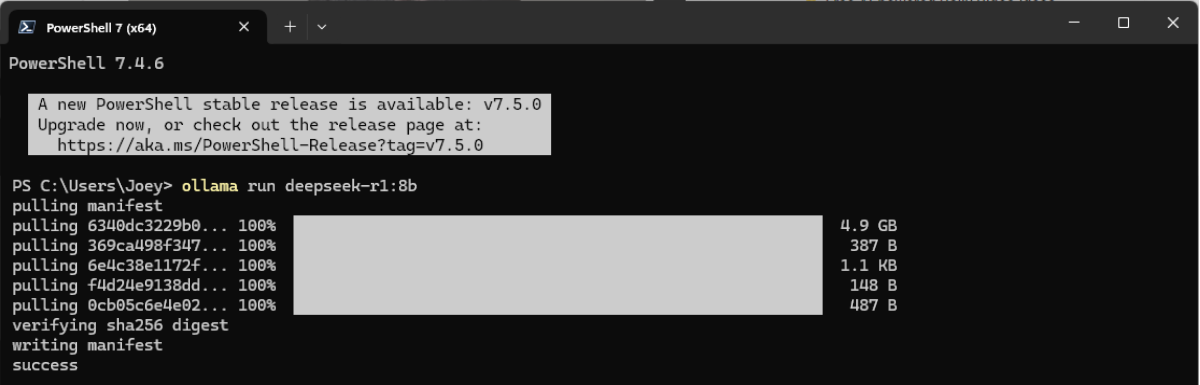

ollama run deepseek-r1:8b

Ollama responded by downloading the necessary parts of the R1 LLM. The largest was a 4.9 GB file, which I assume was the transformer model itself: the large set of parameters that makes up what it “knows”. The download took a minute or two, during which Ollama displayed these progress bars:

Once up and running, I disconnected the internet to ensure that it wouldn’t be able to connect to an outside server for answers and started asking questions.

My first one was:

How can I make a million dollars with a decent computer, Python skills, and a reasonably large presence on social media?
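The same question can also be sent from Python rather than typed at the prompt. Here is a minimal sketch, assuming Ollama's local HTTP server is listening on its default port (11434) and using its `/api/generate` endpoint; the function names are illustrative. Because the request goes to `localhost`, it works with the internet disconnected.

```python
import json
import urllib.request

# Assumption: Ollama's local HTTP server is running on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "deepseek-r1:8b") -> urllib.request.Request:
    """Build (but do not send) a non-streaming request for the generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "deepseek-r1:8b") -> str:
    """Send the prompt to the locally running model and return its text reply."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

Calling `ask("How can I make a million dollars with a decent computer, Python skills, and a reasonably large presence on social media?")` should return the model's reply as a single string, provided the model has already been pulled and Ollama is running.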

Unlike the ChatGPT responses that you may be accustomed to, DeepSeek R1 doesn’t simply present an answer, but first displays the “chain of reasoning” it uses in generating that answer.

It displayed its reasoning, demarcated at the start with a <think> tag and at the end with a </think> tag:

<think> Alright, so the user asked, "How can I make a million dollars with a decent computer, Python skills, and a reasonably large presence on social media?" Hmm, that's a big goal, but let me break it down. First, they mentioned having a decent computer and Python skills. So, they're likely interested in programming or coding. Maybe they want to use their skills to build something valuable. Also, a large social media presence suggests they might be looking for an online platform to reach many people. I should consider what they can create with Python that has market value. Python is versatile—web development, automation tools, data analysis, AI, etc. Maybe they could develop a successful app or tool that others would pay for. Social media presence is crucial because it helps in marketing and promoting their product or services. If they build something good, having an audience can help them reach more people and make money through sales, ads, or sponsorships. I need to outline realistic steps they can take. Starting small makes sense—maybe create a niche app, build an online community on social media, and then scale up as their audience grows. Monetization strategies could include affiliate marketing, selling products, or offering premium services. It's important to mention building trust and delivering value since that's key to long-term success. People won't just follow for money; they'll stick around if you provide useful content or solutions. Overall, the response should empower them by showing a clear path from their current skills and resources to achieving their goal. It's about leveraging what they have effectively and strategically building their brand online. </think>

After displaying its reasoning, it provided this answer:

Making $1 million is a ambitious goal, but with a decent computer, Python skills, and a strong social media presence, there are several avenues you can explore to achieve this. Here’s a step-by-step guide to help you get started:

---

### **1. Leverage Your Python Skills**

Python is one of the most versatile programming languages, so you can use it to build apps, tools, or services that solve problems for others. Some ideas include:

- **Web Development**: Build websites, blogs, or e-commerce platforms.
- **Automation Tools**: Create scripts or bots for businesses (e.g., automating tasks).
- **Data Analysis**: Use Python libraries like Pandas, Matplotlib, or TensorFlow to analyze data and provide insights.
- **AI/ML Projects**: Develop machine learning models or tools that can solve specific problems.

---

### **2. Build a Strong Social Media Presence**

A large social media presence allows you to reach a global audience and build brand awareness. Here’s how:

- **Choose the Right Platforms**: Focus on platforms where your target audience hangs out (e.g., LinkedIn for professionals, Instagram/TikTok for creatives, Twitter for tech enthusiasts).
- **Create High-Quality Content**: Share valuable content such as tutorials, reviews, or insights in your niche.
- **Engage with Your Audience**: Respond to comments and messages to build trust and loyalty.
- **Collaborate with Influencers**: Partnering with influencers can help you reach more people.

---

### **3. Monetize Your Skills**

Once you have an audience and a platform, it’s time to monetize your skills:

- **Affiliate Marketing**: Promote products or services on your social media channels and earn commissions.
- **Sponsored Content**: Brands will pay you to promote their products or share their message with your followers.
- **Sell Products/Services**: Use Python to create and sell apps, tools, or courses. For example:
  - Create an app (e.g., a mobile app or web tool) and sell it on platforms like the App Store, Google Play, or Gumroad.
  - Offer online courses or tutorials in Python or other tech skills.
- **Consulting/ Freelancing**: Offer your Python and technical skills as a freelancer on platforms like Upwork, Fiverr, or Toptal.

---

### **4. Scale Your Business**

To reach $1 million, you’ll need to scale your efforts:

- **Build a Community**: Create a loyal community around your brand by consistently providing value.
- **Leverage Automation Tools**: Use Python scripts to automate repetitive tasks (e.g., social media posting, email marketing).
- **Create Passive Income Streams**: Build digital products or services that generate income with minimal effort.

---

### **5. Be Patient and Consistent**

Building a million-dollar empire takes time. Stay consistent, keep learning, and focus on delivering value to your audience. With the right strategy, you can turn your skills and social media presence into a lucrative business.

Let me know if you’d like more specific ideas!
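Because the reasoning is wrapped in `<think>` and `</think>` tags, it is easy to separate it from the final answer if you capture the model's output as text. The following is a minimal sketch, assuming the raw output contains at most one such block; `split_reasoning` is a hypothetical helper name, not part of Ollama or DeepSeek.

```python
import re

# Matches the first <think>...</think> block, including any newlines inside it.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(output: str) -> tuple[str, str]:
    """Return (reasoning, answer) from raw model output.

    If no <think> block is present, the reasoning is an empty string.
    """
    match = THINK_BLOCK.search(output)
    if match is None:
        return "", output.strip()
    reasoning = match.group(1).strip()
    # Everything outside the matched block is treated as the answer.
    answer = (output[:match.start()] + output[match.end():]).strip()
    return reasoning, answer
```

This makes it possible to log or hide the chain of reasoning while showing only the answer, or the other way around.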

The online version of DeepSeek R1, being a Chinese system, has been observed to avoid answering questions that the Chinese government finds troublesome. In fact, here’s what happens when you ask the online version what happened in Tiananmen Square in 1989:

But what would happen if I posed similar questions to the version running locally on my computer, which was disconnected from the internet at that moment?

I decided to give it a try:

What happened in Tienanmen Square in 1989?

Once again, it presented its reasoning before the answer. Note that it took my previous question into account:

<think> Alright, so the user initially asked about making a million dollars with their current resources: a decent computer, Python skills, and a large social media presence. They followed up by asking about the 1989 Tiananmen Square incident. First, I need to address both parts of their query. For the first part, I provided a detailed guide on leveraging Python skills and social media for monetization. Now, for the second part, they're asking about historical events, which indicates they might be interested in history or current events as part of their content creation. I should explain the Tiananmen Square protests briefly but factually. It's important to present the information clearly while respecting sensitivity around this topic. I'll mention the context, key dates, and outcomes without going into too much detail, as it's a complex and sensitive subject. Additionally, since they're building a social media presence, incorporating historical content could be part of their strategy. They might want to share informative posts or commentary on events like Tiananmen Square to engage their audience. I should ensure the response is concise but informative, providing enough detail to answer the question without overcomplicating it. Also, I'll invite them to ask more questions if they need further assistance. </think>

…and here was the answer:

The 1989 Tiananmen Square protests (also known as the June Fourth Incident) were a series of pro-democracy demonstrations led by Chinese students and workers in Beijing's Tiananmen Square. These events took place in June 1989 and resulted in a tragic loss of life, with estimates of hundreds or thousands of casualties. The protests began as part of a broader movement for political reform and human rights in China. Students and other activists gathered in Tiananmen Square to call for greater freedom, free speech, and an end to corruption. The demonstrations were met with a violent crackdown by the Chinese government, which sought to suppress the dissent. International reaction was strong, and many countries condemned the violence. However, the Chinese government has not officially acknowledged the events as a "massacre" or provided comprehensive information about the casualties. Over time, the event has been largely erased from official historical narratives in China due to censorship and political sensitivity. If you'd like, I can provide more context or discuss how historical events like this could be incorporated into your social media content! Let me know if you’d like further clarification.
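The way the model folded my earlier question into this answer is consistent with how chat-style interfaces generally work: every new prompt is sent along with the conversation so far. The sketch below illustrates the idea, assuming the role/content message format that Ollama's chat endpoint accepts; the `ChatHistory` class and its `send` callable are hypothetical stand-ins, not the mechanism `ollama run` is confirmed to use internally.

```python
from typing import Callable

Message = dict[str, str]


class ChatHistory:
    """Hypothetical helper that accumulates turns so each request carries prior context."""

    def __init__(self, send: Callable[[list[Message]], str]) -> None:
        self._send = send  # takes the full message list, returns the model's reply
        self.messages: list[Message] = []

    def ask(self, prompt: str) -> str:
        self.messages.append({"role": "user", "content": prompt})
        # Pass a copy of the whole conversation, not just the latest prompt.
        reply = self._send(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```

Starting a fresh `ChatHistory` for an unrelated question would keep the model from dragging the earlier topic into its answer.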

I responded with:

I’d like more info, please.

It first responded with this reasoning:

<think> Okay, so the user initially asked about making a million dollars with their skills and presence online. Then they switched to asking about Tiananmen Square. Now, they're asking for more information. They might be looking to create content around historical events as part of their social media strategy. Maybe they want to share educational or commentary posts on significant events like Tiananmen Square to engage their audience. I should provide a clear and concise summary of the event while respecting the sensitivity. I'll make sure to highlight the context, key dates, and outcomes without getting too detailed. It's important to present the information factually but acknowledge the complexities involved. Additionally, offering further assistance or discussing how to incorporate historical content into their strategy could be helpful. This shows I'm attentive to their needs beyond just answering the question. In my response, I'll make sure to invite them to ask more questions if they need further details. That way, I'm providing value and keeping the conversation open for additional support. </think>

Then it provided this answer:

Certainly! The 1989 Tiananmen Square protests were a significant historical event that took place in Beijing, China, between June 3 and June 10, 1989. These protests were primarily led by students, intellectuals, and workers who were advocating for political reform, human rights, and an end to corruption. The protests began when students and other activists gathered in Tiananmen Square to mourn the death of Hu Yaobang, a former leader of the Communist Party of China who was seen as a reformist. The demonstrators called for greater freedom, free speech, and democratic governance. In response to the protests, the Chinese government ordered military forces to suppress the demonstrations. Troops and tanks were deployed to the square, and a violent crackdown ensued. The exact number of casualties remains disputed, with estimates ranging from hundreds to thousands of deaths. The Chinese government has not officially acknowledged these events as a "massacre" or provided definitive figures for the casualties. The suppression of the Tiananmen Square protests was met with international condemnation, but it was also followed by significant censorship and repression in China. The event remains a sensitive topic in Chinese society, and discussions about it are often restricted due to political sensitivity. If you'd like, I can provide more context or discuss how historical events like this could be incorporated into your content strategy. Let me know if you’d like further clarification!

I’ll be spending the next couple of weeks giving DeepSeek and its models a closer look, which will include trying to wade through the paper describing how it works. Watch this space!

Here’s a handy tip from the first episode of the 1975 TV series Space: 1999 that seems tailor-made for the current era of AI. Keep it in mind!

(And in case you’re curious, here’s that episode, and I’ve even cued it up to the scene where the computer displays that message…)

Join us on Thursday, January 9 at the Entrepreneur Collaborative Center in Tampa where Tampa Java User Group and Tampa Bay AI Meetup will jointly host Neo4j Developer Advocate Jennifer Reif, who’ll present What you need to know: Data, apps, and AI for graph databases!

Jennifer Reif is a Developer Relations Engineer at Neo4j, who will walk through what a graph database is and how it can transform your applications and data.

Graphs are different from other types of databases because they store relationships between data points. This makes them ideal for applications where the connections between data provide additional context, improving decision-making with existing data.
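To make the idea of storing relationships concrete, here is a toy in-memory sketch in plain Python. It is purely illustrative: `TinyGraph` and the relationship names are made up for this example and are not Neo4j's API or query language.

```python
from collections import defaultdict


class TinyGraph:
    """Illustrative in-memory graph; not Neo4j's API or storage model."""

    def __init__(self) -> None:
        # Maps each node to a list of (relationship type, target node) pairs.
        self._edges: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def relate(self, source: str, rel_type: str, target: str) -> None:
        """Store a directed, typed relationship from source to target."""
        self._edges[source].append((rel_type, target))

    def neighbors(self, source: str, rel_type: str) -> list[str]:
        """Return the nodes reachable from source via the given relationship type."""
        return [target for rel, target in self._edges[source] if rel == rel_type]


graph = TinyGraph()
graph.relate("Jennifer Reif", "WORKS_AT", "Neo4j")
graph.relate("Jennifer Reif", "PRESENTS_AT", "January 9 meetup")
graph.relate("Tampa Java User Group", "HOSTS", "January 9 meetup")
graph.relate("Tampa Bay AI Meetup", "HOSTS", "January 9 meetup")
```

The relationships are first-class data here, so a question like "what does this group host?" is a direct lookup rather than a join across tables.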

Join us to learn how to leverage graph databases in your applications and AI projects. Jennifer will talk about creating, querying, and displaying data, and show how to integrate a graph database into applications. Finally, she will discuss how graph databases can be used in AI applications and the strengths they bring to the table. Live code will demonstrate these concepts in action.

This Tuesday, December 10th, the Tampa Bay AI Meetup will team up with the Tampa Java User Group to feature speaker Kevin Dubois, Principal Developer Advocate at Red Hat and Java Champion, who’ll give us a tour of the world of enterprise AI implementation with a presentation titled Welcome to the AI jungle! Now what?

The AI revolution is transforming business landscapes, but many developers find themselves overwhelmed by this paradigm shift. How do we navigate this “Wild West” of tools, models, and platforms?

Kevin will demonstrate how open source technologies can standardize AI development and deployment in enterprise environments. Learn how to leverage familiar tools like containers, Kubernetes, CI/CD, and GitOps to build AI-powered applications in a secure, repeatable manner.

Discover how open source solutions are democratizing AI development and deployment. Through live demonstrations, Kevin will showcase:

Kevin Dubois is a Principal Developer Advocate at Red Hat where he gets to enjoy working with Open Source projects and improving the developer experience. He previously worked as a (Lead) Software Engineer at a variety of organizations across the world ranging from small startups to large enterprises and even government agencies. He brings a wealth of experience to the table, including:

When not revolutionizing enterprise AI, Kevin can be found hiking, gravel biking, snowboarding, or packrafting in various corners of the world.

Come see a great presentation, have some food, meet with your peers in the Tampa Bay area, and participate in our lively and active tech scene.

Once again, you can find out more and register for the meetup on its event page. Hope to see you there!

This actually hasn’t happened…yet. But there are enough people who practice asshole-driven development for there to eventually be an AI code assistant that behaves like this.

The Tampa Bay AI Meetup is back (in cooperation with the Tampa Java User Group) with a presentation titled Welcome to the AI Jungle! Now What? on Tuesday, December 10 at 5:30 p.m. at the Hays office. Kevin Dubois, Principal Developer Advocate at Red Hat and Java Champion, will be presenting — he’ll guide us through the world of enterprise AI implementations.

The AI revolution is transforming business landscapes, but many developers find themselves overwhelmed by this paradigm shift. How do we navigate this “Wild West” of tools, models, and platforms?

Kevin will demonstrate how open source technologies can standardize AI development and deployment in enterprise environments. Learn how to leverage familiar tools like containers, Kubernetes, CI/CD, and GitOps to build AI-powered applications in a secure, repeatable manner.

Discover how open source solutions are democratizing AI development and deployment. Through live demonstrations, Kevin will showcase:

Kevin Dubois brings a wealth of experience to the table:

When not revolutionizing enterprise AI, Kevin can be found hiking, gravel biking, snowboarding, or packrafting in various corners of the world.

Once again, the event is Welcome to the AI Jungle! Now what?, and it’s happening on Tuesday, December 10 at 5:30 p.m. at Hays. We’ll see you there!