C. Titus Brown delivering his presentation.

Here’s the first of my notes from the Science 2.0 conference, a conference for scientists who want to know how software and the web are changing the way they work. It was held on the afternoon of Wednesday, July 29th at the MaRS Centre in downtown Toronto and attended by 102 people. It was a little different from most of the conferences I attend, where the primary focus is on writing software for its own sake; this one was about writing or using software in the course of doing scientific work.

This entry contains my notes from C. Titus Brown’s presentation, Choosing Infrastructure and Testing Tools for Scientific Software Projects. Here’s the abstract:

The explosion of free and open source development and testing tools offers a wide choice of tools and approaches to scientific programmers. The increasing diversity of free and fully hosted development sites (providing version control, wiki, issue tracking, etc.) means that most scientific projects no longer need to self-host. I will explore how three different projects (VTK/ITK; Avida; and pygr) have chosen hosting, development, and testing approaches, and discuss the tradeoffs of those choices. I will particularly focus on issues of reliability and reusability juxtaposed with the mission of the software.

Here’s a quick bio for Titus:

C. Titus Brown studies developmental biology, bioinformatics and software engineering at Michigan State University, and he has worked in the fields of digital evolution and physical meteorology. A cross-cutting theme of much of his work has been software development for computational science, which has led him to software testing and agile software development practices. He is also a member of the Python Software Foundation and the author of several widely-used Python testing toolkits.

- Should you do open source science?

- Ideological reason: Reproducibility and open communication are supposed to be at the heart of good science

- Idealistic reason: It’s harder to change the world when you’re trying to do good science and keep your methods secret

- Pragmatic reason: Maybe having more eyes on your project will help!

- When releasing the code for your scientific project to the public, don’t worry about which open source licence to use – the important thing is to release it!

- If you’re providing a contact address for your code, provide a mailing list address rather than your own

- It makes it look less “Mickey Mouse” – you don’t seem like one person, but a group

- It makes it easy to hand off the project

- Mailing lists are indexed by search engines, making your project more findable

- Take advantage of free open source project hosting

- Distributed version control

- “You all use version control, right?” (Lots of hands)

- For me, distributed version control was awesome and life-changing

- It decouples the developer from the master repository

- It’s great when you’re working away from an internet connection, such as if you decide to do some coding on airplanes

- The distributed nature is a mixed blessing

- One downside is "code bombs", which are effectively forks of the project, created when people don’t check in changes often enough

- Code bombs lead to complicated merges

- Personal observation: the more junior the developer, the more they feel that their code isn’t “worthy” and they hoard changes until it’s just right. They end up checking in something that’s very hard to merge

- Distributed version control frees you from permission decisions – you can simply say to people who check out your code "Do what you want. If I like it, I’ll merge it."

- Open source vs. open development

- Do you want to simply release the source code, or do you want participation?

- I think participation is the better of the two

- Participation comes at a cost, in both support time and attitude

- There’s always that feeling of loss of control when you make your code open to use and modification by other people

- Some professors hate it when someone takes their code and does "something wrong" with it

- You’ll have to answer “annoying questions” about your design decisions

- Frank ("insulting") discussion of bugs

- Dealing with code contributions is time-consuming – it takes time to review them

- Participation is one of the hallmarks of a good open source project

- Anecdote

- I used to work on the “Project Earthshine” climatology project

- The idea behind the project was to determine how much of the sunlight hitting the Earth was being reflected away

- You can measure this by observing the crescent moon: the bright part is lit directly by the sun; the dark part is also lit – by sunlight reflected from the Earth

- You can measure the Greenhouse Effect this way

- It’s cheaper than measuring sunlight reflected by the Earth directly via satellite

- I did this work at Big Bear Lake in California, where they used telescopes at solar observatories to measure this effect

- I went through the source code of the application they were using, trying to figure out what the grad student who worked on it before me did

- It turned out that to get “smooth numbers” in the data, his code applied a correction several times

- His attitude was that there’s no such thing as too many corrections

- "He probably went on to do climate modelling, and we know how that’s going"

- How do we know that our code works?

- We generally have no idea whether our code works; all we do is gain hints

- And what does "works" mean anyway, in the context of research programming? Does it mean that it gives the results your PI expects?

- Two effects of that Project Earthshine experience:

- Nowadays, if I see agreement between 2 sources of data, I think at least one of them must be wrong, if not both

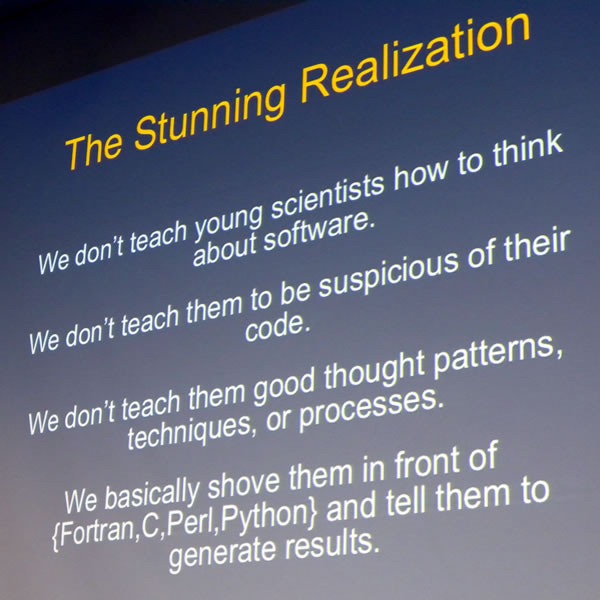

- I also came to a stunning realization that:

- We don’t teach young scientists how to think about software

- We don’t teach them to be suspicious of their code

- We don’t teach them good thought patterns, techniques or processes

- (Actually, CS folks don’t teach this to their students either)

- Fear is not a sufficient motivator: there are many documented cases where things have gone wrong because of bad code, and such cases will keep happening. Famous examples include:

- Therac-25 – a radiation therapy machine that administered lethal doses of radiation to patients

- Pfizer Backtracks on Benefit of Atorvastatin over Simvastatin – the result of a “programming error”

- A Sign, a Flipped Structure and a Scientific Flameout of Epic Proportions – several scientific papers retracted after a programming error was found

- If you’re throwing out experimental data because of its lack of agreement with your software model, that’s not a technical problem, that’s a social problem!

- Automated testing

- The basic idea behind automated testing is to write test code that runs your main code and verifies that it behaves as expected

- Example – regression test

- Run program with a given set of parameters and record the output

- At some later time, run the same program with the same parameters and record the output

- Did the output change in the second run, and if so, do you know why?

- This is a different question from "is my program correct?"

- If results change unintentionally, you should ask why
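Here is a minimal sketch of the regression test described above, in Python. The talk didn't show code, so everything here is an assumption for illustration: `simulate` is a hypothetical stand-in for your scientific program (a seeded random walk), and `regression_check` with its JSON "golden file" of recorded parameters and output is just one simple way to do it.

```python
import json
import os
import random
import tempfile


def simulate(seed, n_steps):
    """Hypothetical stand-in for your scientific code: a seeded random walk."""
    rng = random.Random(seed)  # fixed seed, so reruns are reproducible
    position = 0
    path = []
    for _ in range(n_steps):
        position += rng.choice((-1, 1))
        path.append(position)
    return path


def regression_check(func, params, golden_path):
    """Run func(**params) and compare it with a previously recorded run.

    If no recording exists yet, save this run as the baseline and return True.
    Otherwise return True only if both the parameters and the output match.
    """
    output = func(**params)
    if not os.path.exists(golden_path):
        with open(golden_path, "w") as f:
            json.dump({"params": params, "output": output}, f)
        return True
    with open(golden_path) as f:
        recorded = json.load(f)
    return recorded["params"] == params and recorded["output"] == output


# Usage: the first run records a baseline; later runs are compared against it.
with tempfile.TemporaryDirectory() as tmp:
    golden = os.path.join(tmp, "walk_seed42.json")
    params = {"seed": 42, "n_steps": 100}
    regression_check(simulate, params, golden)         # records the baseline
    assert regression_check(simulate, params, golden)  # unchanged code, same output
```

Note that a failed check only tells you *that* the output changed, not which run is right; the question of *why* it changed is still yours to answer. For floating-point output you would probably want to compare with a tolerance rather than exact equality.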

- Example – functional test

- Read in known data

- Check that the known data matches your expectations

- Does your data loading routine work?

- It works best if you also test with "tricky" data
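A functional test for a data loader might look something like this sketch. The `load_measurements` function and its two-column `time,value` text format are hypothetical; the second test feeds it the kind of "tricky" input mentioned above (comments, blank lines, stray whitespace, scientific notation, a missing value).

```python
import io
import math


def load_measurements(stream):
    """Hypothetical loader: parse "time,value" lines into (time, value) floats.

    Blank lines and '#' comments are skipped; an empty value field becomes
    NaN instead of silently disappearing."""
    rows = []
    for line in stream:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        time_str, value_str = line.split(",")
        value = float(value_str) if value_str.strip() else math.nan
        rows.append((float(time_str), value))
    return rows


def test_known_data():
    # Data whose correct parsed form we know in advance.
    data = io.StringIO("0.0,1.5\n1.0,2.5\n")
    assert load_measurements(data) == [(0.0, 1.5), (1.0, 2.5)]


def test_tricky_data():
    # Comment line, blank line, stray spaces, scientific notation, a gap.
    data = io.StringIO("# header\n\n 0.0 , 1e-3 \n1.0,\n")
    rows = load_measurements(data)
    assert len(rows) == 2
    assert rows[0] == (0.0, 0.001)
    assert rows[1][0] == 1.0 and math.isnan(rows[1][1])
```

Functions named `test_...` are collected automatically by pytest when placed in a test file, but they are ordinary functions too: calling them directly raises `AssertionError` if the loader misbehaves.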

- Example – assertions

- Put "assert parameter >=0" in your code

- Run it

- Do I ever pass garbage into this function?

- You’ll be surprised how often things that "should never happen" do happen

- Follow the classic Cold War motto: “Trust, but verify”
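Here's roughly what the "assert parameter >= 0" idea looks like inside a real function. The logistic-growth formula and the parameter names are an illustrative assumption, not something from the talk.

```python
def growth_rate(population, carrying_capacity, r):
    """Hypothetical example: logistic growth rate dN/dt = r * N * (1 - N / K)."""
    # Checks for things that "should never happen". If they do, stop
    # immediately instead of quietly producing garbage downstream.
    assert population >= 0, f"negative population: {population}"
    assert carrying_capacity > 0, f"non-positive carrying capacity: {carrying_capacity}"
    return r * population * (1 - population / carrying_capacity)


print(growth_rate(50, 100, 0.1))

try:
    growth_rate(-3, 100, 0.1)
except AssertionError as err:
    print("caught:", err)  # caught: negative population: -3
```

One caveat: Python skips `assert` statements when run with the `-O` flag, so a check that must always run should raise an exception such as `ValueError` explicitly.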

- There are other kinds of automated testing (acceptance testing, GUI testing), but they don’t usually apply to scientists

- In most cases, you don’t need to use specialized testing tools

- One exception is a code coverage tool

- Answers the question “What lines of code are executed?”

- Helps you discover dead code branches

- Guides test writing toward untested portions of code
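In practice you'd use an existing coverage tool; coverage.py, for example, is run as `coverage run your_script.py` followed by `coverage report -m`, which lists the line numbers that never executed. To show the underlying idea, here is a toy line tracer built on the standard library's `sys.settrace`. The names `classify` and `executed_lines` are hypothetical, and this is a sketch of the concept, not how any particular tool is implemented.

```python
import sys


def classify(x):
    """Hypothetical function with two branches."""
    if x >= 0:
        return "non-negative"
    return "negative"


def executed_lines(func, *args, **kwargs):
    """Call func(*args, **kwargs) and return the set of line numbers
    (in func's source file) that executed inside func itself."""
    target = func.__code__
    hit = set()

    def local_tracer(frame, event, arg):
        if event == "line":
            hit.add(frame.f_lineno)
        return local_tracer

    def global_tracer(frame, event, arg):
        # Only trace calls to func itself, not the functions it calls.
        return local_tracer if frame.f_code is target else None

    previous = sys.gettrace()
    sys.settrace(global_tracer)
    try:
        func(*args, **kwargs)
    finally:
        sys.settrace(previous)  # restore any debugger or coverage tracer
    return hit


# The "negative" branch is 4 lines below the def line in the layout above.
negative_branch = classify.__code__.co_firstlineno + 4
print(negative_branch in executed_lines(classify, 5))   # False: branch untested
print(negative_branch in executed_lines(classify, -5))  # True
```

If your tests only ever call `classify` with non-negative numbers, the "negative" line never shows up as executed, which is exactly the hint that tells you where to write the next test.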

- Continuous integration

- Have several "build clients" building your software, running tests and reporting back

- Does my code build and run on Windows?

- Does my code run under Python 2.4? Debian 3.0? MySQL 4?

- Answers the question: “Is there a chance in hell that anyone else can use my code?”
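A real continuous integration setup runs your build and tests on separate machines (different operating systems, interpreter versions, database versions) and reports the results back to a central place. As a rough single-machine stand-in for that idea, this sketch runs the same test command under each Python interpreter it can find. The `run_matrix` helper and the interpreter names in the usage line are assumptions for illustration; anything not installed is reported as "missing" rather than as a failure.

```python
import shutil
import subprocess
import sys


def run_matrix(interpreters, test_args):
    """Run `<interpreter> <test_args...>` for each interpreter.

    Returns a dict mapping each interpreter name to "pass", "fail",
    or "missing" (not found on this machine)."""
    results = {}
    for name in interpreters:
        exe = shutil.which(name)
        if exe is None:
            results[name] = "missing"
            continue
        proc = subprocess.run([exe, *test_args], capture_output=True)
        results[name] = "pass" if proc.returncode == 0 else "fail"
    return results


if __name__ == "__main__":
    # Hypothetical matrix: the current interpreter plus names that may not exist.
    print(run_matrix([sys.executable, "python3.8", "python3.12"],
                     ["-c", "import sys; print(sys.version)"]))
```

In a real project the test arguments would invoke your test suite (for instance `["-m", "pytest", "-q"]` if you use pytest), and a CI service would run each entry on its own build client.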

- Automated testing locks down "boring" code (that is, code you understand)

- Lets you focus on "interesting" code – tricky code or code you don’t understand

- Freedom to refactor, tinker, modify, for you and others

- If you want to suck people into your open source project:

- Choose your technology appropriately

- Write correct software

- Automated testing can help

- Closed source science is not science

- If you can’t see the code, it’s not falsifiable, and if it’s not falsifiable, it’s not science!
