…and it’s not something I ordered. I don’t even own a USB-C MacBook!

Of course, this means that the matching computer — which the new employer is sending my way — should be arriving soon.

Between the gig I’ve got teaching JavaScript and then Python a couple of evenings a week until the end of the year and a whole lot of technical interviews, I decided it was time to up my webcam lighting game. The result is pictured above and below.

I had an Amazon credit and a coupon code, so I got this thing for “cheaper than free”: A set of two 5600K USB-powered LED lights with tripods and filters…

It works quite well — and better than I expected. While it’s light and takes up little space once collapsed, it’s a little too flimsy for someone who needs on-the-go lighting.

However, if you plan on keeping the rig in just one place and not traveling with it, it’s a good setup for your video chats, meetings, interviews, classes, and so on. Check it out on Amazon.

The lights worked well for last night’s class and the previous week’s tech interview. I’ll write more about how the interview went soon.

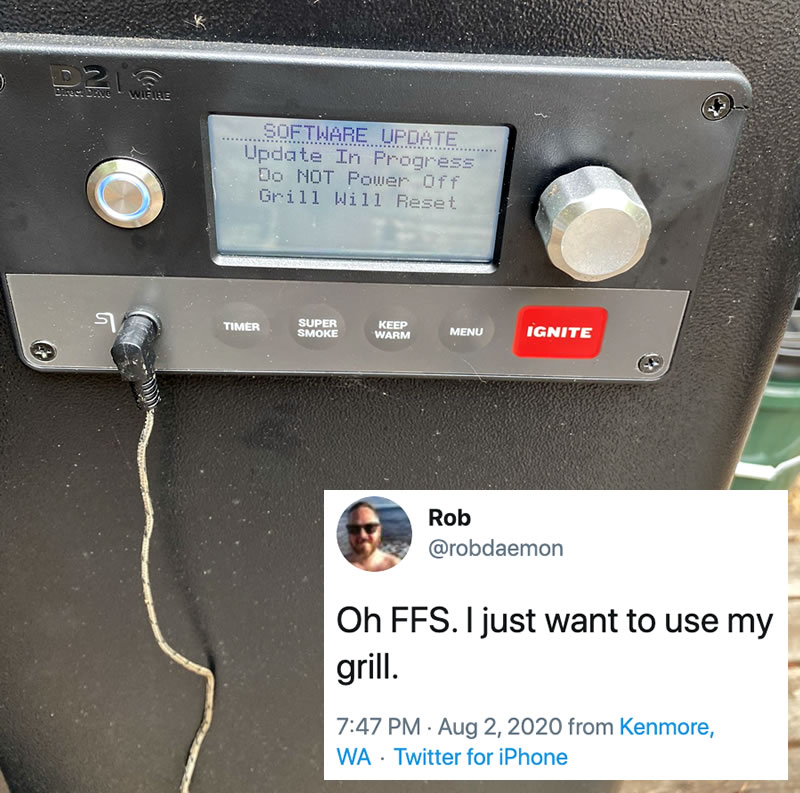

…wait until your IoT grill does it.

I need to look up this grill to see what its embedded controller does. Aside from…

…what else does it do that needs an update, never mind an update big enough to interfere with cooking?

My new Android phone, a Motorola One Hyper, which I wrote about a couple of weeks ago, came out of the box with Android 10.

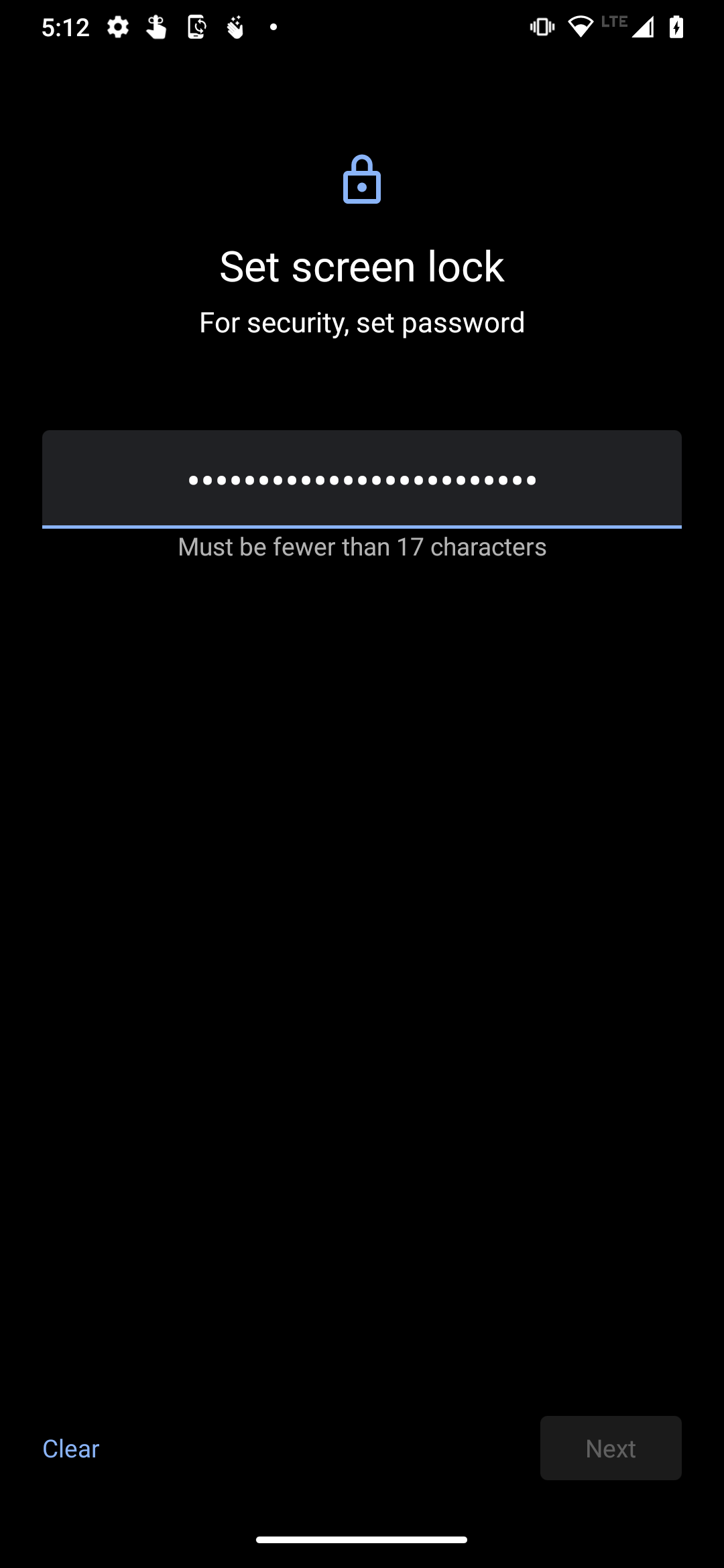

When it came time to set the passcode to unlock the phone, I found out that the longest device unlock passcode that even the most recent version of Android will accept is 16 characters. That was the case five years ago, and it’s still the case today.

Android’s “Choose Lock Password” screen is part of AOSP (Android Open Source Project), which means that its source code is easy to find online. It’s ChooseLockPassword.java, and the limitation is a constant defined in a class named ChooseLockPasswordFragment, which defines the portion of the screen where you enter a new passcode.

Here are the lines from that class that define passcode requirements and limitations:

    private int mPasswordMinLength = LockPatternUtils.MIN_LOCK_PASSWORD_SIZE;
    private int mPasswordMaxLength = 16;
    private int mPasswordMinLetters = 0;
    private int mPasswordMinUpperCase = 0;
    private int mPasswordMinLowerCase = 0;
    private int mPasswordMinSymbols = 0;
    private int mPasswordMinNumeric = 0;
    private int mPasswordMinNonLetter = 0;

Note the values assigned to these variables. It turns out that there are only two constraints on Android passcodes that are currently in effect:

- mPasswordMinLength, which is set to the value stored in the constant LockPatternUtils.MIN_LOCK_PASSWORD_SIZE. This is currently set to 6.
- mPasswordMaxLength, which is set to 16.

As you might have inferred from the other variable names, there may eventually be other constraints on passcodes (namely, minimums for the number of letters, uppercase letters, lowercase letters, symbol characters, numeric characters, and non-letter characters), but they’re currently not in effect.
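To make those two active constraints concrete, here’s a minimal Python sketch of the length check. It is not Android’s code: the function name `is_valid_passcode_length` is made up for illustration, and the limits of 6 and 16 are simply the values quoted above.

```python
# Hypothetical illustration only; this is not code from AOSP.
MIN_LENGTH = 6    # value quoted above for LockPatternUtils.MIN_LOCK_PASSWORD_SIZE
MAX_LENGTH = 16   # value quoted above for mPasswordMaxLength


def is_valid_passcode_length(passcode: str) -> bool:
    """Return True if the passcode satisfies the two length constraints."""
    return MIN_LENGTH <= len(passcode) <= MAX_LENGTH


if __name__ == "__main__":
    for candidate in ["abc", "hunter22", "a much longer passphrase"]:
        print(repr(candidate), len(candidate), is_valid_passcode_length(candidate))
```

Only the middle candidate passes: the first is too short, and the third, at 24 characters, runs into the 16-character ceiling.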

16 is a power of 2, and to borrow a line from Snow Crash, powers of 2 are numbers that a programmer would recognize “more readily than his own mother’s date of birth”. This might lead you to believe that 16 characters would be some kind of technical limit or requirement, but…

…Android (and in fact, every current non-homemade operating system) doesn’t store things like passcodes and passwords as-is. Instead, it stores the hashes of those passcodes and passwords. The magic of hash functions is that no matter how short or long the text you feed into them, their output is always the same fixed size (and a relatively compact size, too).

For example, consider SHA-256, from the SHA-2 family of hash functions:

| String value | Its SHA-256 hash |
|---|---|
| (empty string) | e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855 |
| x | 2d711642b726b04401627ca9fbac32f5c8530fb1903cc4db02258717921a4881 |
| Chunky bacon! | f0abf4f096ac8fa00b74dbcee6d24c18cfd8ab5409d7867c9767257d78427760 |
| I have come here to chew bubblegum and kick ass… and I’m all out of bubblegum! | 3457314d966ef8d8c66ee00ffbc46c923d1c01adb39723f41ab027012d30f7fd |
| (The full text of T.S. Eliot’s The Love Song of J. Alfred Prufrock) | 569704de8d4a61d5f856ecbd00430cfe70edd0b4f2ecbbc0196eda5622ba71ab |

No matter the length of the input text, the output of the SHA-256 function is always the same length: 64 characters, each one a hexadecimal digit.

Under the 16-character limit, the password will always be shorter than the hash that actually gets stored! There’s also the fact that in a time when storage is measured in gigabytes, we could store a hash that was thousands of characters long and not even notice.
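If you’d like to see the fixed-size property for yourself, here’s a small Python sketch using the standard library’s `hashlib` module. The helper name `sha256_hex` is my own, and this only demonstrates that the output length doesn’t change; it isn’t how Android stores passcodes (real systems typically add a salt and use a deliberately slow key-derivation function).

```python
import hashlib


def sha256_hex(text: str) -> str:
    """Return the SHA-256 digest of text (encoded as UTF-8) as a hex string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    samples = ["", "x", "Chunky bacon!", "a" * 100_000]
    for sample in samples:
        digest = sha256_hex(sample)
        # The digest is always 64 hex digits (256 bits), whatever the input length.
        print(f"{len(sample):>6} chars in -> {len(digest)} hex digits out: {digest}")
```

Whether the input is empty or 100,000 characters long, the digest comes out at 64 hexadecimal digits.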

My guess is that the Android passcode size limit of 16 characters is purely arbitrary. Perhaps they thought that 16-character passwords like the ones below were the longest that anyone would want to memorize:

TvsV@PA9UNa$yvNN sDrgjwN#Vc^pmjL4 argmdKAP?!Gzh9mG <Wea2CKufNk+UuU8 EmNv%LN9w4T.sc76

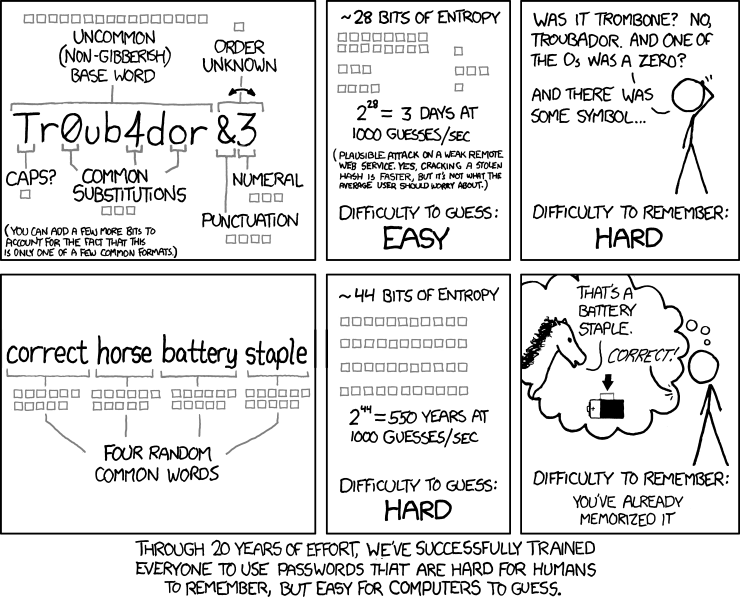

The problem is that it doesn’t account for (theoretically) more secure yet easier to remember passwords of the “correct horse battery staple” method described in the webcomic xkcd, which can easily make passwords longer than 16 characters:

Based on usability factors, there is a point after which a password is just too long, but it’s not 16 characters. I think that iOS’ 37-character limit is more suitable.

In my opinion, if you want the best bang and build quality for the buck in an Android phone, check out Motorola’s phones. Lenovo, the same company who took […]. Right now, they’ve got discounts on many of their mobiles, including $100 off any of the Motorola One family: the Action, the Zoom, and the one I got, the Hyper.

With the discount, the unlocked Hyper goes for US$299 when purchased directly from Motorola. That’s a pretty good price for an Android phone with mid-level specs.

Released on January 22, 2020, the Hyper features the Qualcomm Snapdragon 675 chipset, which was released in October 2018. This chipset features 8 cores:

Its GPU is the Adreno 612, and it has an X12 LTE modem with category 13 uplink and category 15 downlink.

Here’s a quick video review of this chipset from Android Authority’s Gary Sims:

As a point of reference, this chipset is also used in Samsung’s Galaxy A70, A60, and M40, and LG’s Q70.

This chipset puts the Moto One Hyper firmly in the middle of the road of current Android offerings, making it a reasonably representative device for an indie Android developer/article author like Yours Truly.

The phone’s “Hyper” name is a reference to its “hyper charging” — high-speed charging thanks to its ability to take a higher level of power during the charging process. It comes with an 18 watt charger (the same level of power provided by the current iPad Pro and iPhone 11 chargers), but if you have a 45 watt charger handy, the phone’s 4,000 mAh battery will charge in just over 10 minutes.

The phone also comes with the usual literature and SIM extraction pin:

There is one additional goodie that I didn’t expect: a clear, flexible, rubber-like plastic case. It’s nothing fancy, but it was still a nice surprise.

I’ll post more details about the phone as I use it and start doing development work (native stuff in Kotlin, as well as some cross-platform work in Flutter, and maybe even Kivy).

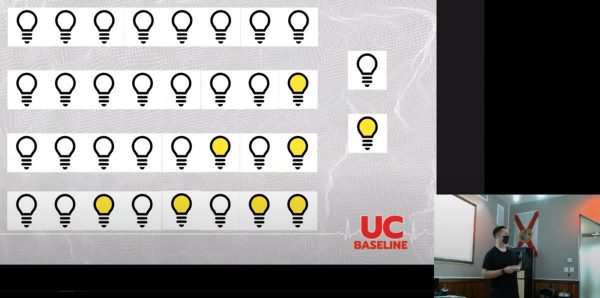

Day 4 of the Hardware 101 component of the UC Baseline cybersecurity program was all about security for the enterprise, which naturally included topics such as servers. Not everyone in the class has had the opportunity to tour a server room or data center, and this was their chance to see these machines up close.

Unlike the previous days, we did not attempt to dismantle and then reassemble the servers — this was a “look, but don’t touch” sort of lesson.

We also had a guest lecturer who gave us a pretty thorough walkthrough of the sorts of things involved in an enterprise server/data center setup, some of which went way over my head. I don’t see a sysadmin/system architect role in my future, but it might not hurt for me to do some supplementary reading on this topic.

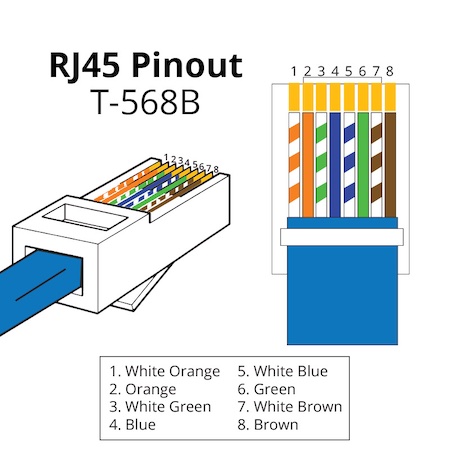

Day 5 was the final day of Hardware 101 and started with something that I’ve always been terrible at: Making networking cables.

Arrrrgh.

We also spent some time looking over all sorts of intrusion devices, such as the incredibly cute “Pwnagotchi”, a Raspberry Pi Zero-based device that “listens” to wifi chatter to feed its machine learning program in order to figure out wifi passwords.

It uses an e-paper screen, which is quite legible and consumes little power.

It’s incredibly small:

Here’s a Pwnagotchi beside a U.S. quarter for size reference:

A great way to steal information to gain access to people’s accounts and systems is to set up a fake wifi hotspot at a place that offers free wifi, such as Starbucks. That’s what the Wifi Pineapple is for — people connect to it, thinking they’re connecting to Starbucks wifi. You route their signals through to the real Starbucks wifi, but you’re the go-between, and can “see” everything that your marks are sending on the internet: the data they’re passing back and forth, including stuff like user IDs and passwords:

Here’s the actual unit:

Here’s a wrist-mounted device for performing wifi de-authentication attacks:

It sends out a signal that causes devices currently connected to wifi to disconnect. You could use it in tandem with a Wifi Pineapple to force people to disconnect from the real wifi and then connect to the Pineapple instead, enabling you to read their internet communications.

If you really want to “sniff” all the wifi traffic in the room, you’ll want one of these — a high-gain antenna system hooked to a network interface controller (NIC) that reads signals in “promiscuous mode”, a capability that’s disabled in most NICs. In promiscuous mode, you can capture all wifi traffic instead of the bits of data that you’re authorized to receive. It’s a good network diagnostics tool — and it’s also useful for getting up to no good:

And finally, the Shark Jack. Plug it into someone’s network, either via the ethernet jack or USB, and it will execute scripts to get a map of the network or even deliver a payload somewhere onto the system:

It’s basically a real-world version of the device that Tony Stark slipped onto the command console of the SHIELD helicarrier in the first Avengers movie (it’s at the 0:44 mark):

I may have to invest in one of those bad boys. For research purposes, you understand.

We also had a guest lecturer who delivered a very thorough and informative presentation on getting started in cybersecurity. I’ll have to post notes on it later:

And at the end of the day, we were each issued our very own Raspberry Pi 4 Model B’s!

These were the Labists versions, and I have to say, I prefer their offering over CanaKit’s.

Here’s what the board looks like:

It has some pretty impressive specs, especially when you consider that it retails for under $100:

It also comes with a pretty nice case…

…a power supply with an actual on/off switch on the cord, and not one, but two micro-HDMI to full-size HDMI cables…

…heatsinks and a fan, plus a screwdriver…

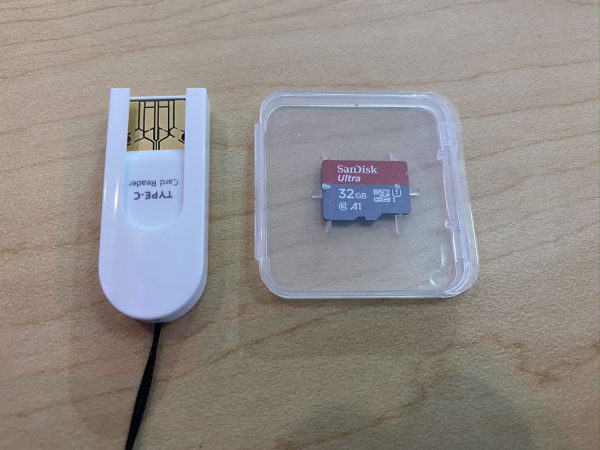

…and a micro-SD card and USB adapter so that you can use your standard computer to download an OS…

I spent some time over the weekend noodling with it, and wow, is it a fun computer to play with!

We’re expected to use it for this week’s classes, which make up the “Networking 101” portion of the UC Baseline program. I’m looking forward to it!

For the benefit of my classmates in the UC Baseline program (see this earlier post to find out what it’s about), I’m posting a regular series of notes here on Global Nerdy to supplement the class material. As our instructor Tremere said, what’s covered in the class merely scratches the surface, and that we should use it as a launching point for our own independent study.

There was a lot of introductory material to cover on day one of the Hardware 101 portion of the program, and there’s one bit of basic but important material that I think deserves a closer look, especially for my fellow classmates who’ve never had to deal with it before: How binary and hexadecimal numbers are related.

Consider the population of Florida. According to the U.S. Census Bureau, on July 1, 2019, that number was estimated to be 21,477,737 in base 10, a.k.a. the decimal system.

Here’s the same number, expressed in base 2, a.k.a. the binary system: 1010001111011100101101001.

That’s the problem with binary numbers: Because they use only two digits, 0 and 1, they grow in length extremely quickly, which makes them hard for humans to read. Can you tell the difference between 100000000000000000000000 and 1000000000000000000000000? Be careful, because those two numbers are significantly different — one is twice the size of the other!

(Think about it: In the decimal system, you make a number ten times as large by tacking a 0 onto the end. For the exact same reason, tacking a 0 onto the end of a binary number doubles that number.)
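Here’s a quick way to convince yourself of the doubling rule in Python. The helper `append_zero` is just a name I made up for this sketch; `int(text, 2)` is the built-in way to read a string of binary digits as a number.

```python
def append_zero(binary_digits: str) -> str:
    """Tack a 0 onto the end of a string of binary digits."""
    return binary_digits + "0"


if __name__ == "__main__":
    original = "1011"                  # 11 in decimal
    longer = append_zero(original)     # "10110"
    print(int(original, 2))            # 11
    print(int(longer, 2))              # 22
    print(int(longer, 2) == 2 * int(original, 2))  # True
```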

Once again, the problem is that:

What we need is a numerical system that:

Luckily for us, there’s a numerical system that fits this description: Hexadecimal. The root words for hexadecimal are hexa (Greek for “six”) and decimal (from Latin for “ten”), and it means base 16.

Using 4 binary digits, you can represent the numbers 0 through 15:

| Decimal | Binary |
|---|---|
| 0 | 0000 |
| 1 | 0001 |
| 2 | 0010 |
| 3 | 0011 |
| 4 | 0100 |
| 5 | 0101 |
| 6 | 0110 |
| 7 | 0111 |
| 8 | 1000 |
| 9 | 1001 |
| 10 | 1010 |
| 11 | 1011 |
| 12 | 1100 |
| 13 | 1101 |
| 14 | 1110 |
| 15 | 1111 |

Hexadecimal is the answer to the question “What if we had a set of digits that represented the 16 numbers of 0 through 15?”

Let’s repeat the above table, this time with hexadecimal digits:

| Decimal | Binary | Hexadecimal |
|---|---|---|
| 0 | 0000 | 0 |
| 1 | 0001 | 1 |
| 2 | 0010 | 2 |
| 3 | 0011 | 3 |
| 4 | 0100 | 4 |
| 5 | 0101 | 5 |
| 6 | 0110 | 6 |
| 7 | 0111 | 7 |
| 8 | 1000 | 8 |
| 9 | 1001 | 9 |
| 10 | 1010 | A |
| 11 | 1011 | B |
| 12 | 1100 | C |
| 13 | 1101 | D |
| 14 | 1110 | E |
| 15 | 1111 | F |

Hexadecimal gives us easier-to-read numbers where each digit represents a group of 4 binary digits. Because of this, it’s easy to convert back and forth between binary and hexadecimal.
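The table lookup is simple enough to sketch in a few lines of Python. The names here (`NIBBLE_TO_HEX`, `binary_to_hex`, `hex_to_binary`) are my own, and Python’s built-ins can do this conversion directly; the point of the sketch is to mirror the “4 binary digits per hexadecimal digit” idea, using the Florida population number from earlier as input.

```python
# Lookup tables built from the 16 rows of the table above.
HEX_DIGITS = "0123456789ABCDEF"
NIBBLE_TO_HEX = {format(value, "04b"): digit for value, digit in enumerate(HEX_DIGITS)}
HEX_TO_NIBBLE = {digit: bits for bits, digit in NIBBLE_TO_HEX.items()}


def binary_to_hex(bits: str) -> str:
    """Convert a string of binary digits to hexadecimal, 4 bits at a time."""
    # Pad on the left with zeros so the length is a multiple of 4.
    padded_length = -(-len(bits) // 4) * 4
    padded = bits.zfill(padded_length)
    groups = [padded[i:i + 4] for i in range(0, len(padded), 4)]
    return "".join(NIBBLE_TO_HEX[group] for group in groups)


def hex_to_binary(hex_digits: str) -> str:
    """Convert a string of hexadecimal digits to binary, 4 bits per digit."""
    return "".join(HEX_TO_NIBBLE[digit] for digit in hex_digits.upper())


if __name__ == "__main__":
    florida = "1010001111011100101101001"   # Florida's population, from earlier
    print(binary_to_hex(florida))           # 147B969
    print(hex_to_binary("147B969"))         # 0001010001111011100101101001
```

Note that the 25-digit binary number gets three leading zeros so that it splits evenly into groups of 4; leading zeros don’t change its value.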

Since we’re creatures of base 10, we have the single characters to represent the digits 0 through 9, but no single character to represent 10, 11, 12, 13, 14, and 15, which are digits in hexadecimal. To work around this problem, hexadecimal uses the first 6 letters from the Roman alphabet: A, B, C, D, E, and F.

Let’s try representing a decimal number in binary, and then hexadecimal. Consider the number 49,833. It’s what you get when you read the two bytes that encode ©, the copyright symbol, in UTF-8 as a single number (the character’s Unicode code point itself is U+00A9, or 169). Here’s its representation in binary:

1100001010101001

That’s a hard number to read, and if you had to manually enter it, the odds are pretty good that you’d make a mistake. Let’s convert it to its hexadecimal equivalent.

We do this by first breaking that binary number into groups of 4 bits (remember, a single hexadecimal digit represents 4 bits, and “bit” is a portmanteau for “binary digit”):

1100 0010 1010 1001

Now let’s use the table above to look up the hexadecimal digit for each of those groups of 4:

1100 0010 1010 1001

C 2 A 9

There you have it:

Because we’re base 10 creatures, we simply write decimal numbers as-is:

49,833

To indicate that a number is in binary, we prefix it with the number zero followed by a lowercase b:

0b1100001010101001

This is a convention used in many programming languages. Try it for yourself in JavaScript:

    // This will print "49833" in the console
    console.log(0b1100001010101001)

Or if you prefer, Python:

    # This will print "49833" in the console
    print(0b1100001010101001)

To indicate that a number is in hexadecimal, we prefix it with the number zero followed by a lowercase x:

0xC2A9

Once again, try it for yourself in JavaScript:

    // Both of these will print "49833" in the console
    console.log(0xc2a9)
    console.log(0xC2A9)

Or Python:

    # Both of these will print "49833" in the console
    print(0xc2a9)
    print(0xC2A9)
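Going the other way, from a number to its binary or hexadecimal text and back again, is also built into Python. Here’s a short sketch using only built-in functions (`bin`, `hex`, `format`, and `int` with a base argument); the variable name `number` is just for illustration.

```python
number = 49833

# Number to text
print(bin(number))            # 0b1100001010101001
print(hex(number))            # 0xc2a9
print(format(number, "X"))    # C2A9 (uppercase, no prefix)
print(format(number, "016b")) # 1100001010101001 (16 binary digits, no prefix)

# Text to number: the second argument to int() is the base
print(int("1100001010101001", 2))   # 49833
print(int("C2A9", 16))              # 49833
print(int("0xC2A9", 16))            # 49833 (the prefix is allowed for base 16)
```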

A single hexadecimal digit represents 4 bits, and my favorite term for a group of 4 bits is nybble. The 4 bits that make up a nybble can represent the numbers 0 through 15.

“Nybble” is one of those computer science-y jokes that’s based on the fact that a group of 8 bits is called a byte. I’ve seen the terms half-byte and tetrade also used.

Two hexadecimal digits represent 8 bits, and a group of 8 bits is called a byte. The 8 bits that make up a byte can represent the numbers 0 through 255, or the numbers -128 through 127.

In the era of the first general-purpose microprocessors, the data bus was 8 bits wide, and so byte was the standard unit of data. Every character in the ASCII character set can be expressed in a single byte. Each of the 4 numbers in an IPv4 address is a byte.

Four hexadecimal digits represent 16 bits, and a group of 16 bits is most often called a word. The 16 bits that make up a word can represent the numbers 0 through 65,535 (a number sometimes referred to as “64K”), or the numbers -32,768 through 32,767.

If you were computing in the late ’80s or early ’90s — the era covered by Windows 1 through 3 or Macs in the classic chassis — you were using a 16-bit machine. That meant that it stored data a word at a time.

Eight hexadecimal digits represent 32 bits, and a group of 32 bits is often called a double word or DWORD; I’ve also heard the unimaginative term “32-bit word”. The 32 bits that make up a double word can represent the numbers 0 through 4,294,967,295 (a number sometimes referred to as “4 gigs”), or the numbers −2,147,483,648 through 2,147,483,647.

32-bit operating systems and computers came about in the mid-1990s. Some are still in use today, although they’d now be considered older or “legacy” systems.

The IPv4 address system uses 32 bits, which means that it can represent a maximum of 4,294,967,296 internet addresses. That’s fewer addresses than there are people on earth, and as you might expect, we’re running out of these addresses. There are all manner of workarounds, but the real solution is for everyone to switch to IPv6, which uses 128 bits and allows for over 3 × 10³⁸ addresses, enough to assign 100 addresses to every atom on the surface of the earth.
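To see that an IPv4 address really is just a 32-bit number written as four bytes, here’s a small sketch using Python’s standard `ipaddress` module. The address 192.168.1.1 is an arbitrary example I picked, not one from the class material.

```python
import ipaddress

address = ipaddress.IPv4Address("192.168.1.1")  # an arbitrary example address

# Each of the four numbers is one byte; together they form one 32-bit number.
as_int = int(address)
print(as_int)                    # 3232235777
print(f"{as_int:08X}")           # C0A80101
print(list(address.packed))      # [192, 168, 1, 1]

# Total address counts for 32-bit IPv4 and 128-bit IPv6.
print(f"{2 ** 32:,}")            # 4,294,967,296
print(f"{2 ** 128:.2e}")         # 3.40e+38
```

Reading the hexadecimal output two digits at a time gives the four bytes back: C0 is 192, A8 is 168, and 01 is 1.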

16 hexadecimal digits represent 64 bits, and a group of 64 bits is often called a quadruple word, quad word, or QWORD; I’ve also heard the unimaginative term “64-bit word”. The 64 bits that make up a quad word can represent the numbers 0 through 18,446,744,073,709,551,615 (about 18.4 quintillion), or the numbers -9,223,372,036,854,775,808 through 9,223,372,036,854,775,807 (about minus 9.2 quintillion through 9.2 quintillion).

If you have a Mac and it dates from 2007 or later, it’s probably a 64-bit machine. macOS has supported 32- and 64-bit applications, but from macOS Catalina (which came out in 2019) onward, it’s 64-bit only. As for Windows-based machines, if your processor is an Intel Core 2/i3/i5/i7/i9 or AMD Athlon 64/Opteron/Sempron/Turion 64/Phenom/Athlon II/Phenom II/FX/Ryzen/Epyc, you have a 64-bit processor.
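All of the ranges quoted for nybbles, bytes, words, double words, and quad words come from the same two formulas, which you can check with a short Python sketch. The function names are my own, and the signed ranges assume two’s complement representation, which is what the negative-to-positive ranges above correspond to.

```python
def unsigned_range(bits: int) -> tuple[int, int]:
    """Smallest and largest values of an unsigned integer with this many bits."""
    return 0, 2 ** bits - 1


def signed_range(bits: int) -> tuple[int, int]:
    """Smallest and largest values of a two's complement signed integer."""
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1


if __name__ == "__main__":
    for name, bits in [("nybble", 4), ("byte", 8), ("word", 16),
                       ("double word", 32), ("quad word", 64)]:
        low, high = signed_range(bits)
        print(f"{name:>11} ({bits:>2} bits): 0 to {unsigned_range(bits)[1]:,}"
              f" or {low:,} to {high:,}")
```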

The Khan Academy has a pretty good explainer of the decimal, binary, and hexadecimal number systems: